When you search for "who invented the telephone," you expect a clear, factual answer. But what if the AI that gives you that answer was trained on data that ignored women inventors? Or what if it quietly downplays climate science because a corporate sponsor doesn’t like the results? This isn’t science fiction. It’s happening right now in knowledge platforms powered by AI.

AI Isn’t Neutral: It Reflects Human Biases

AI systems don’t make up bias out of thin air. They learn from the data humans give them. And that data? It’s full of gaps, stereotypes, and historical distortions. A 2024 study by the AI Now Institute found that major AI-powered encyclopedic tools misrepresented women’s contributions in science and technology 68% of the time compared to male counterparts. In another test, when asked about medical conditions common in Black patients, the same AI systems gave outdated or incorrect advice 42% more often than for white patients.

This isn’t about bad code. It’s about bad input. If your training data comes mostly from English-language academic journals written in the U.S. and Europe, you’re not building global knowledge; you’re building a narrow, Western-centric view. And when platforms like Wikipedia, Google’s Knowledge Graph, or even AI chatbots used in schools rely on this data, they’re not just repeating history. They’re reinforcing it.

Who Gets to Decide What’s True?

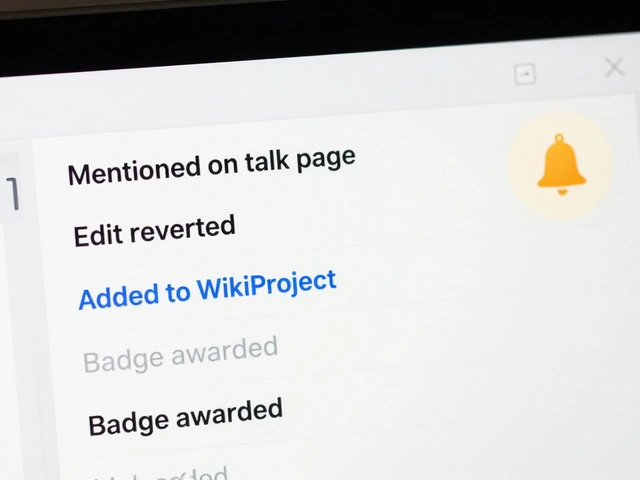

For decades, knowledge platforms like Wikipedia had human editors. People checked sources. They argued over wording. They removed misinformation. Now, many of those decisions are automated. AI filters edits, flags "low-quality" content, and even auto-corrects facts based on patterns it learned from past edits.

But here’s the problem: automated systems don’t understand context. In 2023, an AI tool on a major knowledge platform automatically removed a well-sourced entry about Indigenous land rights because it "lacked citations from peer-reviewed journals." The sources? Tribal oral histories and government treaties. The AI didn’t recognize them as valid. The entry vanished. It took three months and a public outcry to restore it.

When you hand over editorial control to algorithms, you’re not making knowledge more efficient; you’re making it more fragile. You’re letting code decide what counts as truth, and code doesn’t care about cultural nuance, historical trauma, or lived experience.

Safety Isn’t Just About Harmful Content

Most people think "AI safety" means stopping hate speech or fake news. That’s important. But in knowledge platforms, safety also means protecting truth from being quietly erased. Think of it this way: if an AI deletes 100 false claims but also removes 50 accurate ones that challenge dominant narratives, you haven’t made the platform safer. You’ve made it narrower and less accurate.
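To make the trade-off in that thought experiment concrete, here is a minimal Python sketch of the arithmetic. It treats a moderation system's deletions like a classifier's positive predictions and reports their precision (the share of removals that actually hit false claims) alongside the number of accurate claims lost. The function name `removal_report` is hypothetical, and the counts are the article's illustrative numbers, not measurements.

```python
def removal_report(false_removed: int, accurate_removed: int) -> dict:
    """Summarize how well a system's deletions targeted false claims.

    precision: share of removals that hit false claims (higher is better).
    accurate_lost: number of correct claims erased along the way.
    """
    total = false_removed + accurate_removed
    # With no removals at all, nothing accurate was wrongly removed, so treat precision as 1.0.
    precision = false_removed / total if total else 1.0
    return {"removed": total, "precision": precision, "accurate_lost": accurate_removed}


# The article's hypothetical scenario: 100 false claims and 50 accurate ones deleted.
report = removal_report(false_removed=100, accurate_removed=50)
print(f"{report['removed']} removals, precision {report['precision']:.0%}, "
      f"{report['accurate_lost']} accurate claims lost")
```

With those numbers, precision comes out to about 67%: one deletion in three erased something accurate, which a headline tally of "100 false claims removed" would hide.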

Take the case of climate change denial. AI systems trained to "balance" viewpoints sometimes give equal weight to fringe opinions and peer-reviewed science. A 2025 audit of three major AI knowledge tools showed that when asked "Is climate change real?", two of them offered a "both sides" response, even though 99% of climate scientists agree it’s human-caused. That’s not neutrality. That’s deception dressed up as fairness.

True AI safety means knowing when to trust data and when to reject false equivalence. It means prioritizing consensus over controversy, and evidence over opinion.

Editorial Control Can’t Be Outsourced

Some platforms claim they’re "just the messenger" and aren’t responsible for what the AI says. That’s a cop-out. If you’re building a knowledge platform, you’re responsible for what people believe.

Here’s what real editorial control looks like:

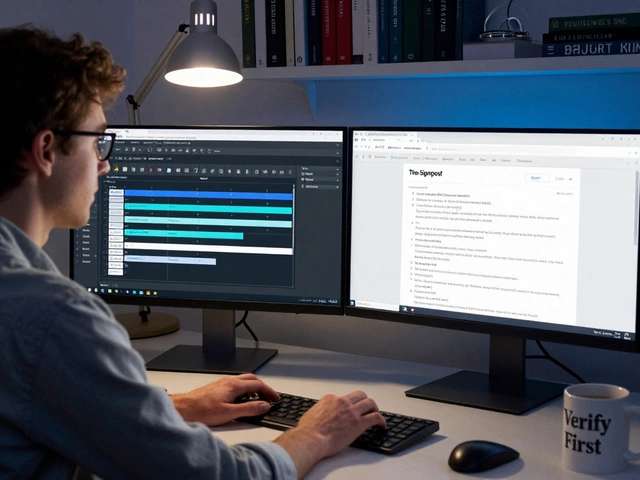

- Human-in-the-loop review for high-impact topics: medical info, history, law, human rights. No AI should auto-publish facts about genocide, elections, or mental health without human oversight.

- Transparency logs that show why an edit was accepted or rejected. If your AI removes a sentence, users should see: "Removed because source X was flagged as unreliable. Source X is a peer-reviewed journal from the University of Cape Town. Why was it flagged?"

- Diverse editorial boards that include historians, Indigenous scholars, disability advocates, and non-Western researchers, not just engineers and data scientists.

- Open-source training data so anyone can audit what the AI was taught. If a platform uses proprietary data, you’re trusting a black box.
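The first two practices, human-in-the-loop review and transparency logs, can be sketched as a small review gate. This is a hypothetical Python illustration, not any platform's actual system: the topic list, the `ReviewGate`, `Edit`, and `LogEntry` names, and the decision labels are all assumptions. Edits on high-impact topics, or from flagged sources, are queued for a human instead of being published or deleted automatically, and every decision, automated or human, is appended to a log with a readable reason.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical list of high-impact topics; a real platform would define its own.
HIGH_IMPACT_TOPICS = {"medicine", "history", "law", "human rights", "elections", "mental health"}


@dataclass
class Edit:
    edit_id: str
    topic: str
    text: str
    source: str


@dataclass
class LogEntry:
    edit_id: str
    decision: str  # "published", "queued_for_review", "approved", or "rejected"
    reason: str    # human-readable explanation that users can see
    actor: str     # "automated" or the reviewer's name
    timestamp: str


@dataclass
class ReviewGate:
    flagged_sources: set = field(default_factory=set)
    queue: dict = field(default_factory=dict)  # edit_id -> Edit awaiting a human decision
    log: list = field(default_factory=list)    # append-only transparency log

    def _record(self, edit_id: str, decision: str, reason: str, actor: str) -> str:
        self.log.append(LogEntry(edit_id, decision, reason, actor,
                                 datetime.now(timezone.utc).isoformat()))
        return decision

    def submit(self, edit: Edit) -> str:
        """Publish automatically only when the topic is low-impact and the source is unflagged."""
        if edit.topic in HIGH_IMPACT_TOPICS:
            self.queue[edit.edit_id] = edit
            return self._record(edit.edit_id, "queued_for_review",
                                f"Topic '{edit.topic}' requires human review.", "automated")
        if edit.source in self.flagged_sources:
            # A flagged source sends the edit to a person rather than deleting it silently.
            self.queue[edit.edit_id] = edit
            return self._record(edit.edit_id, "queued_for_review",
                                f"Source '{edit.source}' was flagged as unreliable.", "automated")
        return self._record(edit.edit_id, "published",
                            "Low-impact topic and unflagged source.", "automated")

    def review(self, edit_id: str, approve: bool, reviewer: str, note: str) -> str:
        """Record a human decision with the reviewer's stated reason.

        Raises KeyError if the edit is not awaiting review.
        """
        self.queue.pop(edit_id)
        decision = "approved" if approve else "rejected"
        return self._record(edit_id, decision, note, reviewer)


gate = ReviewGate(flagged_sources={"Source X"})
gate.submit(Edit("e1", "astronomy", "The Moon orbits the Earth.", "NASA"))
gate.submit(Edit("e2", "human rights", "Treaty text excerpt.", "Government treaty archive"))
gate.review("e2", approve=True, reviewer="editor_a", note="Primary treaty source verified.")
for entry in gate.log:
    print(entry.edit_id, entry.decision, entry.actor, "-", entry.reason)
```

Sending flagged sources to a person, rather than removing the edit, is a deliberate choice in this sketch: it avoids the kind of silent, automatic removal described in the Indigenous land rights example. A real deployment would also need persistent storage, reviewer permissions, and a public view of the log.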

Platforms like the Internet Archive’s Wayback Machine and the Human Library Project have already shown that community-led, transparent knowledge systems work. They don’t rely on AI to decide truth; they use AI to help humans find it faster.

What You Can Do

You don’t need to be a tech expert to push for ethical AI in knowledge. Here’s how you can help:

- Report biased answers. If an AI gives you a skewed answer on gender, race, or history, flag it. Many platforms include a button or form for reporting inaccurate answers. Use it.

- Support platforms that publish their data sources. Choose tools that show where their facts come from, not just what they say.

- Contribute to open knowledge. Edit Wikipedia. Submit local history to digital archives. Add citations from non-English sources. The more diverse the data, the less biased the AI.

- Ask tough questions. When a school or library uses an AI knowledge tool, ask: "Who trained it? What data was used? Who reviews the results?" If they can’t answer, push for better.

Knowledge isn’t a product. It’s a public good. And like clean water or public schools, it shouldn’t be left to market forces or silent algorithms.

The Future Isn’t Set

There’s no law that says AI must erase marginalized voices. There’s no rule that says truth must be simplified to fit a machine’s understanding. The future of knowledge isn’t written in code. It’s written by people.

Right now, we’re at a crossroads. One path leads to AI that mirrors our worst biases, locked in by corporate interests and lazy automation. The other leads to AI that helps us see further: to uncover forgotten stories, connect global perspectives, and make knowledge truly inclusive.

Which path we take depends on who speaks up.

Can AI ever be truly unbiased in knowledge platforms?

AI can’t be unbiased by design, because it learns from human data, and humans are biased. But it can be made less biased through intentional fixes: diverse training data, human review for sensitive topics, and public audits of its decisions. The goal isn’t perfection. It’s accountability.

Why does editorial control matter if AI is faster?

Speed without accuracy is dangerous. AI can generate a Wikipedia-style entry in seconds, but it can’t reliably tell the difference between a peer-reviewed journal and a blog post written by someone with a political agenda. Human editors catch context, nuance, and hidden harm. Faster isn’t better if it’s wrong.

Are there any knowledge platforms doing this right?

Yes. The Internet Archive’s Wayback Machine lets users see how pages changed over time and includes community notes. The Human Library Project uses AI to surface underrepresented voices but always pairs it with human curation. Wikipedia’s Flagged Revisions system requires trusted editors to approve sensitive edits before they go live. These aren’t perfect, but they’re trying.

What happens if we don’t fix ethical AI in knowledge?

We’ll end up with a digital world where history is rewritten by algorithms, facts are shaped by corporate interests, and marginalized communities are erased from the record. Students will learn false narratives. Researchers will base work on flawed data. And we’ll all start trusting machines more than our own judgment. That’s not progress. It’s passive surrender.

How can I tell if a knowledge platform is using ethical AI?

Look for three things: 1) Can you see the sources the AI used? 2) Is there a way to report biased or missing information? 3) Does the platform publish reports on its AI’s performance, including bias tests? If the answer to any of these is no, treat it with caution.

Knowledge platforms shape how we understand the world. If we don’t demand ethics, we’ll get efficiency, and with it a distorted version of truth.