AI fact-checking: How Wikipedia and AI work together — and where they clash

When you ask an AI assistant a question, the answer doesn’t come from thin air. It is often shaped by AI fact-checking, the use of automated systems to verify claims against structured data and trusted sources. Also known as automated verification, it’s becoming a backbone of how search engines and apps decide what’s true. But here’s the catch: AI doesn’t understand context the way a human editor does. That’s where Wikipedia, the free, collaboratively edited encyclopedia that relies on human verification and cited sources, steps in. AI tools scan Wikipedia for reliable summaries, but they don’t always grasp why certain sources matter more than others, or how bias hides in data.

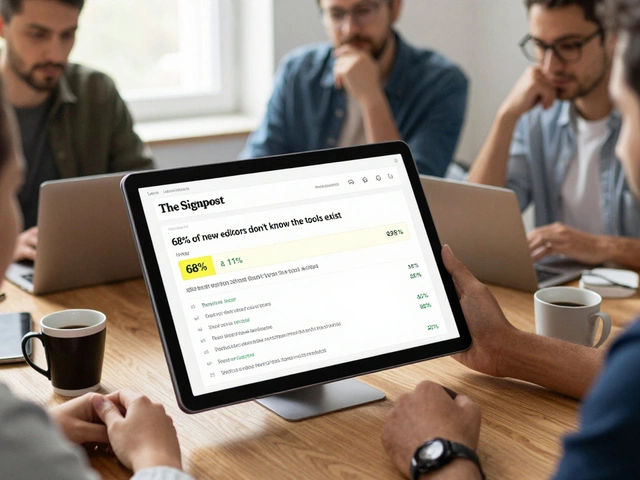

AI bias, the tendency of automated systems to repeat or amplify errors from their training data, is a real problem. If an AI learns mostly from corporate databases or skewed web content, it can miss marginalized voices, misrepresent historical events, or overstate fringe opinions. Wikipedia counters this with policies like due weight and reliable sources, rules that require articles to reflect the balance of views in reliable published sources, not popularity. The Wikimedia Foundation, the nonprofit that supports Wikipedia and its sister projects through open knowledge and transparency, is pushing back against AI companies that scrape Wikipedia without credit or accountability. It isn’t just asking for respect; it’s demanding that AI systems show their work, cite their sources, and let humans audit the results.

It’s not all conflict. Volunteers use AI to flag vandalism, suggest missing citations, and even help spot gaps in coverage, such as articles about Indigenous communities or local history that never made it into mainstream databases. But no algorithm can replace the judgment of someone who has read 20 sources, checked the dates, and understands the nuance behind a claim. That’s why Wikipedia still holds up well in public trust surveys, even when AI answers faster. The real power isn’t in the machine; it’s in the community that checks it.

What you’ll find here isn’t theory. It’s real stories: how Wikipedia editors are using AI tools to clean up articles, how AI is being misused to erase reliable content, and why the next big battle over truth won’t be fought in courtrooms but in edit histories and licensing terms. You’ll see how journalists use Wikipedia as a fact-checking gateway, how takedown requests quietly delete knowledge, and why the people building AI need to learn from the people who’ve been verifying facts for more than 20 years.

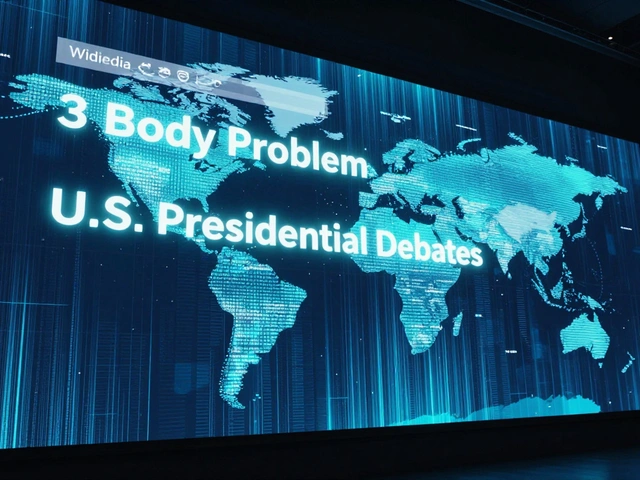

Auditing AI With Wikipedia: Grounded Evaluation Protocols

Wikipedia provides a real-time, living benchmark to audit AI accuracy. Learn how grounded evaluation protocols expose hallucinations, outdated facts, and lack of temporal awareness in AI systems.
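To make the idea concrete, here is a minimal sketch of one grounded check. It assumes you have already extracted a fact’s revision history from a Wikipedia article; the `Revision` type, the `grade_answer` function, and the sample names and dates are hypothetical, made up for illustration. The check grades an AI answer against the value in force on the date the question was asked, which separates a current answer from an outdated one and from one with no support in the reference at all:

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Revision:
    """One value a reference article held for a fact, starting on `as_of` (hypothetical structure)."""
    as_of: date
    value: str


def normalize(text: str) -> str:
    # Case-fold and collapse whitespace so trivial formatting differences don't count as errors.
    return " ".join(text.casefold().split())


def grade_answer(answer: str, history: list[Revision], asked_on: date) -> str:
    """Grade an AI answer against a fact's revision history.

    Returns "correct" if the answer matches the value in force on `asked_on`,
    "outdated" if it matches an earlier value, and "unsupported" otherwise.
    """
    # Keep only revisions that existed when the question was asked, oldest first.
    known = sorted((r for r in history if r.as_of <= asked_on), key=lambda r: r.as_of)
    if not known:
        return "unsupported"
    current, earlier = known[-1], known[:-1]
    ans = normalize(answer)
    if ans == normalize(current.value):
        return "correct"
    if any(ans == normalize(r.value) for r in earlier):
        return "outdated"
    return "unsupported"


# Hypothetical history for a made-up fact (e.g. the mayor of "Example Town").
history = [
    Revision(date(2019, 1, 1), "A. Smith"),
    Revision(date(2023, 6, 1), "B. Jones"),
]
print(grade_answer("b. jones", history, date(2024, 1, 1)))  # correct
print(grade_answer("A. Smith", history, date(2024, 1, 1)))  # outdated
print(grade_answer("C. Lee", history, date(2024, 1, 1)))    # unsupported
print(grade_answer("A. Smith", history, date(2020, 6, 1)))  # correct
```

The last call shows why the question date matters: the same answer is correct or outdated depending on when it was asked. Exact string matching is deliberately strict here; a real protocol would need to handle paraphrases, units, and aliases, and would pull the history from actual article revisions rather than a hand-written list.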

Source Lists in AI Encyclopedias: How Citations Appear vs What’s Actually Verified

AI encyclopedias show citations that look credible, but many don't actually support the claims. Learn how source lists are generated, why they're often misleading, and how to verify what's real.
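One crude way to triage a source list is to check whether each cited passage even mentions the claim’s key terms. The sketch below assumes the source text has already been fetched; the stopword list, the 0.6 threshold, the function names, and the sample sentences are all hypothetical choices for illustration. Word overlap can only catch citations that clearly miss the point. It cannot detect negation, misquotation, or a source that uses the right words but says something else, so flagged and unflagged citations alike still need a human read.

```python
import re

# A tiny illustrative stopword list; a real checker would need a fuller one.
STOPWORDS = {"the", "a", "an", "of", "in", "on", "and", "or", "to", "is", "was", "by", "for", "with"}


def content_words(text: str) -> set[str]:
    # Lowercase, split on runs of non-alphanumeric characters, and drop stopwords.
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def support_score(claim: str, source_passage: str) -> float:
    """Fraction of the claim's content words that also appear in the cited passage."""
    claim_words = content_words(claim)
    if not claim_words:
        return 0.0
    return len(claim_words & content_words(source_passage)) / len(claim_words)


def flag_citation(claim: str, source_passage: str, threshold: float = 0.6) -> bool:
    """Return True when the passage looks too weak to support the claim and needs a human check."""
    return support_score(claim, source_passage) < threshold


# Hypothetical claim and passages.
claim = "The bridge opened in 1932"
matching = "Construction finished and the bridge opened to traffic in 1932."
unrelated = "The city council approved a new budget."
print(support_score(claim, matching))   # 1.0
print(flag_citation(claim, matching))   # False
print(flag_citation(claim, unrelated))  # True
```

A passage reading "the bridge did not open in 1932" would also score 1.0, which is exactly why a score like this can only decide which citations a person should read first, not which ones are verified.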