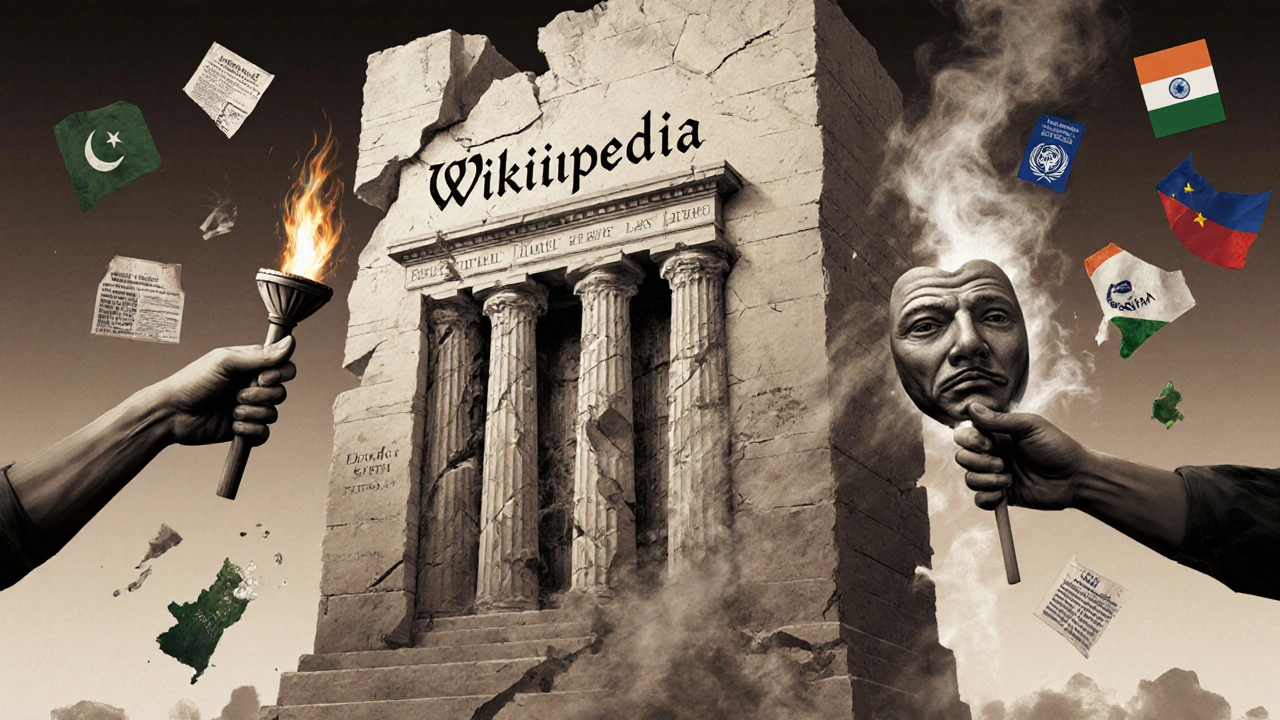

Wikipedia isn’t just a collection of neutral facts. It’s a battlefield where nations, ideologies, and powerful interests fight for control over how history is written, right now and in real time. You might think of it as a quiet library, but behind the scenes, thousands of editors are locked in silent wars over wording, images, and even which events get mentioned at all. These aren’t random disputes. They’re carefully coordinated campaigns by state-backed actors, political groups, and corporate entities trying to shape global perception through the world’s most visited reference site.

What Makes a Wikipedia Edit War Geopolitical?

A geopolitical edit war happens when the content of a Wikipedia article is altered not to fix errors, but to advance a national agenda, suppress dissent, or rewrite history. These aren’t casual mistakes. They’re systematic efforts to control the narrative. The edits often follow patterns: deleting references to human rights abuses, adding nationalist claims as fact, or burying inconvenient truths under layers of vague language.

Unlike vandalism, which is obvious and quick to reverse, geopolitical edits are subtle. They might change "invaded" to "entered," swap "occupied" for "administered," or remove entire sections citing international reports. The goal isn’t to confuse readers; it’s to make the altered version feel normal. Over time, repeated edits by coordinated accounts make the biased version stick.
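To make the word-swap pattern concrete, here is a minimal sketch of how two revisions of a sentence could be compared for softened terms. It is only an illustration: the `WATCHLIST` table and the `find_softened_terms` helper are hypothetical names invented for this example, the term pairs come from the examples above, and nothing here reflects how Wikipedia itself reviews edits.

```python
import difflib

# Hypothetical watchlist (an assumption for illustration):
# strong term -> softer replacements that might signal a reframing.
WATCHLIST = {
    "invaded": {"entered"},
    "occupied": {"administered"},
}

def find_softened_terms(old_text, new_text, watchlist=WATCHLIST):
    """Return (old_word, new_word) pairs where a watched term was swapped for a softer one."""
    old_words = old_text.lower().split()
    new_words = new_text.lower().split()
    matcher = difflib.SequenceMatcher(a=old_words, b=new_words, autojunk=False)
    hits = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "replace":
            continue  # only word-for-word substitutions are of interest here
        removed = [w.strip('.,;:"') for w in old_words[i1:i2]]
        added = {w.strip('.,;:"') for w in new_words[j1:j2]}
        for word in removed:
            for softer in watchlist.get(word, ()):
                if softer in added:
                    hits.append((word, softer))
    return hits

old = "Troops invaded the region and occupied the city."
new = "Troops entered the region and administered the city."
print(find_softened_terms(old, new))
```

A match is a prompt for a human to look closer, not proof of manipulation. Plenty of legitimate edits change a verb.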

Wikipedia’s open-editing model, meant to ensure transparency, becomes a vulnerability when exploited. Countries with strict information controls often use proxy accounts, paid editors, and automated bots to push their version of events. And because Wikipedia relies on consensus, the side with more persistent, organized editors usually wins, even if they’re lying.

The Ukraine-Russia Conflict: Erasing a Nation’s Story

Since 2014, the Wikipedia pages about Ukraine have been among the most heavily contested in the world. Russian state-linked editors have systematically tried to reframe the annexation of Crimea as a "legitimate referendum," downplay Russian troop involvement in eastern Ukraine, and label Ukrainian government forces as "neo-Nazis."

In 2022, after Russia’s full-scale invasion, editors from Russian IP addresses flooded articles with phrases like "special military operation" and removed all references to war crimes documented by the UN and Human Rights Watch. One Russian-language article on the Bucha massacre was edited over 800 times in two weeks, with each edit removing photos, victim names, or forensic evidence.

Wikipedia’s English-language community fought back by locking the page, requiring administrator approval for edits, and citing verified sources like the International Criminal Court. But the damage was done. Russian state media began citing Wikipedia as proof that "the West is rewriting history." By April 2023, Russian search engines showed the manipulated version as the top result, even when users searched in English.

Wikipedia’s response? They banned over 1,200 Russian-linked accounts in 2023 alone. But the conflict continues. New accounts appear daily, often using university IPs or VPNs to mimic legitimate users.

China and the Taiwan Question: A Battle Over Identity

On Wikipedia, Taiwan is not a country. At least, not according to the Chinese government’s version of the article.

Chinese state actors and affiliated editors have spent years ensuring the "Taiwan" article never implies sovereignty. They remove references to Taiwan’s democratically elected government, delete mentions of its participation in international organizations under its own name, and replace "Taiwanese people" with "compatriots in Taiwan."

In 2021, an edit war erupted over the phrase "Republic of China (Taiwan)." Chinese editors insisted on "Taiwan, Province of China," while international editors pushed for "Taiwan, a self-governing democracy." After months of back-and-forth, Wikipedia’s Arbitration Committee ruled that the phrase "Taiwan" alone was acceptable as a geographic term, but only if paired with a clear note that the People’s Republic of China claims it.

The compromise satisfied no one. Chinese users still see a version that treats Taiwan as a province. International users see a footnote that feels like a concession. And in mainland China, where Wikipedia is blocked, state-run encyclopedias and social media platforms present Taiwan as an inseparable part of China, with no mention of elections, a military, or a president.

Wikipedia’s policy of neutrality here is a mirage. The platform can’t enforce truth when one side controls the narrative at the national level.

India and Pakistan: Rewriting Partition

The article on the 1947 Partition of India is another flashpoint. Indian editors often emphasize the violence committed by Muslim mobs and downplay the role of British colonial policies. Pakistani editors, in turn, portray the partition as a necessary act of liberation and omit massacres committed by Pakistani militias.

In 2020, a single edit to the article removed all references to the estimated 200,000 to 2 million deaths during the partition, replacing them with a vague line: "significant loss of life occurred on both sides." The edit was made from an IP address linked to a government-funded research institute in Islamabad.

Wikipedia’s English version now carries multiple disclaimers, but the damage is done. In India, school textbooks still cite Wikipedia as a source. In Pakistan, state-sponsored YouTube channels use Wikipedia screenshots to prove their historical claims. Neither side has access to the full, unfiltered truth.

Wikipedia’s solution? Locking the article and requiring editors to cite academic sources only. But enforcement is inconsistent. Volunteer moderators are overwhelmed. And the most persistent editors, often backed by institutional resources, keep coming back.

The United States and the Iraq War: When Power Edits Itself

Geopolitical edit wars aren’t limited to authoritarian regimes. Even in democracies, powerful interests manipulate Wikipedia.

After the 2003 invasion of Iraq, U.S. government contractors and military PR firms edited the Wikipedia article to remove mentions of the lack of weapons of mass destruction and to highlight the "liberation" narrative. One editor, later identified as working for a defense contractor, made over 300 edits in three months, adding phrases like "democratic transition" and "freedom mission."

Wikipedia’s community flagged the edits as biased, but it took over a year for the article to be fully cleaned. By then, the false narrative had already spread to news outlets, think tanks, and even congressional briefings that cited Wikipedia as a source.

That incident led to Wikipedia’s first formal policy on paid editing. But enforcement remains weak. Today, lobbyists, political campaigns, and corporate PR teams still pay editors to shape Wikipedia content-often without disclosure.

How Wikipedia Tries to Fight Back

Wikipedia doesn’t sit idle. It has tools: edit restrictions, semi-protection, administrator oversight, and a volunteer army of fact-checkers. But the system is stretched thin.

High-profile articles like those on Ukraine, China, or Israel-Palestine are now flagged as "high-risk." Only trusted editors can modify them. Some pages require multiple approvals. Others are locked entirely.

Wikipedia also uses AI tools to detect coordinated editing patterns, such as dozens of accounts making the same edit within minutes. In 2024, they rolled out a new system called "EditWar Shield" that flags edits from known state-linked IP ranges.
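The basic idea behind spotting "the same edit from many accounts within minutes" can be sketched in a few lines. This is a toy illustration, not a description of Wikipedia's actual tooling: the `find_coordinated_edits` function, the ten-minute window, the three-account threshold, and the sample account names are all assumptions made up for the example.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def find_coordinated_edits(edits, window=timedelta(minutes=10), min_accounts=3):
    """edits: iterable of (account, timestamp, added_text) tuples.

    Returns (added_text, sorted_accounts) for every addition that at least
    `min_accounts` distinct accounts made within `window` of each other.
    """
    by_text = defaultdict(list)
    for account, ts, text in edits:
        by_text[text.strip().lower()].append((ts, account))

    flagged = []
    for text, items in by_text.items():
        items.sort()  # oldest first
        start = 0
        best = set()
        for end in range(len(items)):
            # Shrink the window from the left until it spans at most `window`.
            while items[end][0] - items[start][0] > window:
                start += 1
            accounts = {acct for _, acct in items[start:end + 1]}
            if len(accounts) > len(best):
                best = accounts
        if len(best) >= min_accounts:
            flagged.append((text, sorted(best)))
    return flagged

# Hypothetical sample data.
sample = [
    ("acct_a", datetime(2022, 3, 1, 12, 0), "special military operation"),
    ("acct_b", datetime(2022, 3, 1, 12, 2), "Special military operation"),
    ("acct_c", datetime(2022, 3, 1, 12, 4), "special military operation"),
    ("acct_d", datetime(2022, 3, 1, 13, 0), "special military operation"),
    ("acct_e", datetime(2022, 3, 1, 12, 1), "fixed a typo"),
]
print(find_coordinated_edits(sample))
```

Exact-text matching is the weakest part of this sketch. Coordinated accounts can vary their wording, which is one reason a flag like this still needs a human to review it.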

But no tool can replace human judgment. And the people doing the editing? They’re not always bad actors. Many are well-meaning nationalists who genuinely believe their version of history is correct. That’s what makes these wars so hard to win: the enemy isn’t just outside the gate; it’s inside the building.

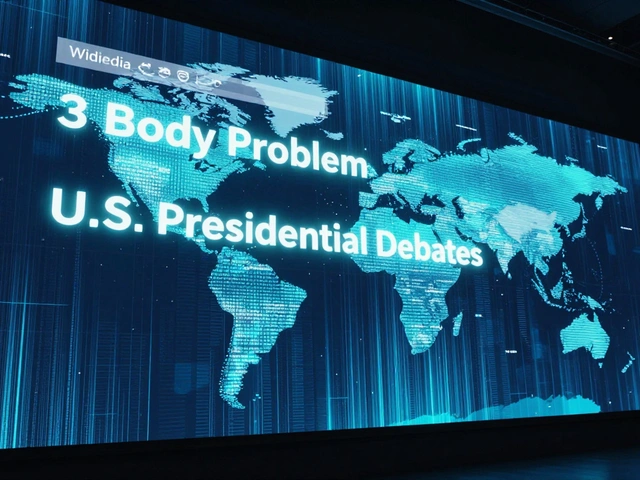

Why This Matters Beyond Wikipedia

When people search for "what happened in Crimea?" or "is Taiwan a country?", they don’t go to academic journals. They go to Wikipedia. It’s the first result. Often, the only result.

That makes Wikipedia the most influential history textbook in human history. And right now, it’s being rewritten by governments that don’t believe in truth, only control.

Wikipedia’s greatest strength-its openness-is also its greatest weakness. It allows anyone to edit. But it doesn’t verify who they are, or why they’re editing.

For now, the best defense is awareness. If you use Wikipedia for research, always check the edit history. Look for sudden changes, repeated edits from the same region, or sources that don’t match academic standards. And never trust a single version. Compare the English, Russian, Chinese, and Arabic versions side by side. The differences tell the real story.

Wikipedia won’t fix itself. It was never meant to be a government-proof archive. But it’s still the closest thing we have to a global public record. And if we stop caring about who’s editing it, we’ll lose even that.

Are Wikipedia edit wars illegal?

No, editing Wikipedia to push a political agenda isn’t illegal, even if it’s deceptive. Wikipedia’s rules prohibit paid advocacy without disclosure, but enforcement is inconsistent. Governments and organizations often use volunteers or anonymous accounts to avoid detection. Legal action is rare because Wikipedia operates globally, and laws vary by country. The platform relies on community moderation, not law enforcement.

Can I trust Wikipedia for academic research?

Wikipedia is not a primary source and shouldn’t be cited in academic papers. But it’s an excellent starting point. Check the references at the bottom of articles, because those are the real sources. If an article on a geopolitical topic has mostly news outlets or government websites as citations, be skeptical. Look for peer-reviewed journals, UN reports, or academic books in the reference list. The quality of citations tells you more than the article’s wording.

Why doesn’t Wikipedia just lock all controversial pages?

Wikipedia’s core principle is open editing. Locking pages goes against its mission. Editors believe that truth emerges through debate, not control. But in practice, high-risk pages like those on Ukraine, China, or Israel-Palestine are already semi-protected. Only experienced editors can change them. The challenge is balancing openness with accuracy. Too many locks, and the site becomes inaccessible. Too few, and it becomes propaganda.

How do I spot a biased edit on Wikipedia?

Check the edit history. Look for sudden changes in tone, removal of citations from reputable sources, or repeated edits from the same region or IP range. If a sentence about a conflict suddenly changes from "invaded" to "entered," or if photos of casualties disappear, that’s a red flag. Also, look at the talk page, where editors often debate changes. If one side is silent or uses aggressive language, it’s likely a coordinated effort.
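If you have the text of successive revisions in hand, one of these checks, the removal of citations, is easy to approximate. The sketch below counts `<ref>` tags, which is how inline citations are marked up in wikitext, and flags revisions where the count drops. The `flag_citation_removals` helper, the `min_drop` threshold, and the sample revisions are hypothetical and exist only for this illustration.

```python
import re

# Matches opening or self-closing <ref> tags, but not </ref> or <references />.
REF_TAG = re.compile(r"<ref[\s>/]", re.IGNORECASE)

def count_refs(wikitext):
    """Count inline citation tags in a chunk of wikitext."""
    return len(REF_TAG.findall(wikitext))

def flag_citation_removals(revisions, min_drop=2):
    """revisions: oldest-first list of dicts with 'id', 'user', and 'text'.

    Returns (revision_id, user, citations_removed) for each revision that
    removed at least `min_drop` citations compared with the one before it.
    """
    flagged = []
    for prev, curr in zip(revisions, revisions[1:]):
        drop = count_refs(prev["text"]) - count_refs(curr["text"])
        if drop >= min_drop:
            flagged.append((curr["id"], curr["user"], drop))
    return flagged

# Hypothetical revision history.
history = [
    {"id": 1, "user": "EditorOne",
     "text": 'A.<ref>UN report</ref> B.<ref name="hrw">HRW</ref> C.<ref name="hrw" />'},
    {"id": 2, "user": "ExampleUser",
     "text": "A.<ref>UN report</ref> B. C."},
    {"id": 3, "user": "EditorOne",
     "text": "A.<ref>UN report</ref> B. C. D."},
]
print(flag_citation_removals(history))
```

A drop in citations is not always suspicious, since editors also prune dead links and duplicate references. Treat a flagged revision as a reason to read the diff and the talk page, not as a verdict.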

What’s being done to stop state-sponsored editing?

Wikipedia’s volunteer community and the Wikimedia Foundation have increased monitoring since 2020. They use AI to detect coordinated editing, ban known state-linked IPs, and require disclosure for paid edits. In 2023, they banned over 1,500 accounts linked to Russia, China, and Iran. But the scale is overwhelming. New accounts are created daily. The real solution? More volunteers. If you care about truth, you can help by reviewing edits on high-risk pages or reporting suspicious activity.