Wikipedia isn’t just a website. It’s a living archive built by millions of anonymous editors, corporate lobbyists, politicians, and everyday users, all trying to shape what the world remembers. And over the past 20 years, that openness has made it a magnet for controversy.

The Essjay Scandal (2007)

For years, a Wikipedia editor known as "Essjay" claimed to be a tenured professor of religion with doctorates in theology and canon law. He cited that fake identity in arguments with other editors, mediated disputes, and in early 2007 was appointed to Wikipedia’s Arbitration Committee - the body that handles the most serious conflicts on the site.

Turns out, Essjay was a 24-year-old from Kentucky named Ryan Jordan who held no advanced degrees and had never taught. But the story was convincing enough that The New Yorker repeated his claimed credentials in a July 2006 article about Wikipedia, and had to append an editor’s note in February 2007 once the truth came out.

When the truth came out, Wikipedia’s co-founder Jimmy Wales asked Jordan to resign his positions of trust, and Jordan left the site. The scandal forced Wikipedia to rethink how it handles editor credentials. Wales proposed a way to verify claimed credentials, but the community never adopted it. Editors can still describe themselves however they like - those claims simply carry no special authority in a content dispute, where only cited sources count.

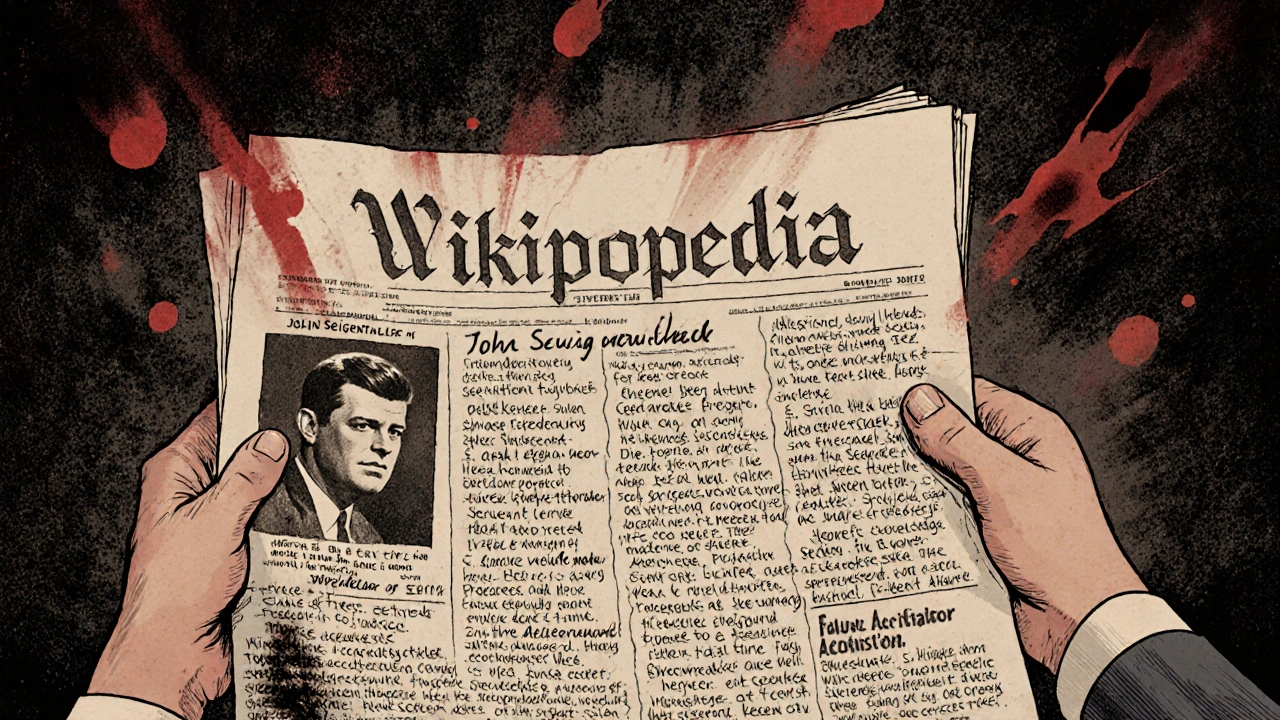

The Seigenthaler Biography Incident (2005)

One of the most damaging Wikipedia controversies involved a false biography of John Seigenthaler, a respected American journalist and former aide to Robert F. Kennedy.

In May 2005, an anonymous user added a fabricated passage claiming Seigenthaler had been suspected of involvement in the assassinations of JFK and RFK. The lie stayed live for 132 days. No one noticed. No one corrected it. Seigenthaler only found out when a friend showed him the page.

He wrote a scathing op-ed in USA Today, calling Wikipedia a "flawed and irresponsible research tool." The article went viral. It triggered a national conversation about the reliability of user-generated content.

Afterward, Wikipedia changed its rules. On the English Wikipedia, unregistered users could no longer create new articles. Only registered users could. The community also adopted a stricter policy on biographies of living people, requiring contentious claims about them to be sourced or removed immediately. It was one of the first times Wikipedia publicly acknowledged a serious blind spot in its system.

The Wikipedia Sockpuppet Investigations (2007-2013)

Some of the biggest fights on Wikipedia aren’t about facts - they’re about control. And that’s where sockpuppets come in.

A sockpuppet is a fake account someone creates to pose as multiple people supporting the same agenda. In 2007, the WikiScanner tool traced edits on Scientology-related articles back to computers owned by the Church of Scientology, and a later arbitration case found multiple accounts operating from Church-controlled IP addresses. These accounts edited articles to remove criticism of the church, delete references to its controversial practices, and attack critics.

It wasn’t just Scientology. In 2013, a PR firm called Wiki-PR was found running hundreds of sockpuppet accounts to create and rewrite Wikipedia pages for paying clients. Its editors removed negative info, added fluff, and buried bad press.

The community banned Wiki-PR and blocked more than 250 of its accounts. In 2009, the Arbitration Committee banned editing from IP addresses owned by the Church of Scientology. But the problem didn’t go away. Today, companies still hire "editors" to clean up their pages. And Wikipedia’s volunteer watchdogs are constantly chasing ghosts - fake accounts that pop up overnight, make a few edits, and vanish.

The "Edit Wars" Over Climate Change (2010s-Present)

Climate change is one of the most edited topics on Wikipedia. And it’s also one of the most politicized.

For over a decade, a small but persistent group of editors - many with ties to fossil fuel-funded think tanks - have tried to dilute Wikipedia’s climate science content. They’d insert phrases like "some scientists believe" or "the debate continues" into articles that clearly state the overwhelming scientific consensus: humans are causing global warming.

Wikipedia’s rules say content must reflect reliable sources. The IPCC, NASA, and NOAA all agree on human-caused climate change. But editors loyal to denialist narratives kept reverting edits, filing complaints, and dragging out discussions for months.

Wikipedia responded by semi-protecting key climate articles, so that unregistered and brand-new accounts couldn’t change them. In 2010, the Arbitration Committee also placed the whole topic under special sanctions and banned several of the most combative editors from it. Still, the battles never really end. Every time a new report comes out, the same arguments resurface.

The "Gorilla" and "Black Lives Matter" Bias Accusations (2016-2020)

Wikipedia’s content doesn’t just reflect the world - it reflects who’s editing it. And for years, the editing community was overwhelmingly white, male, and Western.

In 2016, a user noticed that Wikipedia’s article on gorillas listed "human" as the animal’s closest relative - but didn’t mention that humans and gorillas share 98% of their DNA. The edit was made by someone who thought "human" was a category, not a species. It was a factual error, but it became symbolic.

Then came the Black Lives Matter movement. Activists noticed that Wikipedia’s coverage of racial justice was thin. Articles on police brutality, systemic racism, and civil rights leaders were often incomplete, buried under neutral tone, or dominated by white editors who downplayed structural inequality.

Groups like Art + Feminism and WikiProject Black Lives Matter started organizing edit-a-thons. They trained new editors - mostly women and people of color - to fix gaps. They added citations from Black scholars, corrected biased language, and expanded coverage of underrepresented figures like Barbara Jordan, Audre Lorde, and Marsha P. Johnson.

It wasn’t perfect. Some veteran editors resisted, calling it "activism." But the changes stuck. Today, a range of WikiProjects and outside groups work on closing gender, racial, and geographic gaps in coverage. The site still has bias - but now it’s being actively challenged.

The Russian Government and Wikipedia (2015-2025)

In August 2015, Russia’s media regulator Roskomnadzor ordered the Russian-language Wikipedia blocked after the site refused to remove an article about a form of cannabis.

Wikipedia didn’t budge. It stood by its policy: no censorship. Because the site is served over encrypted HTTPS, ISPs couldn’t block one page without blocking the entire site. The move backfired. The block drew a wave of attention to the article and was lifted in less than a day.

Then came the war in Ukraine. Wikipedia became a real-time archive of the invasion - attacks, casualties, and destroyed cities - edited by volunteers inside Ukraine, Russia, and around the world. Roskomnadzor demanded that the Russian-language article on the invasion be taken down and threatened to block the site, and Russian courts repeatedly fined the Wikimedia Foundation for refusing to remove content.

Pro-Kremlin editors pushed to rewrite the Russian-language edition’s war coverage, and in 2024 a Kremlin-friendly clone called Ruwiki launched with the offending material stripped out. But volunteers from Ukraine and other countries fought back - reverting edits, flagging violations, and restoring sourced facts. The resulting articles on the war, shaped by thousands of edits, are among the most detailed public accounts of the conflict.

Today, Russian authorities still try to pressure Wikipedia. But the site remains one of the few places in Russia where citizens can find uncensored facts.

How Wikipedia Survives Controversy

Every major scandal made Wikipedia stronger - not weaker.

After Essjay, it made clear that claimed credentials carry no weight. After Seigenthaler, it protected biographies of living people. After sockpuppets, it built detection tools. After climate denial, it locked down high-risk articles. After bias accusations, it empowered new voices.

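Wikipedia’s content isn’t directed by a CEO. The nonprofit Wikimedia Foundation hosts the site, but volunteers write and police the articles. It doesn’t have ads. It doesn’t answer to shareholders. It answers to its own rules - and the people who follow them.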

That’s why, despite every scandal, every lie, every manipulation - Wikipedia still stands as the most visited reference site in human history. Not because it’s perfect. But because it’s willing to fix itself.

Why does Wikipedia allow anonymous edits?

Wikipedia allows anonymous edits to keep editing open to everyone - including people who can’t or won’t create accounts. But after the Seigenthaler incident, unregistered users on the English Wikipedia lost the ability to create new articles; only registered users can start pages. Anonymous edits are still allowed on most existing pages, but they’re closely watched and quickly reverted if they look suspicious.

Can companies pay to fix their Wikipedia pages?

Only if they’re open about it. Companies can hire editors to fix factual errors or add citations, but those editors must disclose who is paying them, and they’re expected to propose changes for volunteers to review rather than rewrite the page themselves. What they can’t do is quietly remove criticism, add promotional fluff, or manipulate tone. The 2013 Wiki-PR scandal exposed this practice, and the Wikimedia Foundation’s terms of use have banned undisclosed paid editing since 2014.

Is Wikipedia biased toward Western perspectives?

Yes, historically. Over 70% of Wikipedia editors are from North America and Europe. This led to gaps in coverage of non-Western history, culture, and science. But since 2015, initiatives like WikiProject Global South and Art + Feminism have trained thousands of new editors from Africa, Asia, and Latin America. Coverage is improving, but imbalance still exists - especially in languages like Swahili, Hindi, and Arabic.

How does Wikipedia handle false information?

Wikipedia relies on a network of volunteer editors who monitor changes, revert vandalism, and demand reliable sources. Frequently targeted articles are semi-protected, meaning unregistered and brand-new accounts can’t edit them. Bots automatically flag or revert suspicious edits. When false info appears on a well-watched page, the community usually corrects it within hours - sometimes minutes - though, as the Seigenthaler case showed, errors on obscure pages can linger far longer. The system isn’t perfect, but it’s fast, transparent, and constantly improving.

What happens when governments try to censor Wikipedia?

The Wikimedia Foundation, which hosts Wikipedia, refuses to remove content under government pressure. When countries like China, Russia, or Turkey have blocked the site, it has fought the blocks rather than edit the articles - a ruling from Turkey’s Constitutional Court ended a nearly three-year block there in January 2020. The site also serves all traffic over encrypted connections, which makes it hard for censors to block individual articles, and independent projects such as Kiwix distribute offline copies of its content. The goal is always to keep information accessible - even when it’s dangerous.