Collaborative Knowledge: How Wikipedia Builds Trust Through Shared Editing

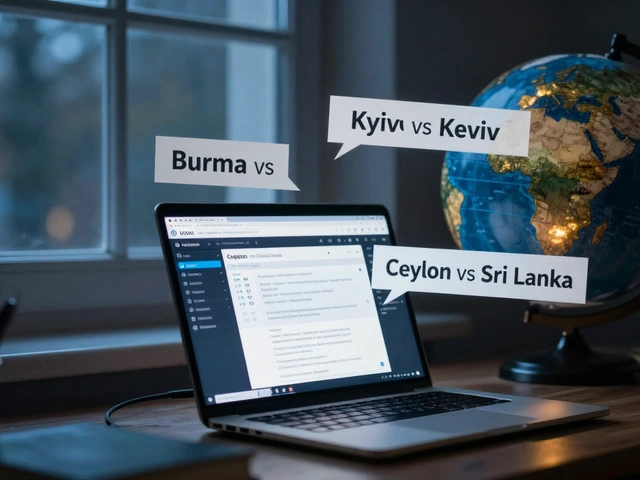

When you read a Wikipedia article, you're not just seeing facts. You're seeing the result of collaborative knowledge, a system where thousands of volunteers research, debate, and refine information together without central control. Often called community-driven content, this model is how a free encyclopedia became the go-to source for billions of readers, even as AI tools try to copy it. This isn't magic. It's rules, patience, and people showing up day after day to fix typos, cite sources, and push back on misinformation.

Behind every accurate Wikipedia page are two supporting pieces: the Wikimedia Foundation, the nonprofit that maintains the technical and legal infrastructure but doesn't write content, and open knowledge, the philosophy that information should be free to use, edit, and share as long as credit is given. These aren't just buzzwords; they're the foundation. The collaborative knowledge model works because it demands evidence. Want to add a claim? You need a reliable source. Want to argue over a point? You need to follow policy, not popularity. That's why Wikipedia still beats AI encyclopedias in trust surveys: humans check the links, rather than algorithms guessing from scraped data.

It's messy sometimes. Editors clash over due weight, copyright takedowns erase good content, and off-wiki harassment scares people away. But the system adapts. Task forces tackle bias. The Signpost reports on what matters. WikiProjects like Medicine and Indigenous Coverage bring experts together. Volunteers work through 12,000 articles in a single cleanup drive. Wikidata syncs facts across 300 languages. This isn't a top-down project. It's a living network of people who care enough to fix things, even when no one's watching.

You don't need to be an expert to help. You just need to care about getting it right. Whether you're checking a citation, updating a date, or defending a minority view with sources, you're part of this. Below, you'll find real stories from inside the system: how journalists use Wikipedia without blindly trusting it, how AI editing risks erasing voices, how licensing lets anyone reuse the content, and how volunteers keep the lights on without pay or ads. This is collaborative knowledge in action. Not theory. Not hype. Real work, by real people.

Grokipedia and AI-Generated Encyclopedia Content: The Challenge to Collaborative Knowledge

Grokipedia is an AI-generated encyclopedia that produces content at lightning speed, but without human oversight. While it's fast and polished, it lacks accountability, transparency, and any mechanism for correcting bias. Understanding how it differs from collaborative platforms like Wikipedia is critical for using AI-generated knowledge responsibly.

Peer-Reviewed Journals Specializing in Wikipedia and Open Knowledge

Peer-reviewed journals are now publishing rigorous research on Wikipedia and open knowledge systems, treating them as legitimate subjects of academic study. These journals promote transparency, open access, and community-driven scholarship.

Future Directions in Wikipedia Research: Open Questions and Opportunities

Wikipedia research is shifting from traffic metrics to equity. Explore the open questions about bias, AI, offline access, and how to make global knowledge truly inclusive.