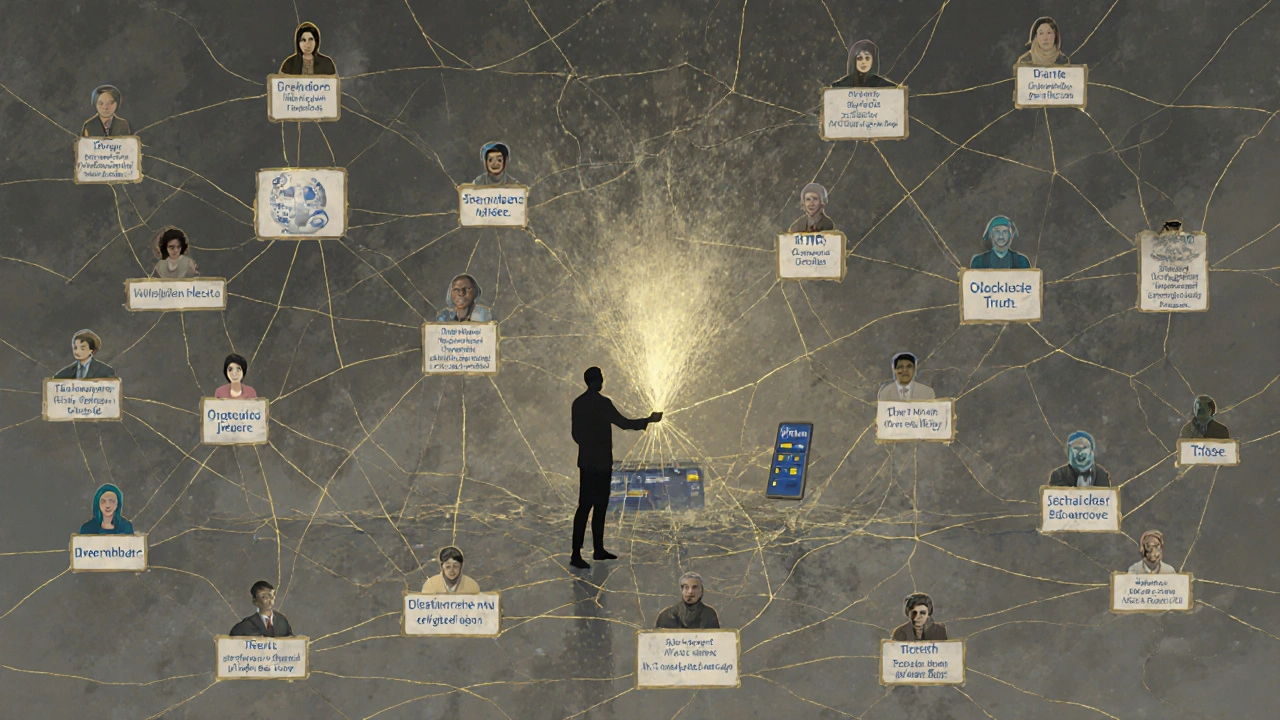

Wikipedia claims to be the free encyclopedia anyone can edit. But behind the scenes, a quiet war is being fought, not over facts but over identity. Someone creates a fake account, pretends to be a neutral editor, and slowly pushes a biased version of history, science, or politics. These aren’t trolls. They’re sockpuppets: hidden identities used to manipulate consensus, silence critics, and game the system. And when they’re found, the fallout isn’t just a ban; it’s a crisis of trust.

What Exactly Is a Sockpuppet?

A sockpuppet on Wikipedia is an extra user account created to deceive others into thinking it belongs to a separate, independent person. The real editor behind it might be banned, have a conflict of interest, or just want to amplify their voice without revealing that the extra voices are all theirs. They’ll comment on their own edits, vote in disputes, or attack opponents, all while pretending to be multiple people.

This isn’t just cheating. It breaks Wikipedia’s core rule: edit with integrity. The platform relies on transparency. Every account is presumed to be a separate person, so when one editor secretly speaks through several accounts, every discussion and vote they touch loses credibility. Sockpuppetry doesn’t just distort articles; it corrupts the entire process of collaborative truth-building.

How Do Investigators Find Them?

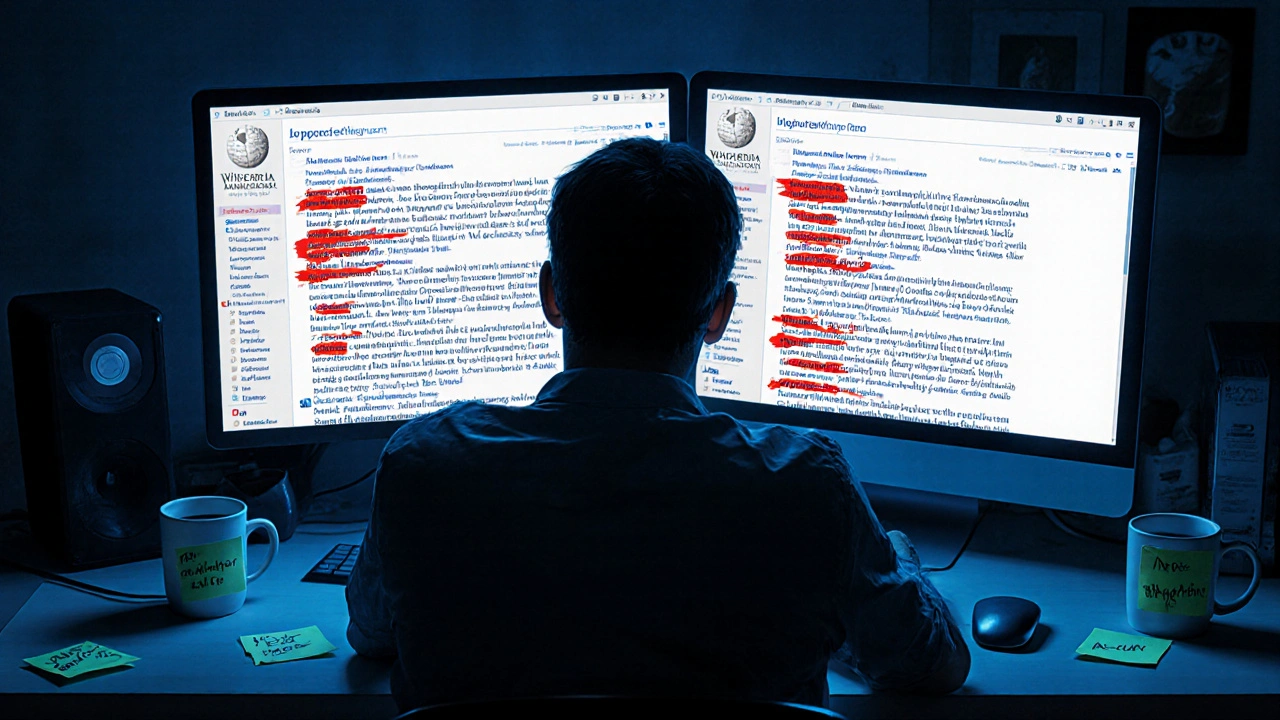

Wikipedia doesn’t have a police force. But it does have a small group of volunteer administrators and checkusers: trusted editors with special tools to trace digital footprints. These aren’t hackers. Checkusers can see private technical data such as IP addresses and browser details, and everyone involved weighs the public behavioral clues: editing patterns, timing, and even typos.

For example, if two accounts edit the same article within 30 seconds of each other, from the same location, using identical phrasing, that’s a red flag. If one account is accused of bias and a second account immediately turns up to defend it, that’s a classic loop. Investigators also look at edit histories: sockpuppets often make minor, non-controversial edits early on to build trust before slipping in biased changes.
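The timing red flag can be made concrete. The Python sketch below is a hypothetical illustration, not a tool Wikipedia investigators are known to use: it assumes each account’s public contribution history has already been collected as (article title, timestamp) pairs, and it lists edits by two accounts to the same article that land within a chosen window (30 seconds by default, matching the example). The function name and record format are assumptions. A match is a reason to look closer, not proof.

```python
from datetime import datetime, timedelta

# Hypothetical record format: (article_title, edit_time). This is not a real
# Wikipedia API; the data is assumed to come from public contribution histories.
Edit = tuple[str, datetime]


def near_simultaneous_edits(
    edits_a: list[Edit], edits_b: list[Edit], window_seconds: int = 30
) -> list[tuple[Edit, Edit]]:
    """Pair up edits by two accounts to the same article within window_seconds."""
    window = timedelta(seconds=window_seconds)
    matches = []
    for article_a, time_a in edits_a:
        for article_b, time_b in edits_b:
            # Same page, and the two edits land within the window of each other.
            if article_a == article_b and abs(time_a - time_b) <= window:
                matches.append(((article_a, time_a), (article_b, time_b)))
    return matches


if __name__ == "__main__":
    t = datetime(2024, 5, 1, 23, 10, 0)
    account_a = [("Example article", t), ("Other article", t + timedelta(hours=2))]
    account_b = [("Example article", t + timedelta(seconds=20)), ("Other article", t)]
    print(len(near_simultaneous_edits(account_a, account_b)))  # 1
```

The nested loop is deliberately simple; for long histories, sorting each account’s edits by time first would avoid comparing every pair.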

In 2023, a checkuser team uncovered a network of 17 sockpuppets tied to a single individual who had been editing articles on U.S. political candidates for over five years. The accounts had different usernames, different biographies, and even fake email addresses. But their edit times followed the same rhythm: always late at night, always after the original editor’s main account had gone quiet for the day. That pattern was the giveaway.
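Schedule patterns like this can be compared numerically. One simple approach, assumed here for illustration and not the method used in the case above, is to turn each account’s edit times into a 24-hour activity profile and measure how similar two profiles are with cosine similarity: a value near 1 means the accounts keep the same hours, and 0 means their hours never overlap. The function names are hypothetical, and the sketch assumes all timestamps share one time zone.

```python
import math
from collections import Counter
from datetime import datetime


def hourly_profile(times: list[datetime]) -> list[float]:
    """Share of an account's edits that fall in each hour of the day (0-23)."""
    if not times:
        return [0.0] * 24
    counts = Counter(t.hour for t in times)
    return [counts[hour] / len(times) for hour in range(24)]


def cosine_similarity(p: list[float], q: list[float]) -> float:
    """1.0 = identical daily rhythm, 0.0 = no hours in common."""
    dot = sum(a * b for a, b in zip(p, q))
    norm = math.sqrt(sum(a * a for a in p)) * math.sqrt(sum(b * b for b in q))
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    # Illustrative data only: two late-night accounts and one daytime account.
    sock_1 = [datetime(2024, 3, d, h) for d, h in [(1, 23), (2, 0), (3, 1)]]
    sock_2 = [datetime(2024, 3, d, h) for d, h in [(4, 23), (5, 0), (6, 1)]]
    main = [datetime(2024, 3, d, h) for d, h in [(1, 9), (2, 14), (3, 18)]]
    p1, p2, p3 = hourly_profile(sock_1), hourly_profile(sock_2), hourly_profile(main)
    print(round(cosine_similarity(p1, p2), 3))  # 1.0
    print(cosine_similarity(p1, p3))  # 0.0
```

In the pattern described above, the sockpuppets would score close to 1.0 against each other while each scored near 0.0 against the main account. Either result alone is weak evidence; many unrelated people keep similar hours.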

Common Targets and Tactics

Not all articles are equally vulnerable. Sockpuppets tend to focus on topics where emotions run high and facts are contested: politics, religion, corporate controversies, and biographies of living people.

Here’s how it usually plays out:

- They create multiple accounts to simulate public support for a claim.

- They label legitimate editors as "biased" or their edits as "vandalism" to get them blocked.

- They revert edits made by others, then claim they’re "restoring consensus."

- They use fake sources or misquote studies to back up their version of events.

In one high-profile case, a corporate PR firm used sockpuppets to soften negative coverage of a major tech company’s labor practices. The fake accounts cited "independent researchers," but those researchers didn’t exist. The articles were rewritten to emphasize "employee satisfaction" while burying complaints about overtime and pay. It took six months and three separate investigations to unravel the network.

The Fallout: When Trust Breaks Down

When sockpuppetry is exposed, the damage isn’t limited to the article. It ripples outward.

Regular editors lose faith. Why spend hours verifying sources if someone else is just gaming the system? New contributors get discouraged. Why join a community that seems rigged? And when media outlets cite Wikipedia as a source, they’re unknowingly amplifying manipulated content.

In 2024, a study by the University of Wisconsin found that articles targeted by sockpuppet networks had 42% more edit wars and 31% higher rates of editor burnout. The study also showed that readers who noticed inconsistencies in Wikipedia articles were 68% less likely to trust the platform for any topic, not just the ones they’d seen tampered with.

Wikipedia’s response? It’s not perfect. Bans are common, but they’re reactive. The platform still lacks automated tools to catch coordinated manipulation in real time. And because editors are volunteers, investigations can take weeks, or even months, while the damage spreads.

What Happens to the Perpetrators?

If you’re caught, you don’t just get blocked. You get erased.

Your accounts are blocked indefinitely. Edits made through them are reviewed, and many are reverted. The accounts are tagged as sockpuppets and listed publicly, and the investigation stays on record for anyone to read. If you try to return under a new name, checkusers will likely catch you again. In extreme cases, your IP address is blocked, sometimes globally across Wikimedia projects, meaning you can’t edit Wikipedia from any device on that network.

Some people don’t realize how serious this is. They think it’s just "editing on the internet." But Wikipedia’s community treats sockpuppetry like fraud. It’s not a mistake. It’s a betrayal of the social contract that keeps the site alive.

There are exceptions. Sometimes, people create sockpuppets out of fear, like activists in repressive regimes using fake accounts to avoid persecution. Wikipedia has a process for those cases, but they’re rare. Most sockpuppeteers are trying to manipulate, not protect.

How You Can Help

You don’t need to be an admin to spot trouble. Here’s what to watch for:

- Multiple accounts praising each other’s edits.

- Someone who always defends one side of a controversial topic.

- Edits that appear right after a critic leaves the site.

- Accounts whose only activity is on a single article.
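The last warning sign is easy to quantify. A minimal sketch, assuming you already have an account’s edits as a list of article titles (the function name is hypothetical): it reports the most-edited article and the share of all edits it receives. A share near 1.0 marks a single-purpose account, which is also common among ordinary newcomers, so treat it as one signal among several.

```python
from collections import Counter


def top_article_share(article_titles: list[str]) -> tuple[str, float]:
    """Return the most-edited article and the fraction of all edits it received."""
    if not article_titles:
        raise ValueError("account has no edits")
    title, count = Counter(article_titles).most_common(1)[0]
    return title, count / len(article_titles)


if __name__ == "__main__":
    # Illustrative data only: nine edits to one page, one edit elsewhere.
    edits = ["Example Corp"] * 9 + ["Wikipedia:Sandbox"]
    print(top_article_share(edits))  # ('Example Corp', 0.9)
```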

If you suspect sockpuppetry, don’t accuse. Report it. Open a case at Wikipedia’s Sockpuppet investigations page, where any editor can file a report backed by diffs and request a CheckUser review, or flag it on the Administrators’ Noticeboard. Let trained volunteers handle it. Your role is to notice, not to judge.

Why This Matters Beyond Wikipedia

Wikipedia isn’t just a website. It’s a mirror of how societies try to build shared truth. Sockpuppetry isn’t just a Wikipedia problem. It’s a symptom of a larger crisis: the erosion of trust in information systems.

When fake accounts manipulate Wikipedia, they’re doing the same thing that disinformation campaigns do on social media: creating the illusion of consensus to drown out reality. The tools are different, but the goal is the same: make people doubt what’s true.

Wikipedia’s fight against sockpuppets is a quiet experiment in democratic accountability. It’s messy. It’s slow. But it’s working, because people still care enough to show up, dig through edit histories, and call out deception. That’s the real story behind the investigations.

How common are sockpuppet accounts on Wikipedia?

Sockpuppetry is rare compared to the total number of edits: less than 0.1% of all edits are tied to confirmed sockpuppet networks. But because these accounts target high-traffic, controversial topics, their impact is disproportionate. In 2024, over 1,200 sockpuppet investigations were opened, with about 300 confirmed cases. Most are small-scale, but a handful involve coordinated campaigns with dozens of accounts.

Can sockpuppets be caught automatically?

Wikipedia relies on tools like ClueBot NG, an automated anti-vandalism bot, and Huggle, a program that helps human patrollers review recent edits quickly, to flag suspicious edits. But these tools can’t detect coordinated sockpuppet networks on their own. They catch vandalism and spam, while sockpuppets are designed to look legitimate. Human investigators are still essential. Machine learning models are being tested, but they’re not reliable enough yet to replace human judgment.

Do companies use sockpuppets to control their Wikipedia pages?

Yes. Corporate PR teams, lobbyists, and political consultants have all been caught using sockpuppets to shape how their organizations are portrayed. In 2022, a major pharmaceutical company was exposed for using 12 fake accounts to downplay side effects in drug articles. The company claimed the edits came from "unauthorized employees," but the editing patterns and timing pointed to coordinated action. Wikipedia banned the accounts and flagged the company’s official edits for extra scrutiny.

What’s the difference between a sockpuppet and a paid editor?

A legitimate paid editor openly discloses their employer, client, and affiliation, as Wikimedia’s Terms of Use and Wikipedia’s conflict of interest guidelines require. They can still contribute, usually by proposing changes on talk pages, but they must be transparent. A sockpuppet hides their identity and motive. Paid editing isn’t forbidden on Wikipedia; it’s just heavily regulated. Sockpuppetry violates core policies and leads to bans. The difference is honesty.

Can I be banned for accidentally using a sockpuppet?

If you created a second account without realizing the rules (for example, using an old and a new account in the same discussion without disclosing the link), you can be warned and given a chance to explain. But if you used it to influence edits, deceive others, or evade a block or other restrictions, you’ll be banned. Intent matters. Accidental misuse is rare; most cases involve deliberate deception.

Why doesn’t Wikipedia just require real names?

Wikipedia protects anonymity because it’s essential for safety. Journalists, whistleblowers, and people in authoritarian countries rely on pseudonyms to contribute without fear. Requiring real names would shut out many valuable contributors. Instead, the system relies on transparency of behavior, not identity. You don’t need to reveal who you are, but you must edit fairly and openly.