Collaborative Reporting on Wikipedia: How Volunteers Build Trust Together

Collaborative reporting is a system in which thousands of unpaid volunteers work together to research, write, and verify news and reference content in real time. Also known as open journalism, it’s how Wikipedia turns crowd-sourced effort into reliable knowledge, without paid editors, assigned beats, or corporate oversight. This isn’t groupthink. It’s structured teamwork built on policies, sourcing rules, and a shared belief that knowledge should be free and accurate.

What makes this different from traditional newsrooms? In Wikipedia editing, anyone can improve an article, but every change must follow strict guidelines that ensure neutrality and reliability, and no single person decides what’s true. Instead, edits are debated, cited, and reviewed by others. The Wikipedia community, a global network of volunteers who monitor changes, resolve disputes, and maintain standards across millions of articles, runs on consensus, not hierarchy. That’s why articles about controversial topics like climate change or elections don’t reflect one person’s opinion; they reflect what reliable sources agree on. This system works only because people show up, not for fame or money but because they care about getting it right.

And it’s not just about writing. Volunteer journalism, the practice of researching and publishing factual news stories on open platforms like Wikinews and The Signpost, is alive and active. Volunteers track policy changes, expose vandalism, and document internal debates, just like reporters but without bylines or paychecks. They use tools like watchlists, edit summaries, and talk pages to keep things transparent. When a major event happens, like a natural disaster or political shift, hundreds of editors jump in to update articles with verified details sourced from trusted media, academic papers, or official releases. They don’t chase clicks. They chase accuracy.

There are no algorithms making the final call. No AI auto-writing summaries. Just humans checking sources, asking questions, and sometimes arguing for days over a single sentence. That’s the cost of trust. And it’s why, even with all the AI tools out there, many people still turn to Wikipedia first. Behind every fact is a story of collaboration: someone taking the time to fix a mistake, cite a source, or defend a minority view. You won’t find that in a chatbot.

Below, you’ll find real stories from inside this system: how editors handle bias, how they fight harassment, how they keep the site running without ads, and how they decide what deserves to be in the encyclopedia. These aren’t theoretical guides. They’re field reports from the front lines of open knowledge.

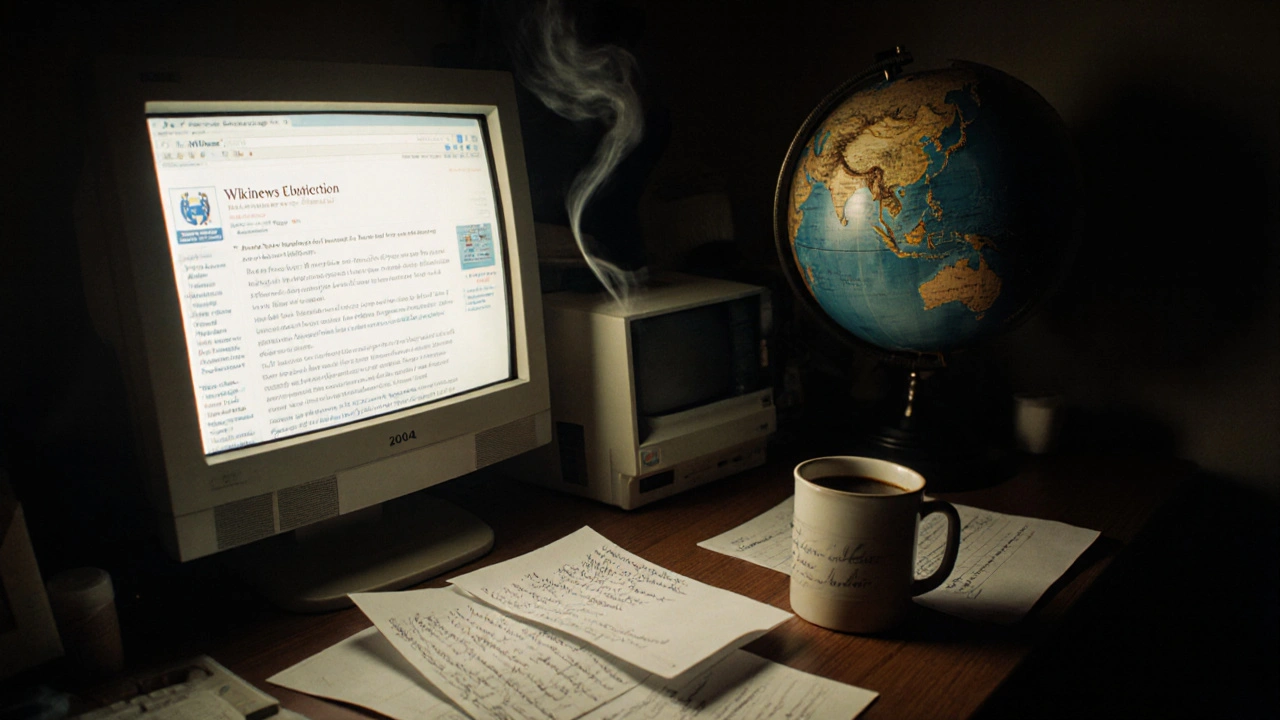

The History and Evolution of Wikinews Since Its 2004 Launch

Wikinews launched in 2004 as a volunteer-driven news site using Wikipedia’s open model. Though it never went mainstream, it pioneered transparent, source-based journalism and still operates today as a quiet archive of verifiable reporting.