Wikipedia News Archive: September 2025 Updates and Community Trends

Wikipedia, the free, community-edited online encyclopedia, is one of the world’s most visited websites. Often called simply "the free encyclopedia," it runs on the efforts of hundreds of thousands of volunteers who edit, debate, and defend its content every day. In September 2025, those efforts focused on big shifts: new tools to fight vandalism, heated debates over content moderation, and a quiet but growing movement to bring in more editors from underrepresented regions.

The Wikimedia movement, also known as the Wikimedia community, is the global network of volunteers, chapters, and organizations that support Wikipedia and its sister projects. Its work goes beyond writing articles to include governance, funding, and protecting the platform from abuse. This month, the movement faced pressure over how it handles disputes in high-traffic topics such as politics and health. Several regional chapters reported spikes in local edit-a-thons aimed at closing content gaps, especially in African and Southeast Asian languages. Meanwhile, the Wikimedia Foundation quietly rolled out a new machine-learning filter to catch coordinated spam campaigns, which blocked over 12,000 fake accounts in its first week.

Editor trends, also known as contributor behavior, are the patterns in how people contribute to Wikipedia: who edits, how often, and which topics they focus on. These trends reveal the health of the platform. Data from September showed a 14% drop in edits from long-term editors in North America and Europe, while new editors from India, Nigeria, and Brazil made up nearly 40% of all new accounts. Many of these newcomers arrived through mobile apps and school outreach programs. Retention remains a problem, though: over half of new editors made just one edit and then disappeared. The community responded with simplified onboarding guides and video tutorials in 12 languages.

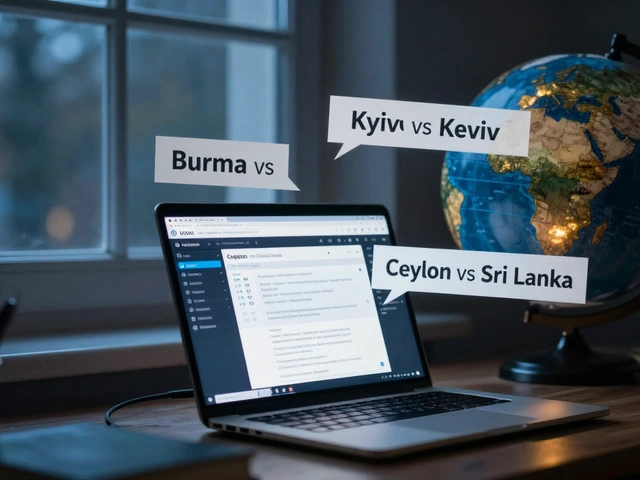

Policy changes, sometimes grouped under the label of Wikipedia guidelines, are official updates to Wikipedia’s rules that affect how content is added, removed, or debated. They are the invisible laws that keep the site running. The most talked-about change this month was the updated Neutral Point of View policy, which now explicitly requires editors to cite independent sources for claims about living people, even if those claims are widely believed. This came after a series of high-profile disputes over biographies of public figures. Another rule tweak made it easier for users to request the deletion of low-quality articles created by bots, cutting that clutter by over 20% in two weeks.

Then there is anti-vandalism, also known as content protection: the ongoing effort to detect and undo malicious edits, spam, and false information on Wikipedia. It is the quiet backbone of the site’s reliability. In September, automated tools caught a wave of coordinated edits trying to insert false information into pages about elections and medical conditions. A new feature called "Edit Shield" gave trusted editors the power to lock high-risk pages for 72 hours after major news events, a measure that had been debated for years before it was finally approved. The result was a 30% drop in disruptive edits during global news spikes.

What you’ll find in this archive isn’t just a list of headlines. It’s a snapshot of how a living, breathing community keeps the world’s largest encyclopedia accurate, open, and free—even when the pressure mounts. You’ll see real stories from editors who stayed up past midnight to fix misinformation, policy debates that lasted weeks, and small wins that barely made the news but mattered deeply to those involved. These aren’t abstract concepts. They’re actions taken by real people. And they’re what keep Wikipedia working.

How to Repair Your Reputation After a Wikipedia Dispute

Wikipedia disputes can damage your reputation even after they’re resolved. Learn how to recover by using credible sources, avoiding conflict-of-interest mistakes, and building positive content that outranks the article in search results.

How Wikipedia Uses Wikidata to Support Citations and Source Metadata

Wikipedia uses Wikidata to store structured metadata for citations, making sources more reliable, easier to verify, and automatically updatable across articles. This system helps combat misinformation and improves global knowledge accuracy.

Publishing Workflow on Wikinews: From Draft to Peer Review

Learn how Wikinews turns drafts into verified news through open peer review. No editors, no paywalls: just truth, sources, and community checks.

Women and Non-Binary Editors: Programs That Work on Wikipedia

Women and non-binary editors are transforming Wikipedia through targeted programs that build community, reduce bias, and expand knowledge. Learn which initiatives are making real change, and how you can help.

How Wikipedia Maintains Neutral Coverage of Religion and Belief Topics

Wikipedia maintains neutral coverage of religion by relying on reliable sources, avoiding personal bias, and representing all beliefs fairly. Learn how it handles controversy, small faiths, and conflicting claims without taking sides.

What Newcomers Should Know Before Joining Wikipedia Community Discussions

Before joining Wikipedia discussions, newcomers should understand the community’s focus on consensus, reliable sources, and civility. Learn how to edit respectfully, avoid common mistakes, and contribute effectively without triggering backlash.

Wikipedia Talk Pages as Windows Into Controversy for Journalists

Wikipedia Talk pages reveal the hidden battles over facts, bias, and influence behind controversial topics. Journalists who learn to read them can uncover leads, detect misinformation, and understand how narratives are shaped, often before those stories hit the headlines.