AI Literacy: Understanding How AI Shapes Wikipedia and Your Information

When you see an answer from an AI encyclopedia, you need AI literacy: the ability to understand how artificial intelligence generates, filters, and sometimes distorts information. Also known as digital information literacy, it’s not about coding. It’s about knowing when an AI is guessing, when it’s repeating bias, and when it’s hiding the fact that it didn’t check a single source. Most people think AI encyclopedias are faster and smarter than Wikipedia, but speed doesn’t mean accuracy. AI can spit out a confident answer backed by fake citations: sources that look real but don’t actually say what the AI claims. Meanwhile, Wikipedia’s edits are visible, traceable, and often tied to real books, studies, or news reports. You can click through every reference. AI doesn’t offer that.

AI bias in knowledge, the way algorithms lock in historical inequalities by favoring dominant perspectives, is a quiet crisis. If every training dataset leans on English-language sources from Western institutions, AI will keep pushing out the same narrow view of history, science, and culture. That’s why Wikipedia’s source verification, the practice of checking that every claim is backed by a reliable, published source, matters more than ever. AI doesn’t care if a source is peer-reviewed or a blog post; it just needs to find the words. Human editors on Wikipedia do. They fight to include underrepresented voices, correct misinformation, and remove content that’s been taken down over copyright claims or rewritten by bots without context.

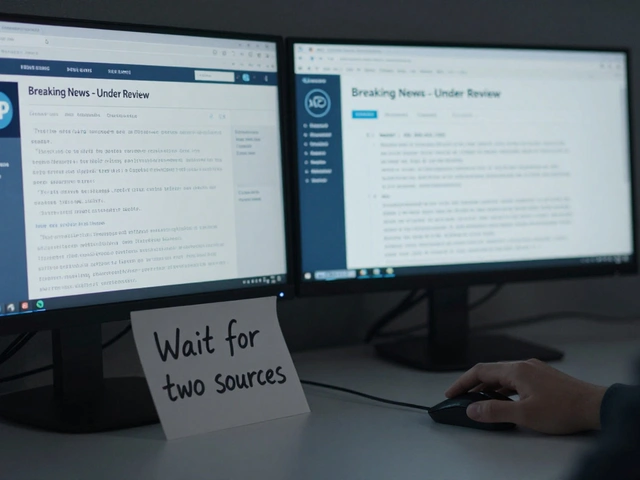

You don’t need to be a tech expert to practice AI literacy. Start by asking: Where did this come from? Can I trace it back to a real document? Is this claim supported by multiple independent sources—or just one that looks official? When you see an AI-generated summary of a historical event, check Wikipedia. You’ll often find the same facts, but with citations, edits, and discussion pages that show how the knowledge was built. That’s the difference between a black box and an open library.

AI literacy isn’t just about protecting yourself from lies. It’s about protecting knowledge itself. When volunteers fix biased entries on Wikipedia, they’re not just editing a page—they’re pushing back against the idea that machines can replace human judgment. The posts below show how this plays out in real time: from AI trying to edit Wikipedia without oversight, to journalists learning how to use Wikipedia to find real sources, to the quiet battles over what gets included—and what gets erased—when algorithms take over.

Wikimedia Foundation's AI Literacy and Policy Advocacy

The Wikimedia Foundation is fighting to ensure AI learns from open knowledge responsibly. Its AI literacy programs and policy advocacy aim to protect Wikipedia’s integrity and demand transparency from AI companies using public data.