Crowdsourced Data: How Wikipedia and Volunteers Shape Reliable Knowledge

When you think of crowdsourced data, think of information gathered from large groups of people, often volunteers, to build or verify knowledge. Also known as collective intelligence, it’s the engine behind Wikipedia’s 60 million articles, all written, checked, and updated by ordinary people rather than paid editors. Unlike corporate databases or AI-trained models, this data doesn’t come from algorithms guessing what’s true. It comes from someone reading a peer-reviewed study, comparing it with another source, and editing a page because they care about getting it right.

This kind of data relies on volunteer editing, meaning the unpaid, community-driven work of updating, fact-checking, and defending Wikipedia content. It’s not random. Editors follow strict rules: no original research, only reliable sources, and consensus before changes stick. You’ll see this in action in posts about the Wikimedia Foundation, the nonprofit that supports Wikipedia’s infrastructure and defends open knowledge, or about how the Signpost, Wikipedia’s volunteer-run news site covering internal community decisions, picks stories based on impact rather than clicks. Crowdsourced data only works when people show up, every day, to fix vandalism, update obituaries, or argue over whether a movie deserves its own page.

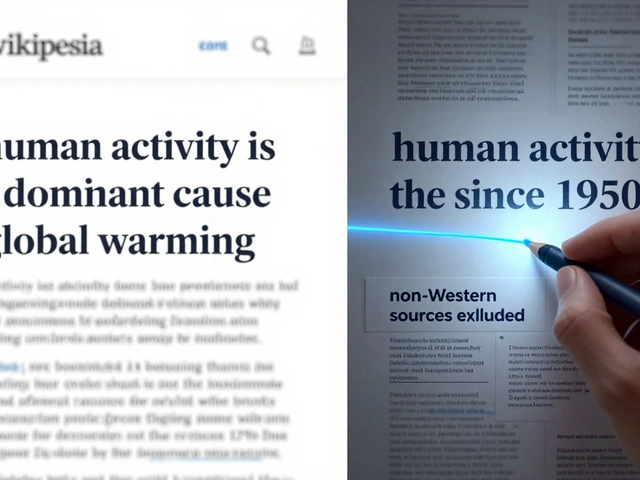

But it’s not perfect. Crowdsourced data can be slow, uneven, or biased when certain voices dominate. That’s why task forces focus on adding Indigenous knowledge, why copy editors clear 12,000 articles in a single drive, and why Wikidata exists: it stores facts in one place that Wikipedia’s 300+ language editions can draw on, so a single correction reaches every page that uses that fact. This isn’t magic. It’s a system built on trust, transparency, and repetition. When journalists use Wikipedia to find leads, they’re not relying on the article itself but on the citations behind it. Those citations are there because someone took the time to verify them.
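The Wikidata idea described here, one shared store of facts that many language editions read from, can be illustrated with a toy model. This is a minimal sketch under stated assumptions: `CentralStore`, `LanguageEdition`, and the item ID `item-1` are hypothetical names invented for illustration, not Wikidata’s real data model or API, and in practice each language edition decides for itself whether a given page pulls a value from Wikidata.

```python
from dataclasses import dataclass, field


@dataclass
class CentralStore:
    """Hypothetical stand-in for a shared fact repository (not Wikidata's real API)."""

    # Each fact is keyed by (item ID, property name) and stored exactly once.
    facts: dict[tuple[str, str], str] = field(default_factory=dict)

    def set_fact(self, item: str, prop: str, value: str) -> None:
        # An edit overwrites the single shared copy of the fact.
        self.facts[(item, prop)] = value

    def get_fact(self, item: str, prop: str) -> str:
        return self.facts[(item, prop)]


@dataclass
class LanguageEdition:
    """Hypothetical language edition that references facts instead of copying them."""

    code: str
    template: str  # a format string with a {value} placeholder
    store: CentralStore

    def render(self, item: str, prop: str) -> str:
        # The value is looked up at render time, so no stale local copy exists.
        return self.template.format(value=self.store.get_fact(item, prop))


if __name__ == "__main__":
    store = CentralStore()
    store.set_fact("item-1", "population", "1,200")
    editions = [
        LanguageEdition("en", "Population: {value}", store),
        LanguageEdition("de", "Einwohner: {value}", store),
    ]
    # One correction in the shared store...
    store.set_fact("item-1", "population", "1,250")
    # ...shows up in every edition that reads this fact.
    for edition in editions:
        print(edition.code, edition.render("item-1", "population"))
```

The design choice is to store each fact once and look it up whenever a page is rendered, rather than copying it into every edition; that indirection is what lets a single edit propagate.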

Below is a collection of real stories from inside this system: how editors handle harassment, how copyright takedowns erase content, how AI tries to mimic human judgment, and why public trust in Wikipedia still beats trust in AI encyclopedias. This isn’t theory. It’s the messy, quiet, vital work of keeping the world’s largest encyclopedia alive, one edit at a time.

Future Directions in Wikipedia Research: Open Questions and Opportunities

Wikipedia research is shifting from traffic metrics to equity. Learn about the open questions around bias, AI, and offline access, and about how to make global knowledge truly inclusive.