Edit Disputes on Wikipedia: How Conflicts Shape Knowledge

When two editors clash over a sentence in an article, it’s not just a disagreement. It’s an edit dispute: a conflict between Wikipedia contributors over content changes, often rooted in policy, bias, or sourcing. When a dispute escalates into repeated back-and-forth reverts, it becomes what is known as an edit war. These clashes are the engine of Wikipedia’s self-correction system: messy, loud, and surprisingly effective. Unlike other platforms where content is controlled by a few, Wikipedia lets anyone challenge what’s written. That means every fact, every word, every date can be questioned. And when that happens, the community doesn’t just delete or revert. It debates.

These disputes don’t happen in a vacuum. They’re shaped by Wikipedia policies, the formal rules that guide editing, like neutral point of view, verifiability, and no original research. When someone adds a claim without a source, or pushes a viewpoint that favors one side, others step in. Sometimes it’s a quick revert. Other times, it spirals into a weeks-long battle on the article’s talk page. That’s when Wikipedia governance, the system of volunteer-led decision-making that includes arbitration, mediation, and community votes, kicks in. The arbitration committee, a group of trusted volunteers who resolve the most serious and repeated conflicts, steps in when things get too heated. They don’t just pick a side. They look at patterns: Who’s editing? What’s their history? Are they using sockpuppets? Are they paid? These aren’t just technical questions. They’re about trust.

Most edit disputes never reach the arbitration committee. They get resolved quietly, through collaboration, better sourcing, or one side simply walking away. But the ones that don’t? They leave marks. They change policies. They spark new tools. They reveal who’s being left out of the conversation. When a dispute over a historical figure’s portrayal leads to a new guideline on cultural bias, that’s not chaos. It’s evolution. And that’s why these fights matter. They’re not about winning. They’re about getting it right.

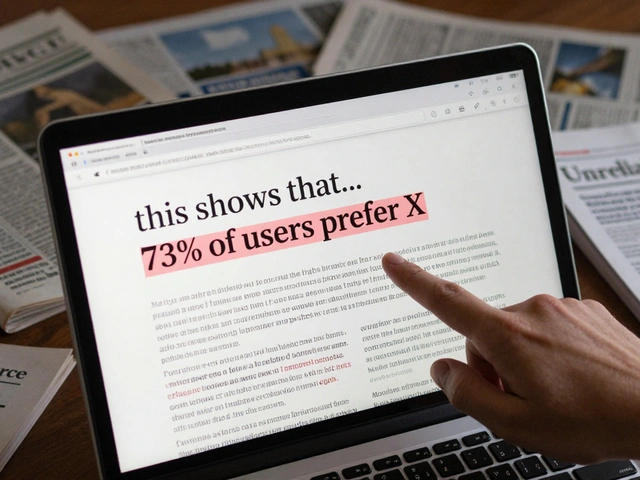

What you’ll find below isn’t a list of heated arguments. It’s a window into how Wikipedia turns conflict into clarity. From how talk pages fix disputed news to how bots help calm edit wars, these stories show the quiet machinery behind the noise. You’ll see how community feedback shapes policy, how volunteers protect editors under threat, and how even the most bitter disputes can lead to better knowledge—for everyone.

How to Monitor Wikipedia Article Talk Pages for Quality Issues

Monitoring Wikipedia talk pages helps identify quality issues before they spread. Learn how to spot red flags, use tools, and contribute to better information across the platform.