Fact-Checking Tools on Wikipedia: How Volunteers Verify Truth Online

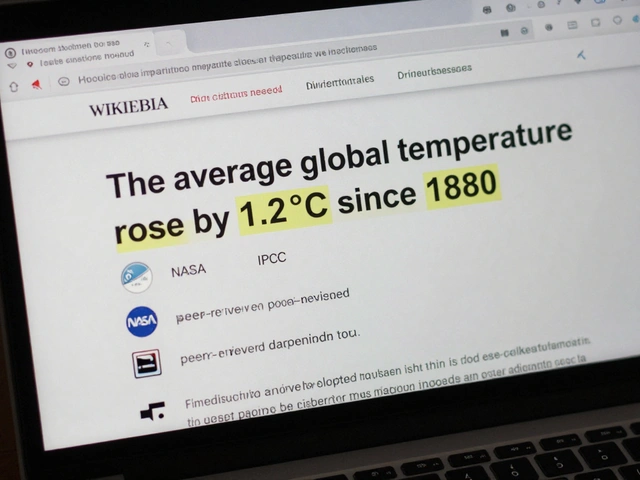

A claim on Wikipedia doesn’t appear out of thin air. Behind it are fact-checking tools: the methods and technologies volunteers use to confirm information. Sometimes called source verification systems, these tools help editors spot false claims, trace statements back to reliable sources, and remove misinformation before it spreads. Unlike AI encyclopedias that can guess at answers and show fabricated citations, Wikipedia’s fact-checking is done by people using real evidence: cited books, peer-reviewed journals, and trusted news outlets.

This system doesn’t work alone. It relies on reliable sources: published materials that editors have vetted for accuracy and credibility. Usually secondary sources, they’re the backbone of every good Wikipedia edit. Editors don’t rely on blogs, press releases, or social media posts; they look for articles from newspapers, academic journals, or official reports. Then they use the Wikipedia watchlist, a tracking feature that shows editors recent changes to pages they follow. Also known as edit monitoring, it helps catch vandalism and false edits in minutes, not days. Tools like these aren’t flashy, but they’re what keep Wikipedia accurate when other sites are drowning in AI-generated noise.
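For readers who want to see what edit monitoring looks like in practice, here is a minimal Python sketch. It is not the watchlist itself, which is a feature for logged-in editors. Instead, it assumes you are happy to approximate the idea by asking the public MediaWiki Action API for the latest revisions of a single page. The function names `build_revisions_url` and `summarize_revisions`, the example page title, and the sample response are all made up for illustration.

```python
from urllib.parse import urlencode

# Public MediaWiki Action API endpoint for English Wikipedia.
API_ENDPOINT = "https://en.wikipedia.org/w/api.php"


def build_revisions_url(title, limit=5):
    """Build a URL asking for the most recent revisions of one page.

    Hypothetical helper: it only assembles the query string and
    does not perform any network request.
    """
    params = {
        "action": "query",
        "prop": "revisions",
        "titles": title,
        "rvprop": "timestamp|user|comment",
        "rvlimit": limit,
        "format": "json",
    }
    return API_ENDPOINT + "?" + urlencode(params)


def summarize_revisions(response):
    """Flatten a parsed JSON response into (title, user, timestamp, comment) rows."""
    rows = []
    pages = response.get("query", {}).get("pages", {})
    for page in pages.values():
        for rev in page.get("revisions", []):
            rows.append(
                (
                    page.get("title", ""),
                    rev.get("user", ""),
                    rev.get("timestamp", ""),
                    rev.get("comment", ""),
                )
            )
    return rows


if __name__ == "__main__":
    # Illustrative page title; fetch this URL with any HTTP client.
    print(build_revisions_url("Fact-checking", limit=3))
```

An editor-style workflow would fetch that URL on a schedule, pass the decoded JSON to `summarize_revisions`, and review any rows that are new since the last check.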

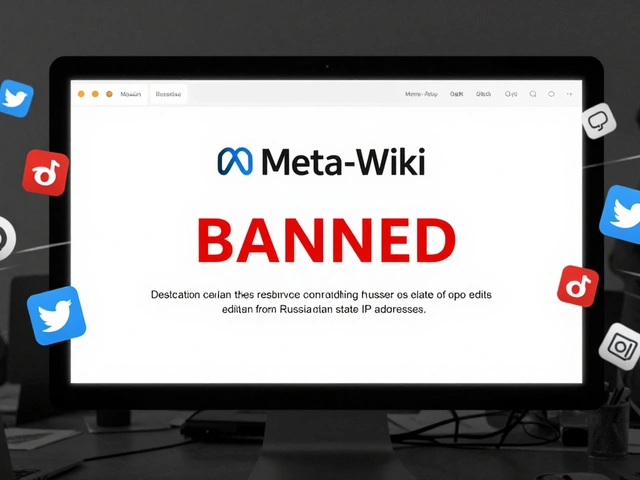

It’s not just about finding sources; it’s about knowing how to use them. That’s where annotated bibliographies come in: organized lists of sources with notes explaining why each one supports a claim. Also known as source summaries, they help editors build strong cases for controversial edits. You’ll see these in long-form article discussions, where volunteers debate whether a fact belongs. And when AI tries to edit Wikipedia? That’s where AI fact-checking comes in: the process of auditing automated edits for bias, hallucinations, or misleading citations. Also known as algorithmic verification, it has become a growing priority as AI tools try to auto-fill Wikipedia entries with made-up references. Volunteers are now spending more time checking AI-generated text than ever before.

Fact-checking on Wikipedia isn’t about being perfect—it’s about being transparent. Every edit can be traced. Every source can be clicked. Every dispute can be reviewed. That’s why people still trust Wikipedia more than AI-generated answers, even when the AI answers faster. The tools aren’t magic. They’re simple. But they work because real people use them every day.

Below, you’ll find real stories from editors who use these tools to fight misinformation, fix biased content, and defend the integrity of the world’s largest encyclopedia. No fluff. No hype. Just how truth gets verified, one edit at a time.

Fact-Checking Using Wikipedia: Best Practices for Journalists

Wikipedia is not a source, but for journalists it’s a powerful tool for finding verified facts. Learn how to use citations, avoid pitfalls, and turn Wikipedia into a gateway to real evidence.