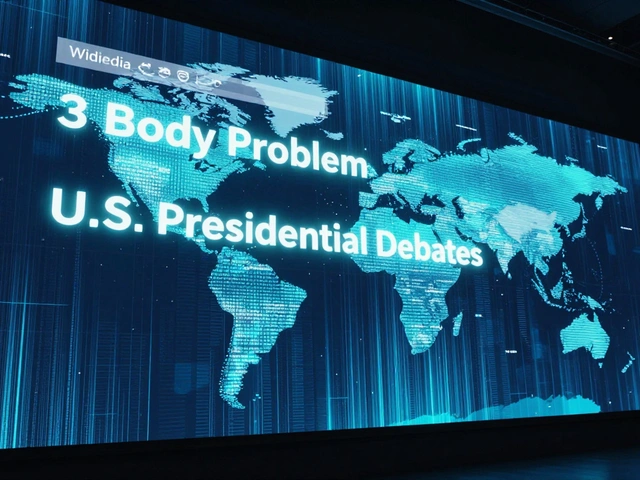

Knowledge platforms: How Wikipedia and open knowledge systems shape what we know

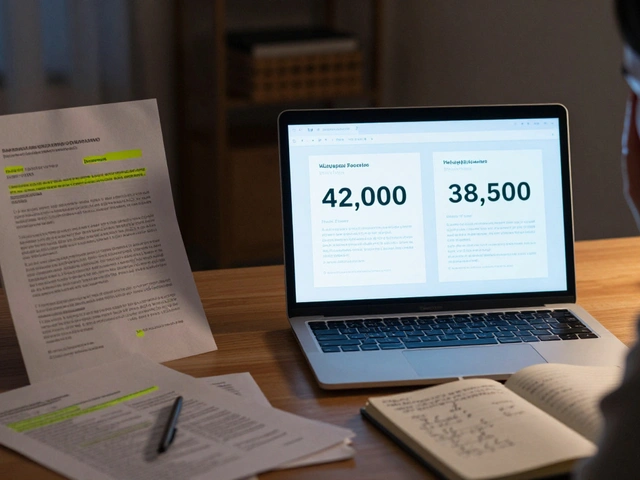

When we talk about knowledge platforms, we mean digital systems built by communities rather than corporations to collect, organize, and share information. Also known as open knowledge systems, they rely on volunteers, not paid editors, to keep content accurate and up to date. Wikipedia is the biggest one, but it's not alone. Behind it are tools, policies, and people working to keep knowledge free, accessible, and fair. These platforms don't just host facts; they fight for them.

What makes a knowledge platform, a system where users collaboratively build and maintain shared information, different from an ordinary website or database? It's the rules. Wikipedia doesn't let anyone add just anything. It relies on policies and guidelines such as neutral point of view, reliable sources, and notability to keep content honest. But those rules get messy. Who decides what's notable? Who gets to edit controversial topics? That's where the Wikimedia Foundation steps in. The nonprofit supports Wikipedia and its sister projects by providing infrastructure, legal aid, and funding for global initiatives; its role is not to control content but to protect the people who make it possible. It invests in safety tools for editors in dangerous regions, funds projects in underrepresented languages such as Swahili and Yoruba, and pushes back against AI companies that scrape Wikipedia without permission.

These platforms don't just reflect knowledge; they shape it. When a student writes a Wikipedia article as a class assignment, that's knowledge being made public. When a volunteer in Nigeria checks local news sources to update a page, that's knowledge becoming more global. When bots fix broken links and human editors debate a policy change on a talk page, that's knowledge being refined. It's messy. It's slow. But it's real. And it's working, despite funding gaps, a shrinking pool of volunteers, and rising misinformation.

What you'll find here isn't a list of how-to guides or tech specs. It's a window into the real world behind Wikipedia: the editors who risk their safety, the grants that keep African-language projects alive, the elections that decide who controls the rules, and the quiet tools that keep the whole thing running. These stories show how knowledge platforms survive: not because they're perfect, but because people still believe in them.

Ethical AI in Knowledge Platforms: How to Stop Bias and Take Back Editorial Control

Ethical AI in knowledge platforms must address bias, ensure editorial control, and prioritize truth over speed. Without human oversight, AI risks erasing marginalized voices and reinforcing harmful stereotypes.