Minority Views on Wikipedia: How Underrepresented Voices Shape the Encyclopedia

When we talk about minority views on Wikipedia, we mean the perspectives and knowledge systems of groups historically excluded from mainstream publishing. Also known as underrepresented perspectives, these views include Indigenous knowledge, non-Western histories, LGBTQ+ experiences, and regional cultural narratives that don’t always fit traditional encyclopedic norms. Wikipedia’s goal is to be the sum of all human knowledge, but for years that sum was skewed by who had the access, time, and confidence to edit. The result? Gaps. Omissions. Biases that quietly became part of the record.

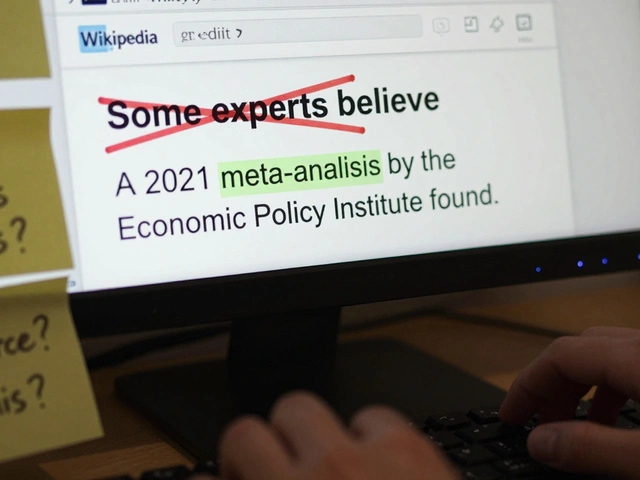

It’s not just about adding more articles. It’s about changing how reliability is judged. A local newspaper in Nigeria or a community oral history project might be dismissed as "not notable", even when it’s the only verified source on a topic. Meanwhile, mainstream Western media gets treated as the gold standard. That’s why systemic bias, the deep-rooted patterns that favor dominant cultures and voices in knowledge creation, is now a major focus for volunteer editors. Task forces, WikiProjects, and edit-a-thons are actively working to fix this. They’re not just adding content; they’re rewriting the rules of what counts as credible. And it’s working. Articles on Indigenous land rights, queer scientists, and African oral traditions are no longer rare exceptions. They’re becoming part of the backbone of the encyclopedia.

But progress isn’t automatic. It needs people. It needs patience. It needs editors who understand that a citation from a university journal isn’t always more valid than one from a tribal council meeting. That’s where Wikipedia diversity, the intentional inclusion of voices from marginalized communities in editing and decision-making, comes in. The Wikimedia Foundation’s 2025-2026 plan puts this front and center, not as a side project but as core to its mission. This isn’t about political correctness. It’s about accuracy. A world encyclopedia that ignores half the world’s stories isn’t complete. It’s broken.

What you’ll find below isn’t a list of complaints. It’s a collection of real efforts—by volunteers, journalists, and local historians—to make Wikipedia reflect the full range of human experience. From how Indigenous narratives are reclaimed on the site, to how AI tools risk erasing minority knowledge, to how small-town histories finally get documented—these stories show what’s possible when the encyclopedia stops being a mirror for the powerful and starts being a window for everyone.

Due Weight on Wikipedia: How to Balance Majority and Minority Views in Articles

Wikipedia's due weight policy ensures articles reflect the real balance of evidence from reliable sources, not popularity or personal bias. Learn how to fairly represent majority and minority views without misleading readers.