News Source Credibility on Wikipedia: How Trust Is Built and Broken

When you read a Wikipedia article, you’re not just reading facts. You’re reading the result of a constant battle over news source credibility: the measure of how trustworthy a publication is when used to verify claims on Wikipedia. Also called source reliability, it’s the backbone of everything Wikipedia aims to be: accurate, neutral, and open. If a source is biased, outdated, or secretly paid for, the article suffers. And if editors can’t tell the difference, misinformation spreads, even on the world’s most visited encyclopedia.

This isn’t just about big newspapers. It’s about local journalists in Nigeria, independent bloggers in Brazil, and academic journals in rural India. Wikipedia relies on reliable sources: publications with editorial oversight, fact-checking, and accountability. Independent secondary sources of this kind are the gold standard for verifying claims. But as local news outlets shut down, Wikipedia loses ground. When the only source left is a blog post with no author or date, editors have to ask: can we trust this? The answer can be distorted by sockpuppetry, the use of fake accounts to push a narrative under false pretenses. Like other forms of coordinated editing, it’s one of the biggest threats to news source credibility because it makes lies look like consensus. When a group of hidden users floods talk pages with links to a single shady article, it tricks others into thinking the source is widely accepted. The system catches some of them, but not all.

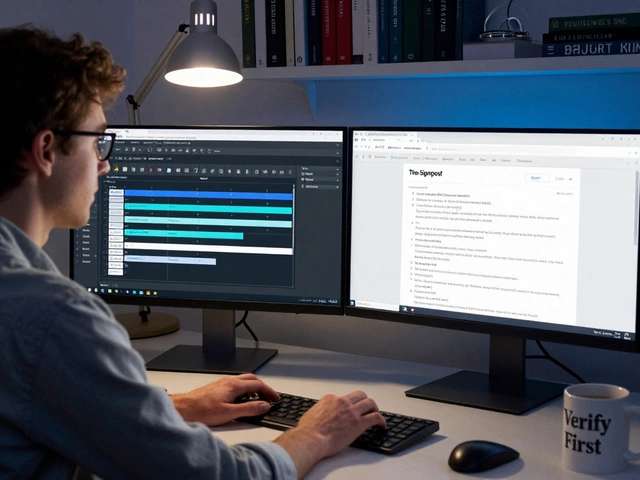

Wikipedia doesn’t have editors working for paychecks. It has volunteers who spend hours checking citations, chasing down broken links, and arguing over whether a tweet counts as evidence. That’s why Wikipedia policies, the rules that guide what can and can’t be added to articles, matter so much. Alongside the content guidelines, they exist to protect the platform from manipulation. The neutral point of view rule isn’t just a suggestion. It’s a firewall. And when editors use talk pages to debate sources instead of starting edit wars, that’s when credibility wins. But it’s fragile. One viral misinformation campaign, one bot that auto-reverts good edits, or one paid editor slipping in a biased quote, and trust cracks.

What you’ll find in the posts below isn’t theory. It’s real cases. How a student group in Canada improved hundreds of articles using peer-reviewed journals. How The Signpost, Wikipedia’s own newspaper, picks stories based on impact, not clicks. How bots fix broken links before humans even notice them. How African language Wikipedias are building credibility from the ground up, using sources in Swahili and Yoruba that mainstream media ignores. You’ll see how editors spot red flags on talk pages, how grants help underrepresented regions find better sources, and why even one unverified photo can ruin an entire article’s trustworthiness. This isn’t about being perfect. It’s about being honest. And that’s harder than it looks.

Local News Sources on Wikipedia: How Reliable Are They in Different Regions?

Wikipedia's local news coverage depends on existing media. In areas without newspapers or reliable outlets, local events vanish from the record. Here's how reliability varies by region, and what you can do about it.