Wikipedia doesn’t take sides. That’s not because it’s boring - it’s because it’s designed to survive in a world where everyone thinks they’re right. When you search for something controversial - abortion, climate change, gun rights, or the history of a war - you don’t want a rant. You want facts. That’s where neutral coverage comes in. It’s the rule that keeps Wikipedia from becoming just another echo chamber.

Neutral doesn’t mean empty

People often misunderstand what neutral coverage means. They think it means saying nothing. Or worse - pretending both sides are equally valid, even when one side is backed by evidence and the other isn’t. That’s not neutrality. That’s false balance.

Neutral coverage on Wikipedia means presenting all significant viewpoints fairly, accurately, and in proportion to how widely they’re held. If 97% of climate scientists agree human activity is warming the planet, then Wikipedia’s article on climate change reflects that. But it also mentions the minority view - not because it’s true, but because it exists in public discourse and has been published in credible sources. The key is context. You don’t just list opinions. You explain where they come from, who supports them, and what evidence they rely on.

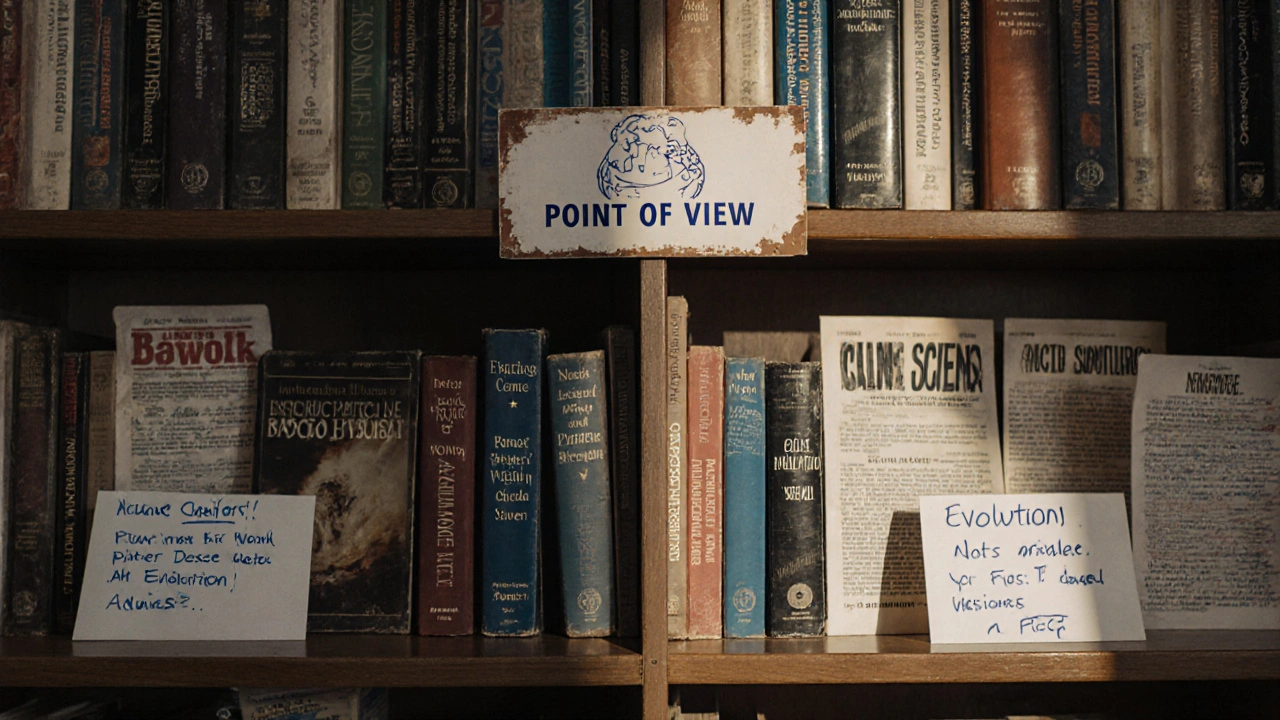

Take the article on evolution. It doesn’t say, "Some people believe in evolution, others believe in creationism." It says: "Evolution by natural selection is the scientific consensus for the diversity of life on Earth, supported by evidence from genetics, paleontology, and comparative anatomy. Creationism, as a religious belief, is not considered a scientific theory and is not taught in science curricula in most countries. However, it has been promoted in some U.S. public school debates and legal cases."

That’s neutral. Not because it’s soft. But because it’s precise.

How Wikipedia enforces neutrality

Wikipedia doesn’t rely on editors’ good intentions. It relies on rules - and a lot of eyes watching.

The Neutral Point of View (NPOV) policy is one of the Five Pillars of Wikipedia. It’s not optional. Every edit, every citation, every word is judged against it. If an editor adds a claim that favors one side without proper sourcing, other editors will revert it. If someone deletes a minority viewpoint entirely, they’ll get flagged for bias. The system is messy, but it works because it’s public.

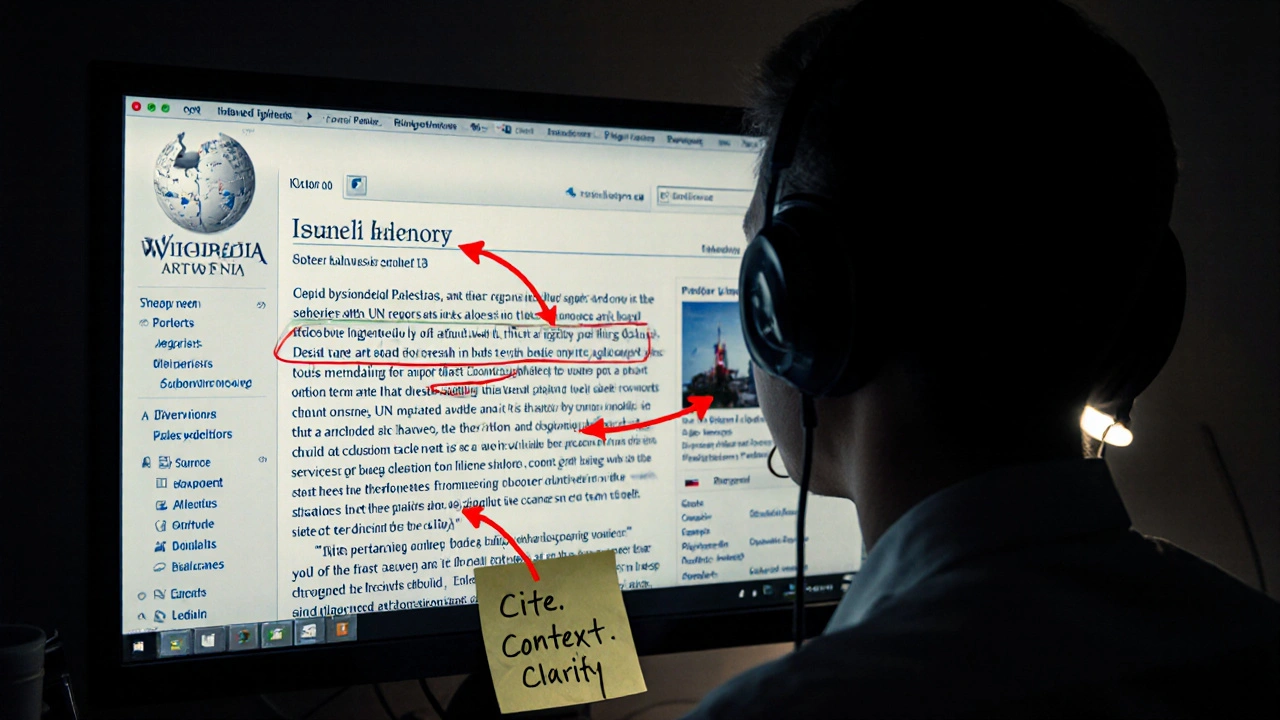

Every edit is logged. Every change can be tracked. Every article has a talk page where disagreements are hashed out. There are no secret moderators. No corporate overlords. Just thousands of volunteers who care enough to argue about whether a sentence about abortion should say "unborn child" or "fetus." And they do it in the open.
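If you want to see that public log for yourself, the edit history is also exposed through the MediaWiki Action API. Here is a minimal sketch, assuming the English Wikipedia endpoint and the standard `prop=revisions` query. The function name `revision_history_url` and the example title are made up for illustration. The code only builds the request URL and does not fetch anything.

```python
from urllib.parse import urlencode

# Public Action API endpoint for English Wikipedia.
API_ENDPOINT = "https://en.wikipedia.org/w/api.php"


def revision_history_url(title: str, limit: int = 5) -> str:
    """Build a URL that asks for the most recent revisions of one article.

    Hypothetical helper for illustration; it does not perform the request.
    """
    params = {
        "action": "query",
        "prop": "revisions",
        "titles": title,
        # Who made each change, when, and the edit summary they left.
        "rvprop": "timestamp|user|comment",
        "rvlimit": limit,
        "format": "json",
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"


url = revision_history_url("Climate change")
print(url)
```

Opening the printed URL in a browser should return a JSON list of recent edits with the editor, the timestamp, and the edit summary for each one. It is the same information shown on the article's "View history" tab.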

When a topic becomes too heated - like the Israeli-Palestinian conflict or the history of colonialism - Wikipedia creates “protected” pages. Only experienced editors can edit them. Disputes are moved to dedicated talk pages. Mediators step in. Sometimes, articles get split into sub-articles to handle different perspectives without drowning the main one.

What happens when neutrality breaks down

It breaks down often. And when it does, it’s usually because someone tries to weaponize neutrality.

One common mistake is giving equal weight to fringe views. For example, an article on vaccines once included a long section on anti-vaccine theories, citing blogs and personal stories. That wasn’t neutral. That was misleading. The community corrected it by requiring all claims to be backed by peer-reviewed journals, government health agencies, or major medical associations. Now, the article says: "Vaccine hesitancy is a social phenomenon with roots in misinformation. While some individuals express concerns, scientific studies consistently show vaccines are safe and effective."

Another problem is sourcing bias. If an article on gun control only cites liberal think tanks and ignores conservative ones, it’s biased. But if it only cites conservative sources that reject public health data, it’s also biased. Neutral coverage demands a mix - and a way to judge credibility. Wikipedia uses reliable sources: academic journals, books from university presses, major newspapers, government reports. Blogs, forums, and YouTube videos don’t count - unless they’re cited by someone who’s credible.

There’s also the issue of language. Words like "radical," "extremist," or "fraud" can carry judgment. Editors are trained to avoid loaded terms unless they’re directly quoted from a reliable source. Instead of saying "climate change deniers," they write "individuals who reject the scientific consensus on climate change." It’s clunky. But it’s fair.

Why this matters in a polarized world

In 2025, people don’t trust institutions. They don’t trust the media. They don’t trust schools. But they still trust Wikipedia - because it doesn’t look like a news channel. It doesn’t have ads. It doesn’t have algorithms pushing outrage. It looks like a library.

Studies show that Wikipedia is one of the most trusted sources for controversial topics, even among people who distrust other forms of media. Why? Because it doesn’t pretend to be the truth. It shows you the truth - and the arguments around it.

When someone searches "did the Holocaust happen?" and finds a clear, sourced answer backed by historians, museums, and court records - that’s powerful. When they search "is the Earth flat?" and find a section titled "Scientific consensus" followed by a list of evidence and a note that flat-Earth beliefs are not supported by any credible scientific body - that’s not censorship. That’s clarity.

Neutral coverage doesn’t solve polarization. But it gives people a place to check facts without being shouted down. It doesn’t tell you what to believe. It tells you what’s documented, what’s debated, and what’s dismissed - and why.

The limits of neutrality

Wikipedia’s neutrality isn’t perfect. It’s limited by who contributes. Most editors are from North America and Europe. That means some global perspectives - especially from the Global South - are underrepresented. A topic like colonialism might be covered with Western academic sources, missing indigenous oral histories or local scholarship.

There’s also the problem of systemic bias. Articles about men, Western history, and technology tend to be longer and better sourced than those about women, non-Western cultures, or marginalized groups. Efforts to fix this - like WikiProject Women in Red or edit-a-thons focused on African history - are ongoing. But progress is slow.

And neutrality can’t fix bad sources. If every major newspaper in a country is state-controlled and repeats government propaganda, Wikipedia can’t magically create truth. It can only report what’s published - which sometimes means reflecting falsehoods as if they’re legitimate viewpoints. That’s why sourcing matters more than ever.

What you can do

You don’t need to be an editor to help. If you read a Wikipedia article on a polarized topic and notice something missing - a key perspective, a missing citation, a loaded word - you can fix it. You don’t need permission. You just need to follow the rules: cite reliable sources, avoid original research, and stick to the facts.

Or, if you’re not ready to edit, just compare articles. Look at how Wikipedia covers a topic versus how a partisan website does. You’ll see the difference. One tells you what to think. The other tells you what people think - and why.

Wikipedia’s neutrality isn’t about being perfect. It’s about being transparent. It’s about showing the work. And in a world full of noise, that’s the quietest, most powerful kind of truth.

Does Wikipedia’s neutral coverage mean all opinions are treated equally?

No. Wikipedia doesn’t treat all opinions as equally valid. It presents viewpoints in proportion to their representation in reliable, published sources. For example, scientific consensus on climate change is given far more weight than fringe denialist claims, even if both are mentioned. The goal is accuracy, not symmetry.

Can Wikipedia be biased even if it follows neutrality rules?

Yes. Bias can creep in through sourcing choices, language use, or gaps in coverage. If most editors come from one region or background, certain perspectives may be underrepresented. Wikipedia’s neutrality policy helps reduce bias, but it doesn’t eliminate it entirely. Ongoing efforts focus on diversifying editorship and improving coverage of underrepresented topics.

Why doesn’t Wikipedia just delete false claims?

Wikipedia doesn’t delete false claims - it documents them. If a false claim is widely believed or has been published in credible sources (like a political speech or a viral article), it’s included with context explaining why it’s incorrect. This prevents misinformation from being hidden, while still correcting it. Deleting content entirely can make people think it’s being censored.

How does Wikipedia handle topics with no scientific consensus?

For topics without a clear consensus - like the origins of certain diseases or interpretations of historical events - Wikipedia presents the range of scholarly views, citing reputable sources for each. It avoids taking sides and instead explains the nature of the disagreement. Phrases like "some scholars argue" or "others contend" are used to reflect uncertainty without favoring any position.

Is Wikipedia’s neutrality just a facade for liberal bias?

Claims of liberal bias often come from people who expect Wikipedia to reflect their own views. But the policy requires sourcing from credible, independent outlets - which includes conservative, libertarian, and centrist publications. Articles on topics like immigration, religion, or economics include perspectives from across the political spectrum, as long as they’re documented in reliable sources. The system is designed to be resistant to ideological dominance, not to promote one ideology.