Public Trust in Wikipedia: How Open Knowledge Earns Credibility

Public trust is the collective confidence people place in an institution or system because it’s seen as honest, reliable, and accountable. Also known as social credibility, it’s what keeps Wikipedia alive even when people know it’s edited by strangers. Most websites try to buy trust with ads or celebrity endorsements. Wikipedia doesn’t have any of that. It doesn’t run ads. It doesn’t pay editors. It doesn’t chase clicks. Instead, it builds trust the hard way: through consistent rules, open processes, and thousands of volunteers who show up every day to fix errors, cite sources, and defend neutrality.

This kind of trust doesn’t happen by accident. It comes from reliable sources: third-party publications such as academic journals, books, and established news outlets that have editorial standards and fact-checking. These sources are the backbone of every Wikipedia article. When you see a claim on Wikipedia, it’s supposed to be backed by a source that has already passed editorial review. That’s why journalists use Wikipedia not as a source, but as a map to real evidence. And when mistakes happen, the system doesn’t hide them; it shows them. Edit histories are public. Talk pages are open. Disputes are documented. This transparency is what sets Wikipedia apart from traditional encyclopedias.

The Wikimedia Foundation, the nonprofit that supports Wikipedia’s infrastructure, servers, and legal protections but doesn’t control content, plays a quiet but vital role. It doesn’t write articles. It doesn’t decide what’s true. But it does protect the platform from legal threats, build tools that help editors spot vandalism, and fight for policies that keep knowledge free and open. When AI companies scrape Wikipedia to train their models, the Foundation pushes back, not to block access, but to demand credit, transparency, and ethical use. That’s not PR. That’s accountability.

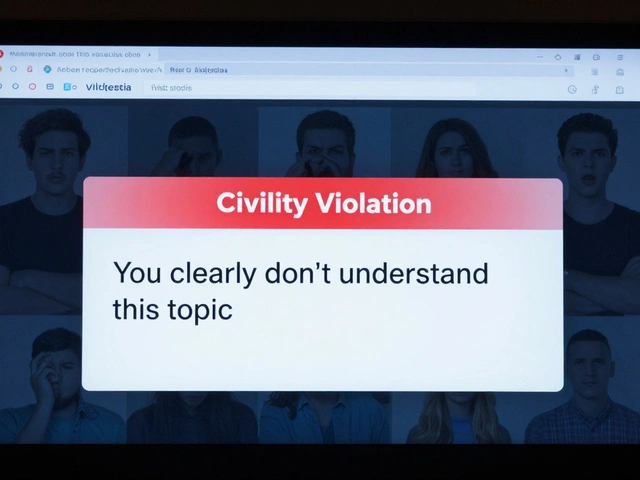

Public trust in Wikipedia isn’t about being flawless. It’s about being repairable. When a biased edit slips through, someone catches it. When a source gets outdated, someone updates it. When a community feels ignored, such as Indigenous editors or speakers of smaller languages, task forces form to fix the gaps. This isn’t magic. It’s a system designed to self-correct, and that’s rare in the digital world.

You won’t find this kind of trust in a corporate wiki, a paid encyclopedia, or an AI-generated summary. Those systems optimize for speed, profit, or control. Wikipedia optimizes for accuracy, fairness, and openness. That’s why, even as misinformation spreads everywhere else, millions still turn to it first—not because they believe it’s perfect, but because they believe in the process behind it.

Below, you’ll find real stories from inside Wikipedia’s ecosystem—how policies are made, how volunteers protect the site, how funding decisions are debated, and how the community fights to keep knowledge honest. These aren’t abstract ideas. They’re the daily work that keeps public trust alive.

Public Perception of Wikipedia vs Emerging AI Encyclopedias in Surveys

Surveys show people still trust Wikipedia more than AI encyclopedias for accurate information, despite faster AI answers. Transparency, source verification, and human editing keep Wikipedia ahead.