Wikipedia fake accounts: How sockpuppets undermine trust and what’s being done about them

Wikipedia fake accounts are user profiles created to secretly control edits while hiding the operator’s true identity. Also known as sockpuppets, these accounts are designed to trick the community into thinking multiple people agree with a single agenda. The problem goes beyond spam or vandalism: it is deception. Someone banned for pushing false claims might return under a new name, vote in arbitration elections they are barred from, or flood talk pages with fake support to sway consensus. This isn’t rare. In 2022, the Wikimedia Foundation reported that over 1,200 confirmed sockpuppet networks were shut down globally.

These fake accounts don’t just break rules; they break trust. When a well-meaning editor sees ten users all supporting the same edit, they assume it’s community consensus. But if nine of those users are controlled by one person, that’s not consensus, it’s manipulation. Sockpuppets, fake identities used to circumvent bans or manipulate discussion, often target controversial topics: politics, corporate figures, celebrities, and local history. They’re used to erase criticism, inflate importance, or bury inconvenient facts. And they’re hard to catch because they mimic real behavior: they comment thoughtfully, cite sources, and avoid obvious spam.

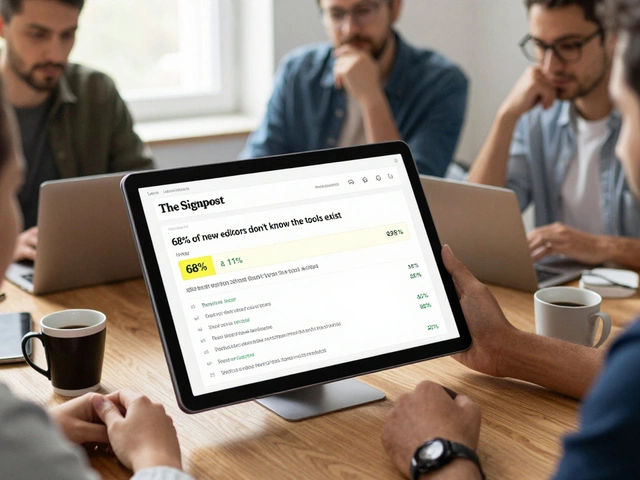

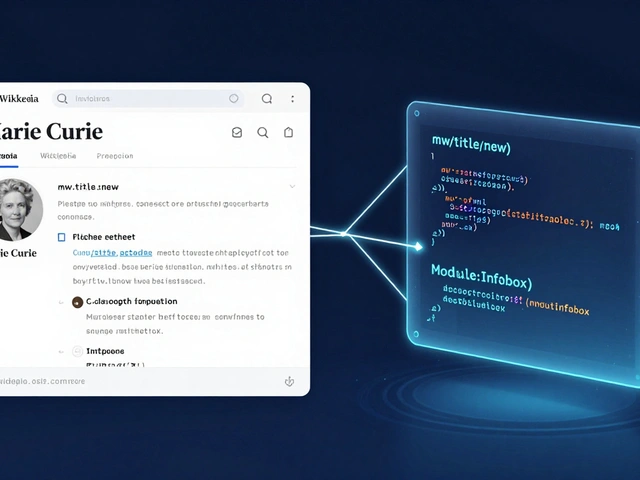

Wikipedia fights back with tools and people. Wikipedia bots, automated programs that detect patterns of suspicious editing, scan for telltale signs: identical writing styles across accounts, overlapping edit times, shared IP addresses, or edits that follow a banned user’s old patterns. Volunteers in the Arbitration Committee, a group of experienced editors who handle serious disputes and bans, investigate suspected networks, often tracing edits back to a single person across dozens of accounts. When caught, these accounts get blocked permanently, and the real user may face a site-wide ban.
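To make one of those signals concrete, here is a minimal sketch of how "overlapping edit times" could be compared between accounts. It is an illustration only, not how Wikipedia's bots or investigators actually work: the account names, the sample timestamps, the helper names (`hourly_profile`, `cosine_similarity`, `similar_pairs`), and the 0.9 threshold are all hypothetical.

```python
from collections import Counter
from datetime import datetime
from itertools import combinations
from math import sqrt


def hourly_profile(timestamps):
    """Count one account's edits per hour of day (0-23)."""
    return Counter(ts.hour for ts in timestamps)


def cosine_similarity(a, b):
    """Cosine similarity between two hour-of-day edit profiles."""
    dot = sum(a[h] * b[h] for h in range(24))
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def similar_pairs(edits, threshold=0.9):
    """Return account pairs whose edit-time profiles are at least `threshold` similar.

    `threshold` is an arbitrary, hypothetical cutoff chosen for this example.
    """
    profiles = {name: hourly_profile(ts) for name, ts in edits.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(profiles), 2)
        if cosine_similarity(profiles[a], profiles[b]) >= threshold
    ]


# Hypothetical edit logs: account name -> timestamps of its edits.
edits = {
    "AccountA": [datetime(2024, 1, 1, 9), datetime(2024, 1, 2, 9), datetime(2024, 1, 3, 10)],
    "AccountB": [datetime(2024, 1, 1, 9), datetime(2024, 1, 4, 9), datetime(2024, 1, 5, 10)],
    "AccountC": [datetime(2024, 1, 1, 22), datetime(2024, 1, 2, 23)],
}

print(similar_pairs(edits))
```

A matching schedule proves nothing on its own, since two unrelated editors in the same time zone can look alike. At most, a score like this would flag a pair for a human to review alongside the other signals.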

It’s not perfect. Some fake accounts slip through, especially patient ones that edit slowly over months to avoid detection. Others are run by organizations trying to shape coverage without revealing their ties. But the system works because it’s open. Anyone can check edit histories, flag suspicious activity, or report suspected sockpuppets on Wikipedia’s noticeboards. You don’t need to be an expert, just observant.

What you’ll find in the posts below isn’t just theory. It’s real cases, real tools, and real people who’ve spent years untangling deception on Wikipedia. From how bots catch sockpuppets to how editors spot fake consensus, these stories show the quiet, relentless work behind keeping Wikipedia honest.

Sockpuppetry on Wikipedia: How Fake Accounts Undermine Trust and What Happens When They're Caught

Sockpuppetry on Wikipedia involves fake accounts manipulating content to push agendas. Learn how investigations uncover these hidden users, the damage they cause, and why this threatens the platform's credibility.