Wikipedia Integrity: How Trust Is Built, Broken, and Fixed on the World's Largest Encyclopedia

When you read a Wikipedia article, you’re not just reading words; you’re seeing the result of millions of decisions made by real people trying to keep knowledge honest. Wikipedia integrity, also known as Wikipedia reliability, is the collective effort to ensure accuracy, neutrality, and trustworthiness across all articles. It’s what makes the site usable for students, journalists, and curious minds worldwide. But this integrity isn’t automatic. It’s fragile. It’s fought for. And sometimes, it’s broken by people who don’t want the truth to win.

One of the biggest threats to Wikipedia integrity is sockpuppetry, the use of fake accounts to manipulate content and deceive the community. These hidden editors push agendas under false identities, often in service of corporations, political groups, or personal vendettas. When these accounts are caught, through careful investigations and bot alerts, it’s not just one edit that gets reverted. It’s a whole system of trust that gets shaken. That’s why content moderation, the ongoing process of reviewing, correcting, and enforcing rules on Wikipedia, matters so much. Sometimes described as Wikipedia governance, it’s the invisible labor that keeps the site from collapsing under bias and spam. It’s not about censorship. It’s about fairness. It’s about making sure that the person who edits a page about climate change isn’t paid by an oil company, and that the person writing about a local politician isn’t their cousin.

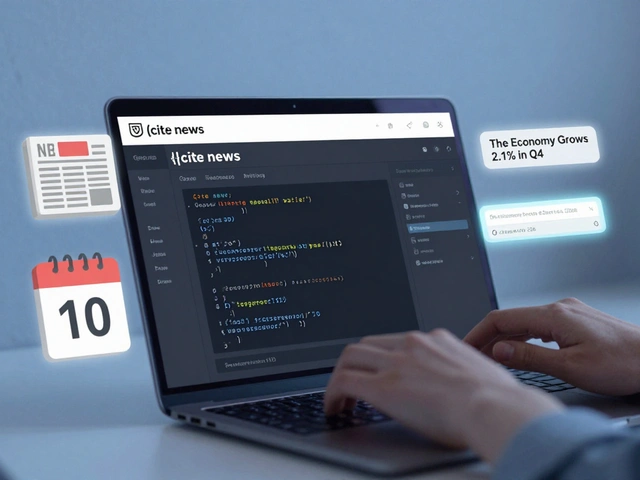

Wikipedia doesn’t have editors in boardrooms. It has volunteers. People who stay up late checking citations. People who argue for hours on talk pages over whether a source is reliable. People who notice when a photo is mislabeled or a quote is taken out of context. Their work depends on reliable sources: third-party, published materials that back up claims on Wikipedia. Often secondary sources, they’re the foundation of every good article. Without them, Wikipedia becomes rumor. With them, it becomes a reference that even experts trust. But here’s the problem: as local newspapers shut down, those sources vanish, especially in Africa, Latin America, and rural Asia. That’s why Wikipedia’s integrity isn’t just a technical issue. It’s a global justice issue.

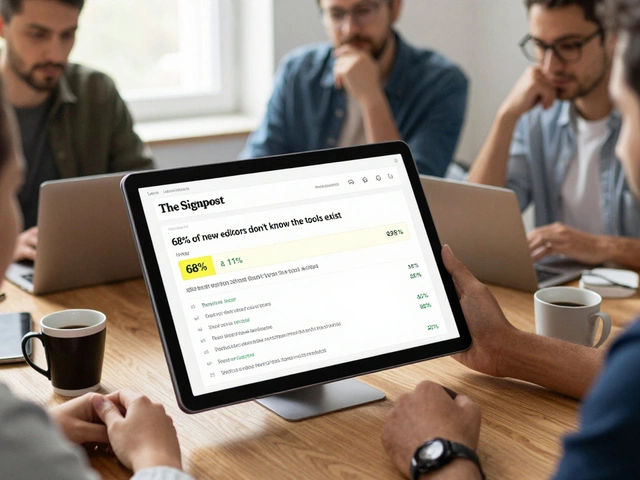

What you’ll find in this collection isn’t theory. It’s real cases. Real tools. Real people. From how bots catch vandalism before you even see it, to how students in universities are helping fix misinformation. From the quiet editors who spend years improving articles nobody notices, to the high-stakes elections that decide who gets to ban others. This isn’t about Wikipedia being perfect. It’s about how hard it works to stay honest—and what happens when it doesn’t.

Safety and Integrity Investments: Protecting Wikipedians

Wikipedia relies on volunteers who face real threats for editing controversial topics. The Wikimedia Foundation is investing in safety tools, legal aid, and anonymity features to protect these editors and preserve the integrity of free knowledge.