Wikipedia moderation: How volunteers keep the encyclopedia accurate and safe

When you read a Wikipedia article, you’re seeing the result of Wikipedia moderation, the collective effort of volunteers who review edits, enforce policies, and remove harmful content. Also known as content oversight, it’s not done by algorithms alone—it’s powered by people who spend hours checking sources, reverting spam, and calming heated debates. Unlike commercial sites that rely on paid moderators or automated filters, Wikipedia’s system runs on trust, transparency, and a shared belief that knowledge should be open—but not reckless.

This system relies on a few key tools and practices. Watchlists, personalized tracking tools that let editors monitor changes to articles they care about, help catch vandalism before it spreads. Policies and guidelines, the rulebook that separates mandatory rules from helpful advice, guide how edits should be made and disputes resolved. And the Signpost, Wikipedia’s volunteer-run newspaper that reports on community decisions and emerging issues, keeps editors informed about what’s changing behind the scenes. These aren’t just features; they’re the backbone of a system that handles over 600 million page views a day without ads or corporate oversight.

Wikipedia moderation isn’t perfect. It struggles with burnout, off-wiki harassment, and systemic bias. But it works because it’s transparent. Every edit is visible. Every policy is public. Every dispute has a paper trail. That’s why, even as AI encyclopedias promise faster answers, people still trust Wikipedia more. It’s not because it’s flawless—it’s because you can see how the truth was built, one edit at a time.

Below, you’ll find real stories from the front lines: how volunteers clear a backlog of thousands of articles awaiting copy editing, how editors handle harassment that spills beyond the site, how AI is being used, and misused, in content moderation, and how the community fights to include underrepresented voices. These aren’t abstract debates. They’re daily choices that shape what the world sees as fact.

Sockpuppet Detection and Prevention on Wikipedia: Key Methods

Wikipedia combats sockpuppet accounts through technical tools and volunteer vigilance. Learn how detection works, what signs to watch for, and why this matters for online trust.

Current Wikipedia Requests for Comment Discussions Roundup

Wikipedia's community-driven decision-making through Requests for Comment shapes how content is created and moderated. Current RfCs are tackling bias, bot edits, institutional influence, and global representation.

What Wikipedia Administrators Do: Roles and Responsibilities Explained

Wikipedia administrators are unpaid volunteers who maintain the site by enforcing policies, handling vandalism, and mediating disputes. They don't decide what's true; they ensure rules are followed.

Bans of High-Profile Wikipedia Editors: What Led to Them

High-profile Wikipedia editors have been banned for abuse of power, sockpuppeting, and paid editing. These cases reveal how the community enforces fairness, even against its most experienced members.

Living Policy Documents: How Wikipedia Adapts to New Challenges

Wikipedia's policies aren't static rules; they're living documents shaped by community debate, real-world threats, and constant adaptation. Learn how volunteers keep the encyclopedia accurate and trustworthy.

How Wikipedia Enforces Behavioral Policies: Civility, Harassment, and Blocks

Wikipedia enforces civility and fights harassment through volunteer moderation, public blocks, and transparent policies. Learn how editors are warned, blocked, and held accountable without corporate oversight.

Autopatrolled Status on Wikipedia: What It Takes and What It Means

Autopatrolled status on Wikipedia lets trusted editors skip manual review of their changes. Learn the criteria, responsibilities, and why this quiet system keeps the encyclopedia running.

How Wikipedia Administrators Evaluate Unblock Requests

Wikipedia administrators must carefully evaluate unblock requests by reviewing user history, assessing genuine change, and applying policy with fairness. Learn how to distinguish between sincere apologies and repeated disruption.

Governance Models: How Wikipedia Oversees AI Tools

Wikipedia uses AI to help edit articles, but strict community-led oversight ensures accuracy and fairness. Learn how volunteers, policies, and transparency keep AI in check on the world’s largest encyclopedia.

How Wikipedia Resolves Editorial Disagreements: The Real Process Behind Editorial Consensus

Wikipedia resolves editorial disagreements through consensus, not votes. Editors use policies, talk pages, and mediation to agree on accurate, sourced content. Learn how real people build reliable information together.

How Wikipedia Handles Vandalism Conflicts and Edit Wars

Wikipedia handles vandalism and edit wars through a mix of automated bots, volunteer moderators, and strict sourcing rules. Conflicts are resolved by community consensus, not votes, and persistent offenders face bans. Transparency and accountability keep the system working.

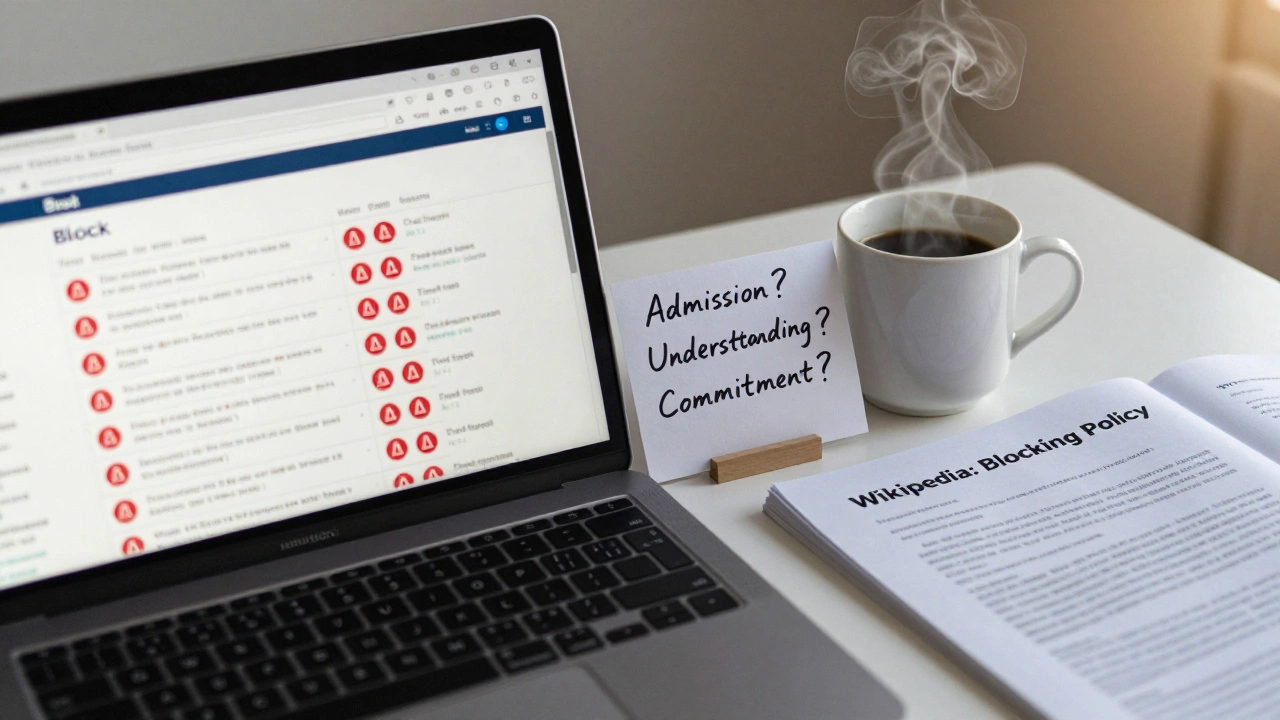

Blocking Policy Case Studies from Wikipedia Administrator Noticeboards

Real case studies from Wikipedia's administrator noticeboards show how blocks are decided, appealed, and refined. Transparency, policy, and community oversight drive moderation, not secrecy.