Academic Research Wikipedia: How Scholars Study and Shape the Encyclopedia

Academic research on Wikipedia is the systematic study of how Wikipedia works as a knowledge platform, including its editors, policies, and technical systems. Also known as Wikipedia scholarship, it’s not just about counting edits; it’s about understanding how truth, bias, and collaboration play out at scale. This isn’t theory in a vacuum. Researchers are watching how students rewrite articles for class, how bots catch spam before humans even see it, and how geopolitical edit wars distort articles about Ukraine or Taiwan. Their findings don’t stay in journals. They change how Wikipedia is built.

One key player is the Wikipedia community, the global network of volunteers who write, edit, and enforce rules on the platform. Researchers found that librarians and educators make up a surprisingly large share of top contributors, not because they’re paid, but because they’re trained to verify sources and spot bias. That training shows in Wikipedia’s strict sourcing standards, which are not just guidelines but defenses against AI-generated falsehoods. And when editors hide their conflicts of interest, studies show it doesn’t just break rules; it breaks trust. Wikipedia policy, the set of rules governing content, behavior, and editing practices on the platform, exists because real people got burned and researchers stepped in to help fix it.

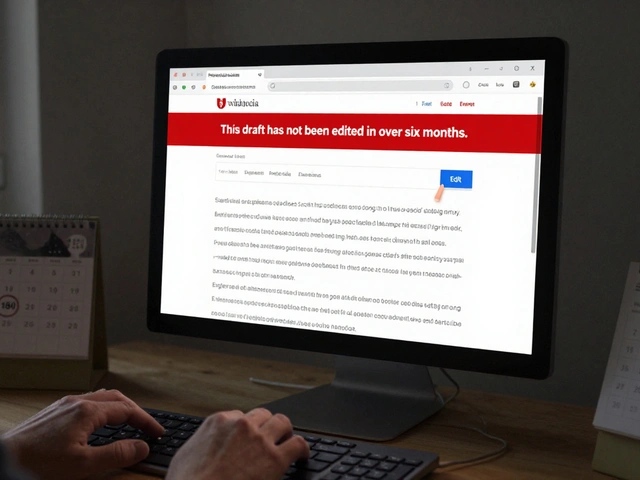

It’s not all about conflict. Academic work has helped design tools like TemplateWizard, which cuts editing errors by 80%, and CirrusSearch, which ignores popularity and ranks articles by structure and links. These aren’t magic; they’re responses to data. Researchers tracked how mobile editing changed who contributes, and how local news closures hurt coverage in developing regions. They even surveyed The Signpost’s readers and found that people wanted shorter stories about quiet editors, not just drama. That feedback was acted on. Online encyclopedia research, the field of scholarly study focused on understanding Wikipedia’s social, technical, and cultural systems, isn’t just watching Wikipedia; it’s helping it evolve.

What you’ll find below isn’t a list of random articles. It’s a curated window into how research drives real change: from how sockpuppet accounts are caught, to how AI misinformation is blocked by citation rules, to how students are now trained to improve articles as part of their coursework. These are the stories behind the numbers—the people, tools, and policies shaped by those who study Wikipedia not as a curiosity, but as a living system that needs constant care.

How Signposts Guide Academic Research on Wikipedia

Wikipedia signposts guide researchers to reliable information by flagging gaps in citations, bias, or quality. Learn how these community tools help academic work and how to use them effectively.