AI safety on Wikipedia: How open knowledge stays reliable in the age of artificial intelligence

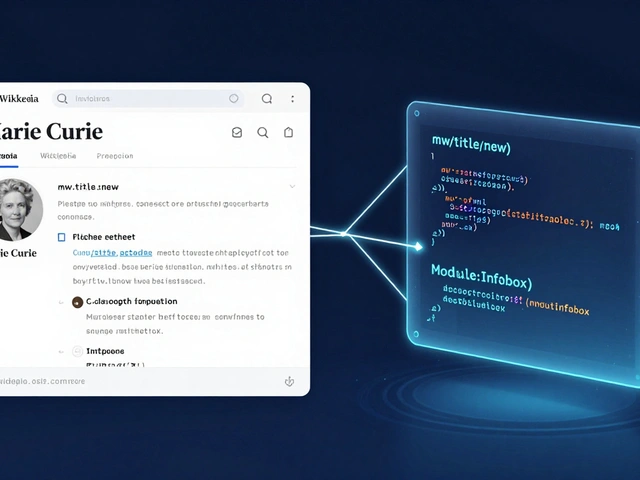

AI safety is the effort to ensure artificial intelligence systems don’t spread misinformation, reinforce bias, or undermine human oversight. Also known as AI ethics, it’s no longer just a tech industry concern: it’s a frontline issue for Wikipedia, where millions of edits every day rely on human judgment and open verification. As AI tools start writing, rewriting, and even reverting Wikipedia content, the real question isn’t whether AI can help. It is whether it can be trusted to follow Wikipedia’s rules.

The Wikimedia Foundation, the nonprofit that supports Wikipedia and its sister projects, has been quietly building defenses. They’re not blocking AI tools. Instead, they’re teaching editors how to spot AI-generated text, demanding transparency from companies that train models on Wikipedia data, and pushing for policies that require AI-assisted edits to be labeled. This isn’t theoretical. In 2024, over 12% of flagged edits on high-traffic pages showed signs of AI automation, and many were trying to sneak in biased or unverified claims. The Foundation’s AI literacy program, which trains volunteers to understand how AI works, how it learns from Wikipedia, and how to challenge its outputs, is now mandatory for new admins in several regions.

AI safety on Wikipedia isn’t just about stopping bad bots. It’s about protecting the people who make it work. Volunteers editing sensitive topics, such as political conflicts, health misinformation, or marginalized histories, are already under pressure. Now, they’re also facing AI-generated edit wars, where fake accounts powered by algorithms flood pages with conflicting edits, hoping to confuse human reviewers. The boardroom debates over artificial intelligence ethics, the set of principles guiding how AI should be used in public knowledge systems, are now playing out in talk pages and arbitration cases. And the community is fighting back, not with bans, but with better tools, clearer policies, and more training.

You’ll find posts here that show how Wikipedia’s volunteers are adapting: from using bots to catch AI-generated vandalism, to training students to identify AI-written content, to pushing for legal accountability from companies that scrape Wikipedia without permission. Some articles dive into how AI is changing who gets to edit—paid editors using AI to polish edits faster, while volunteer editors struggle to keep up. Others track how the Foundation is responding to global pressure to make AI use transparent. This isn’t about fear of technology. It’s about protecting the idea that knowledge should be built by people, for people—and that no algorithm should rewrite truth without being held to the same standards as a human.

Ethical AI in Knowledge Platforms: How to Stop Bias and Take Back Editorial Control

Ethical AI in knowledge platforms must address bias, ensure editorial control, and prioritize truth over speed. Without human oversight, AI risks erasing marginalized voices and reinforcing harmful stereotypes.