Editor Safety on Wikipedia: Protecting Volunteers Who Keep Knowledge Free

When you think of Wikipedia, you might picture someone quietly editing an article at midnight. But behind that quiet work is a reality many don’t see: editor safety, the effort to protect Wikipedia volunteers from harassment, threats, and real-world harm. These aren’t just online disputes; they’re life-altering events. Editors who write about controversial topics, such as politics, religion, abuse, or marginalized communities, often get targeted with doxxing, death threats, and coordinated abuse campaigns. The Wikimedia Foundation, the nonprofit that supports Wikipedia’s infrastructure and policy framework, has started taking this seriously, not as a side issue, but as a core part of keeping the encyclopedia alive.

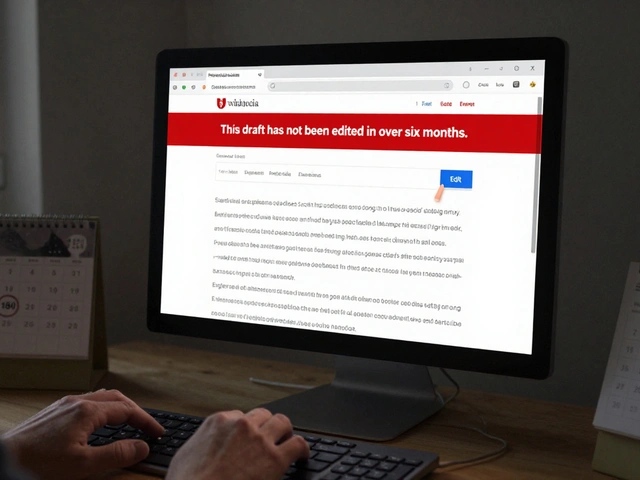

Editor safety isn’t just about blocking trolls. It’s about systems. It’s about how Wikipedia harassment, the pattern of targeted abuse against editors based on their edits or identity, is tracked, reported, and responded to. It’s about tools like confidential reporting channels, IP masking for vulnerable editors, and legal support for those facing real-world danger. It’s also about culture: the difference between a community that says "just ignore it" and one that says "we’ve got your back." Many editors have left Wikipedia because they felt unsafe. Others stay, but live in fear. That’s not sustainable. The editor protection effort, the collective policies, tools, and support structures designed to shield Wikipedia contributors from harm, is trying to change that.

This isn’t theoretical. In 2023, a Wikipedia editor in India was physically threatened after writing about a local politician. In 2022, a volunteer in the U.S. received a package containing a dead rat and a note after editing an article on a controversial religious group. These aren’t outliers; they’re signals. The people who fix broken links, update birth dates, and add citations to obscure historical events are also the ones most likely to be attacked when their work challenges power. And yet, most of these stories never make headlines. What you see in the posts below is a collection of real efforts: how volunteers are building safety nets, how the Foundation is changing policies, and how small actions, like hiding an editor’s real name or training moderators to spot abuse patterns, can make the difference between someone quitting and staying. You’ll find stories of people who fought back, systems that failed, and new tools being tested right now. This isn’t just about tech fixes. It’s about human survival. And if Wikipedia wants to keep growing, it has to make sure no one has to choose between truth and safety.

How to Handle Harassment Off-Wiki That Affects Your Wikipedia Editing

Off-wiki harassment targeting Wikipedia editors is rising. Learn how to recognize and report threats that spill beyond the site, and how to protect yourself from them, so you can keep editing safely.