Neutral Point of View on Wikipedia: How It Shapes Reliable Information

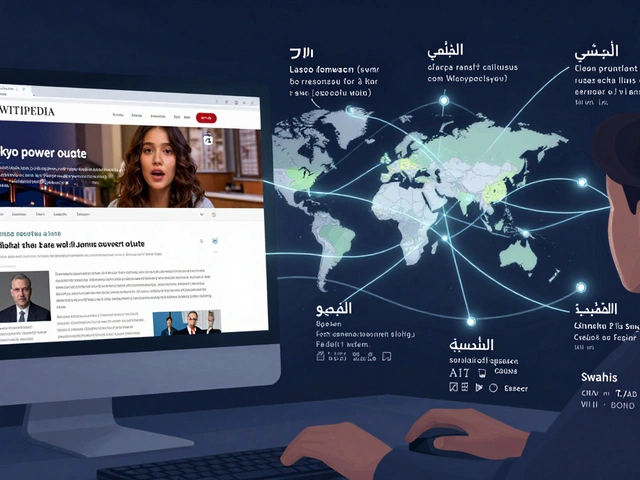

When you read a Wikipedia article, you’re not getting one person’s opinion—you’re getting a summary built on Neutral Point of View, Wikipedia’s foundational policy requiring articles to represent all significant views fairly, without bias. Also known as NPOV, it’s the reason why articles on controversial topics don’t sound like opinion pieces. This isn’t about avoiding strong claims—it’s about making sure every claim is backed by what reliable sources actually say. If a scientific consensus exists, the article says so. If there’s a minority view with credible support, it’s included too—but only in proportion to how much attention it gets in reliable sources. That’s called due weight, the principle that determines how much space each viewpoint gets based on its prominence in credible publications. You can’t give equal space to a fringe theory and a widely accepted fact just because both exist. That’s not fairness—that’s distortion.

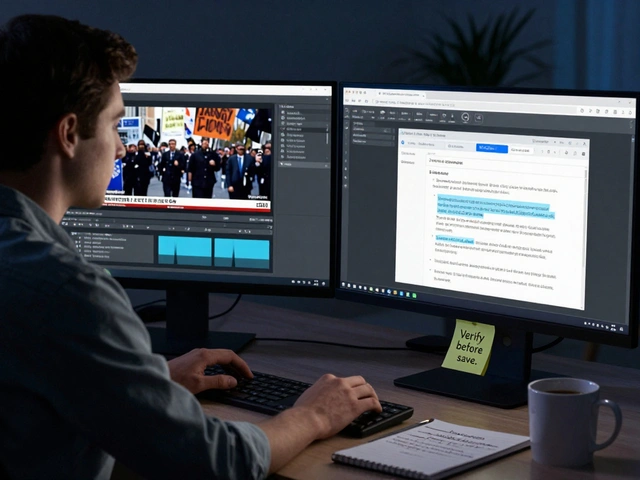

Wikipedia policies like NPOV and due weight, the mandatory rules that guide editing and dispute resolution on the platform, exist because Wikipedia has no central editor. Instead, thousands of volunteers rely on clear, consistent standards to agree on what belongs in an article. Without these rules, every edit war would turn into a shouting match. NPOV forces editors to step back from their own beliefs and ask: "What do reliable sources say?" It’s why articles on climate change, politics, or history don’t reflect the loudest voice online; they reflect what’s been published in peer-reviewed journals, major newspapers, and academic books. This is also why reliable sources, meaning published materials with editorial oversight such as academic journals and established news outlets, are so important: Wikipedia requires them to verify claims. A blog post, a YouTube video, or a tweet generally doesn’t count, even if it’s popular. The goal isn’t popularity. It’s accuracy grounded in evidence.

What you’ll find below are real stories about how NPOV works in practice: how it stops misinformation, how it handles heated debates, and how it’s being tested by AI, new voices, and shifting public trust. You’ll see how volunteers use it to fix bias, how journalists rely on it to find facts, and why even the most controversial topics on Wikipedia still feel balanced. This isn’t theory. It’s the daily work of people who care more about getting it right than being right.

How to Write Neutral Lead Sections on Contentious Wikipedia Articles

Learn how to write neutral lead sections for contentious Wikipedia articles using verified facts, proportionality, and clear sourcing, without taking sides or using loaded language.

Neutral Point of View: How Wikipedia Maintains Editorial Neutrality

Wikipedia's Neutral Point of View policy ensures articles present facts and viewpoints fairly, based on reliable sources. It’s the backbone of trust on the world’s largest encyclopedia.

Controversial Policy Debates Shaping Wikipedia Today

Wikipedia's policy debates over neutrality, notability, paid editing, and AI are reshaping how knowledge is curated, and who gets to decide. These conflicts reveal deep tensions between global inclusion and Western-dominated governance.

What Neutral Coverage Means for Polarized Topics on Wikipedia

Wikipedia’s neutral coverage doesn’t ignore controversy; it documents it fairly. Learn how the platform balances polarized topics with facts, sources, and transparency, and why it still works in a divided world.