Reliable AI Sources: What Makes an AI-Generated Source Trustworthy on Wikipedia

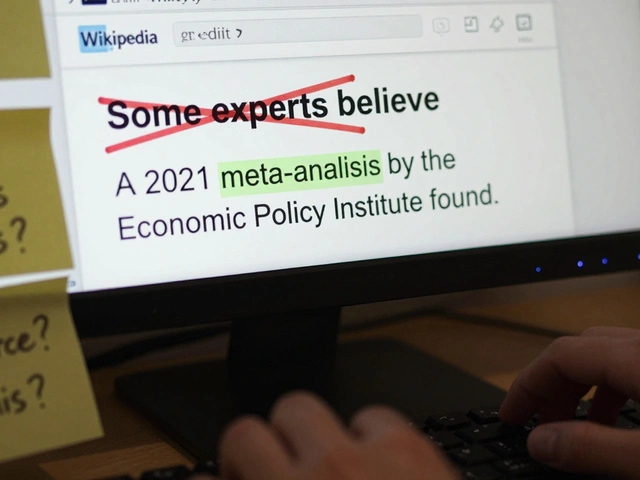

When we talk about reliable AI sources, we mean AI-generated content that meets Wikipedia’s standards for verifiability and neutrality. For these sources, also known as AI-assisted references, it’s not enough for an AI to spit out a fact; the fact needs to trace back to a human-edited, publicly verifiable source. Wikipedia doesn’t accept AI summaries, generated text, or chatbot answers as direct citations. Why? Because AI doesn’t understand context, bias, or nuance. It repeats patterns, and sometimes it repeats lies.

What does count? Secondary sources such as published books, peer-reviewed journals, and reputable news outlets that have been reviewed by humans. Even if an AI pulls a quote from The New York Times or The Lancet, the source is still the newspaper or journal, not the AI. The AI is just a search tool. That’s why the Wikimedia Foundation, the nonprofit that runs Wikipedia and its sister projects, is pushing for AI literacy among editors. They’re not trying to ban AI; they’re teaching people how to use it without letting it rewrite history.

Some AI tools claim to be "encyclopedic," but surveys show people still trust Wikipedia more. Why? Because Wikipedia shows its work. Every claim has a link to a real source. AI systems often hide their sources or, worse, invent them. That’s why Wikipedia’s policy on AI ethics, the responsible use of artificial intelligence in knowledge systems, focuses on transparency, not speed. An AI can answer a question in two seconds. But if it gets the answer wrong, and no one can check where it came from, the damage lasts forever.

You’ll find posts here that dig into how Wikipedia editors spot AI-generated misinformation, how the Foundation is lobbying tech companies to credit open knowledge, and why even the best AI can’t replace a human who knows how to track down a 1987 government report buried in an archive. You’ll also see how volunteers use annotated bibliographies and watchlists to keep AI noise out of articles. This isn’t about rejecting technology—it’s about protecting the integrity of knowledge. If you edit Wikipedia, or just care about what you read online, understanding what makes an AI source reliable isn’t optional. It’s the line between truth and noise.

How Wikipedia’s Sourcing Standards Fix AI Misinformation

AI often generates false information because it lacks reliable sourcing. Wikipedia’s strict citation standards offer a proven model to fix this by requiring verifiable sources, not just confident-sounding guesses.

Source Lists in AI Encyclopedias: How Citations Appear vs What’s Actually Verified

AI encyclopedias show citations that look credible, but many don't actually support the claims. Learn how source lists are generated, why they're often misleading, and how to verify what's real.