Wikimedia Foundation

When you use Wikipedia, you’re relying on the Wikimedia Foundation (WMF), the non-profit organization that operates Wikipedia and its sister projects. The Foundation doesn’t write articles. It keeps the servers running, pays for legal defense, and supports tools that help volunteers edit safely and efficiently. Its job is simple in theory: make sure free knowledge stays online, accessible, and free from censorship. But behind that simplicity are complex decisions about money, power, and who gets to shape what the world knows.

Wikimedia Enterprise, a commercial service that sells high-volume access to Wikipedia data to big companies, is one of the biggest shifts in the Foundation’s history. It brings in millions of dollars, but many volunteers worry it creates a two-tier system in which corporations get fast, paid access while editors still struggle with outdated tools. Then there’s Wikidata, a structured database that connects facts across all language versions of Wikipedia. It’s the quiet engine that lets you search for a scientist in English and see their birthplace in Hindi, Arabic, or Swahili, with every version drawing on a fact that is updated in one place. Without Wikidata, Wikipedia would be a collection of isolated pages, not a global knowledge graph.

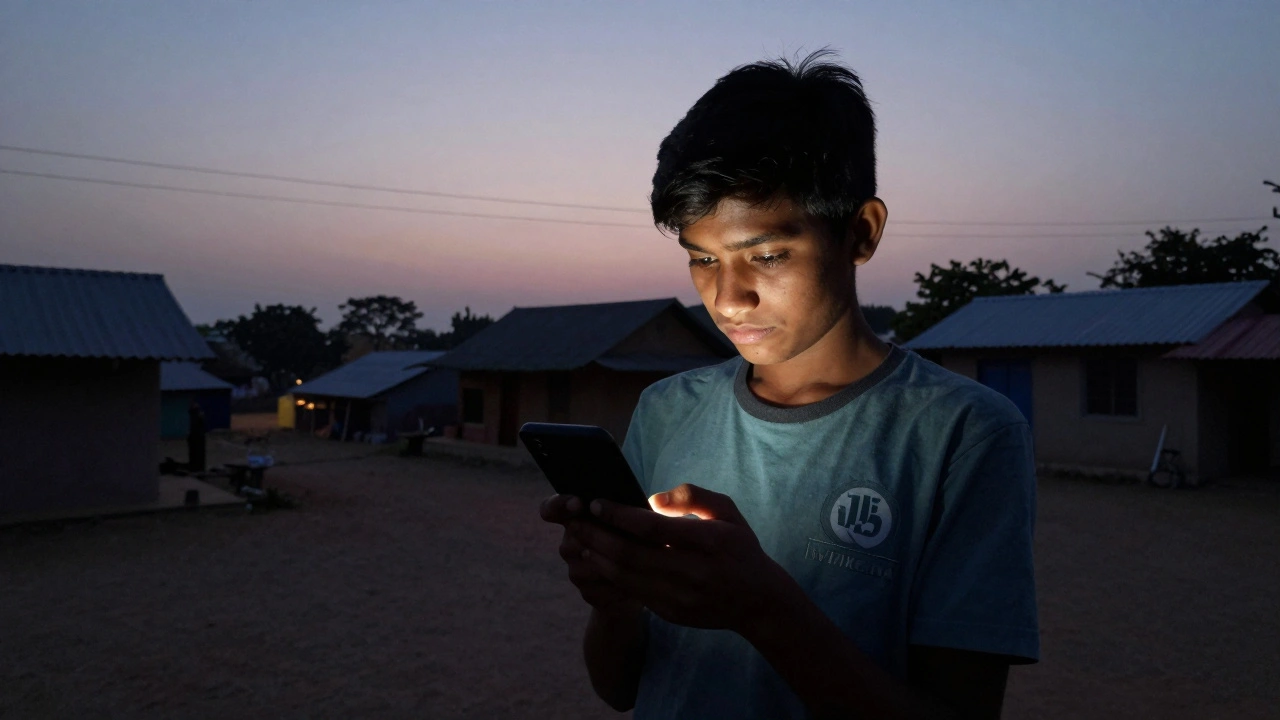

And then there are the people. The Wikimedia Foundation doesn’t employ most of the editors who fix typos, fight vandalism, or write deep articles on climate science or Indigenous history. Those are volunteers — tens of thousands of them — working in their spare time, often under pressure from harassment, burnout, or legal threats. The Foundation tries to protect them with safety policies and legal aid, but the gap between its resources and the scale of the problem keeps growing. When a journalist uses Wikipedia to fact-check a story, or a student in a rural town finds their hometown’s history documented for the first time, they’re benefiting from a system built by unpaid people, supported by a non-profit that’s always one funding cycle away from hard choices.

What you’ll find in the posts below isn’t just news about the Foundation — it’s the real-world ripple effects of its decisions. From how copyright takedowns erase local history, to how AI tools are changing editing workflows, to how volunteers are fighting bias in coverage of Indigenous communities — every story ties back to who holds power, who gets heard, and what happens when free knowledge meets corporate interests, political pressure, and human limits. This isn’t about corporate press releases. It’s about what happens when a global encyclopedia is run by volunteers, funded by donations, and watched by billions.

Executive Appointments at Wikimedia Foundation: New Leadership Takes Charge

Wikimedia Foundation appoints Erik Möller as new CEO, marking a shift toward community-led leadership after Maryana Iskander's departure. Learn how this change impacts Wikipedia's future, AI policies, and global representation.

How Wikimedia Foundation Supports Smaller Language Communities on Wikipedia

The Wikimedia Foundation supports hundreds of small-language Wikipedias through grants, translation tools, and community training, helping preserve languages that tech companies ignore.

Wikimedia Foundation Budget Announcement and Spending Priorities for 2026

The Wikimedia Foundation's 2026 budget prioritizes infrastructure, global access, and editor tools over growth. With 7 million donors and no ads, it's focused on keeping Wikipedia fast, free, and reliable for everyone.

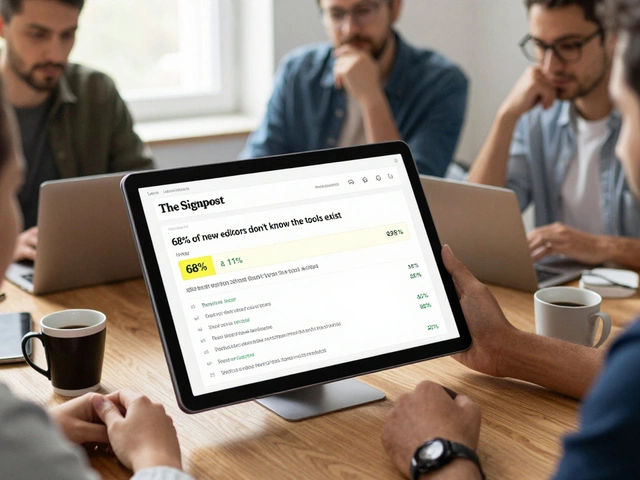

How to Cross-Post Signpost Stories to Wikimedia Diff: Best Practices

Learn how to properly cross-post Signpost stories to Wikimedia Diff with clear guidelines, formatting rules, and submission tips to reach a global audience and support transparency in Wikipedia's community.

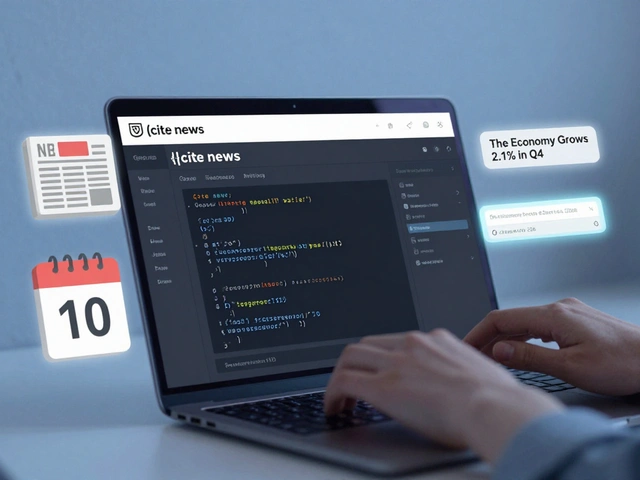

Wikimedia Foundation Legal Updates Affecting Wikipedia Editors

New legal updates from the Wikimedia Foundation shift responsibility to Wikipedia editors, requiring careful sourcing and legal awareness. Editors must now use new tools and follow stricter rules to stay protected.

The Evolution of Wikipedia's Copyright Policies and Licensing

Wikipedia’s shift from GFDL to Creative Commons licensing transformed how global knowledge is shared. Learn how its copyright policies evolved to support free reuse, community enforcement, and AI-era challenges.

How Wikimedia Raises Money to Keep Wikipedia Free and Online

Wikipedia stays free thanks to millions of small donations. Learn how Wikimedia raises money, where it goes, and why this model works better than ads or subscriptions.

How the Wikimedia Foundation Is Tackling AI and Copyright Challenges

The Wikimedia Foundation is fighting to protect Wikipedia's open knowledge from being exploited by AI companies. They're enforcing copyright rules, building detection tools, and pushing for legal change to ensure AI gives credit where it's due.

Notable Press Releases From Wikimedia Foundation: A Historical Review

A historical review of key Wikimedia Foundation press releases that shaped Wikipedia's role in defending open knowledge, fighting censorship, and combating misinformation since 2005.

Privacy Policy: How Wikipedia Protects Editor Information

Wikipedia protects editor privacy by hiding IP addresses, avoiding tracking, and allowing anonymous edits. No personal data is collected unless you create an account, and even then, your real identity stays secret.

The Sister Projects Task Force: Wikimedia Foundation's Review of Wikinews

Wikinews, the Wikimedia Foundation's volunteer-run news site, underwent a major review in 2025. The Sister Projects Task Force found declining participation but strong value among educators and researchers, leading to new tools, training, and language support to ensure its survival.

How CentralNotice Banners on Wikipedia Are Approved and Governed

Wikipedia’s CentralNotice banners are carefully approved to maintain neutrality and trust. Learn how fundraising and policy messages are reviewed, who controls them, and why commercial or biased content is never allowed.