Wikipedia inclusion programs: How communities build fair, accurate knowledge

When we talk about Wikipedia inclusion programs, we mean structured efforts by volunteers and organizations to expand coverage of underrepresented topics and voices on Wikipedia. Also known as content equity initiatives, these programs keep Wikipedia from becoming a mirror of only the most popular or dominant narratives. The work is not just about adding more articles. It is about changing who gets represented, how they are described, and whose sources are trusted.

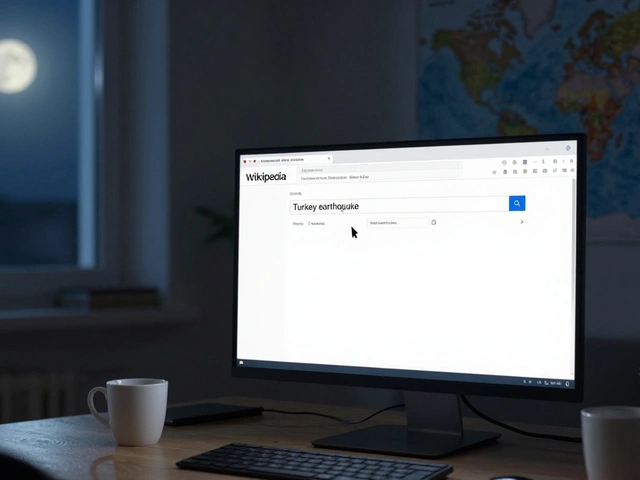

These programs rely on WikiProjects, volunteer-led teams focused on specific topics such as medicine, history, or Indigenous cultures, to dig into coverage gaps. For example, the Indigenous peoples task force doesn’t just add biographies; it trains editors to cite oral histories, challenge colonial language, and prioritize sources from the communities themselves. Meanwhile, due weight, Wikipedia’s rule that viewpoints be represented in proportion to their prominence in reliable sources, stops editors from giving equal space to fringe ideas just because they’re loud. And behind it all, the Wikimedia Foundation, the nonprofit that funds and maintains Wikipedia’s infrastructure, quietly backs these efforts with grants, training, and tools such as Wikidata, which helps keep facts consistent across more than 300 language versions.

These aren’t abstract ideals. They’re daily work: copy editors clearing backlog articles, volunteers fact-checking disaster coverage, editors defending sources against copyright takedowns that erase vital context. Inclusion isn’t a one-time campaign—it’s a habit. It’s choosing to cite a local scholar over a foreign journalist. It’s using the watchlist to catch biased edits before they spread. It’s building annotated bibliographies so new editors don’t have to start from scratch. You won’t see headlines about this work, but you’ll feel it every time you read a Wikipedia article that feels complete, balanced, and trustworthy.

Below, you’ll find real stories from the front lines—how editors are fixing systemic bias, what happens when AI tries to replace human judgment, and how a quiet November drive cleared over 12,000 articles stuck in the editing queue. This is what inclusion looks like when it’s not performative—it’s persistent, messy, and deeply human.

Women and Non-Binary Editors: Programs That Work on Wikipedia

Women and non-binary editors are transforming Wikipedia through targeted programs that build community, reduce bias, and expand knowledge. Learn which initiatives are making real change, and how you can help.