Wikipedia policy: How rules keep the encyclopedia reliable and fair

When you edit a Wikipedia article, you’re not just adding facts: you’re working within Wikipedia policy, the set of core rules that govern how content is created, edited, and verified on the platform. These rules are what keep the world’s largest encyclopedia from falling into chaos. Unlike most publications, Wikipedia doesn’t rely on a paid editorial staff or corporate oversight. Instead, it runs on shared rules, open debate, and a deep commitment to neutrality. Without these policies, anyone could insert false claims, promotional content, or biased opinions, and the whole project would collapse.

What makes Wikipedia policy different is how it’s layered. At the top are policies: binding rules that all editors must follow, such as "No original research" and "Neutral point of view". Below them are guidelines, which help editors apply policies in real situations, such as choosing reliable sources or handling edit disputes. Then there are essays, which are personal opinions and don’t count as rules at all. Knowing the difference matters. Break a policy, and your edit will likely be reverted. Ignore a guideline, and you may miss a better way to make your point. This structure keeps things flexible without making them unpredictable. It’s why a student in Nairobi and a retired professor in Toronto can edit the same page and still end up with something accurate.

Behind every policy is a community effort. Consensus building, in which editors discuss disputed changes and work toward agreement, is the engine that makes Wikipedia policy work. There’s no boss deciding what’s right. Instead, people argue, cite sources, and find middle ground. That’s why some articles change slowly: they’re not being ignored, they’re being carefully reviewed. And when things get heated, the verifiability policy and the reliable sources guideline, which require information to come from published, credible outlets, act as the tiebreaker. No matter how convincing your argument sounds, if it’s not backed by a reliable source, it doesn’t belong on Wikipedia.

This system isn’t perfect. It’s messy, slow, and sometimes frustrating. But it’s also a big reason many readers still trust Wikipedia more than AI-generated encyclopedias. People don’t just trust the content; they trust the process. That’s why the posts below dive into how policies shape everything: from how AI edits are handled, to how Indigenous knowledge gets included, to how volunteers fight copyright takedowns that erase real history. You’ll see that policy isn’t just about blocking edits; it’s about protecting knowledge itself.

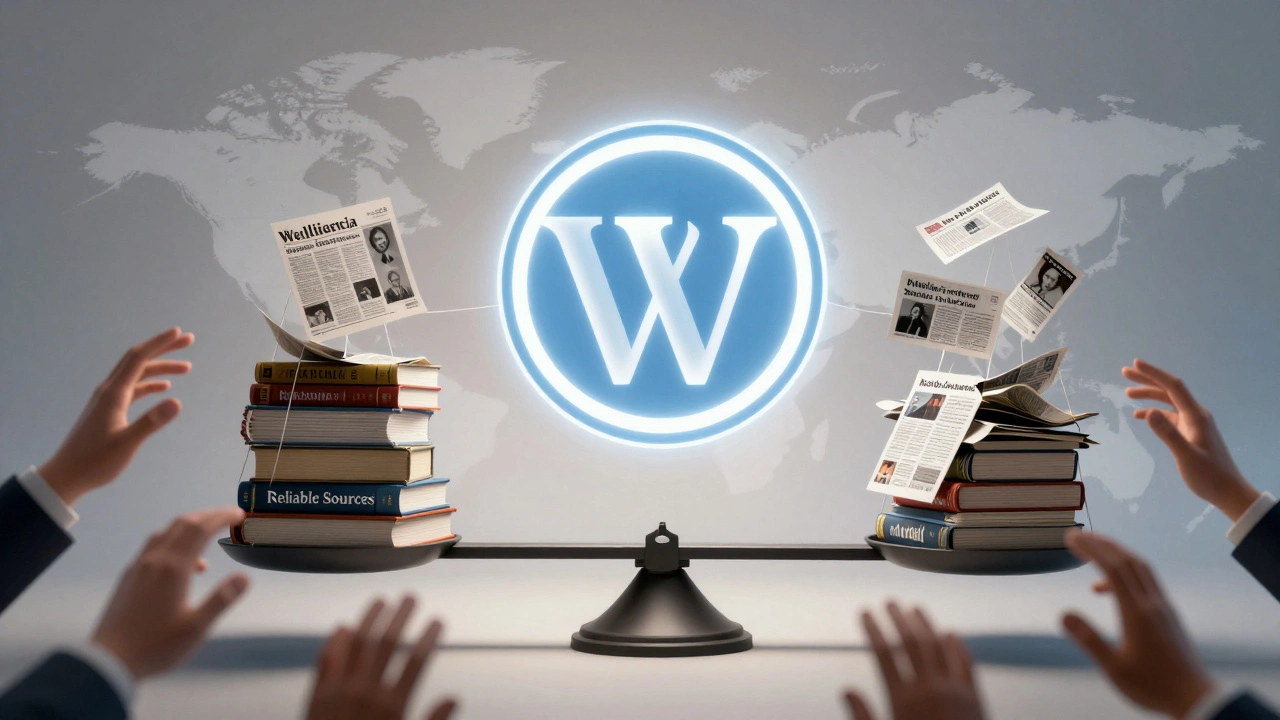

How Wikipedia Handles Official Statements vs. Investigative Reporting Sources

Wikipedia doesn't decide truth; it shows you where facts come from. Learn how it weighs official statements against investigative journalism to build accurate, transparent entries.

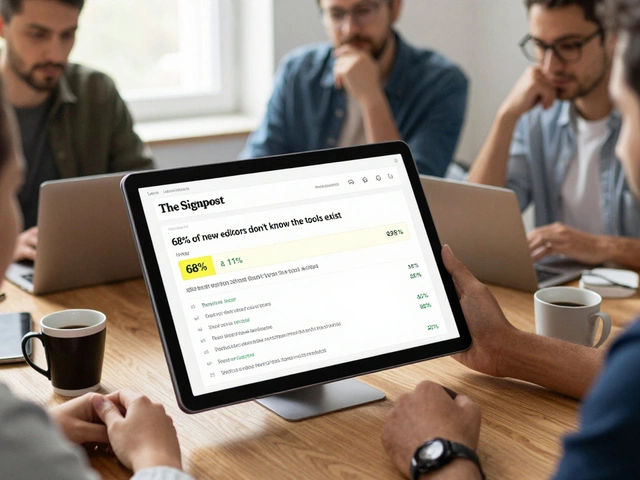

Current Wikipedia Requests for Comment Discussions Roundup

Wikipedia's community-driven decision-making through Requests for Comment shapes how content is created and moderated. Current RfCs are tackling bias, bot edits, institutional influence, and global representation.

Wikipedia Protection Policy: When and How Pages Are Protected

Wikipedia protects pages to prevent vandalism and misinformation. Learn how and why articles get semi-protected, extended-confirmed protected, or fully protected, and what you can do if you can't edit a locked page.

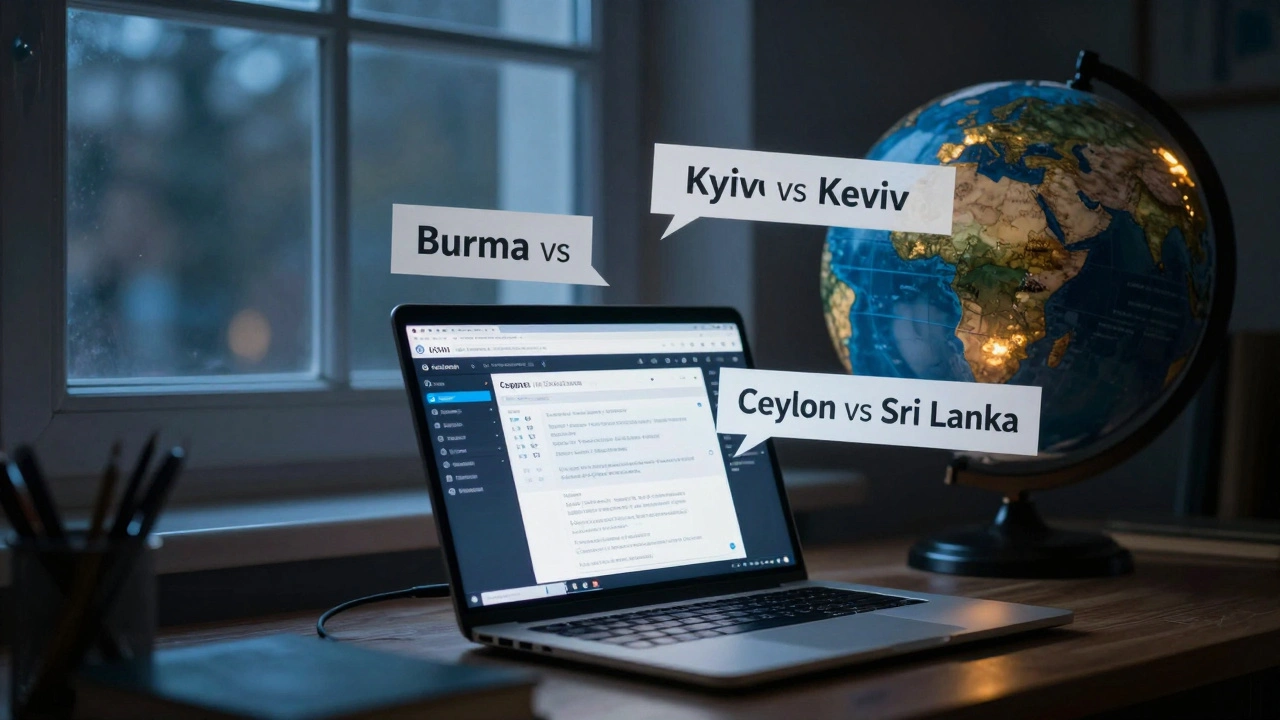

Naming Controversies on Wikipedia: Place Names, Titles, and Bias

Wikipedia's naming rules for places and people often reflect political power, not just language. From Kyiv to Taiwan, how names are chosen reveals deeper biases and who gets to decide.

How to Build a Newsroom Policy for Wikipedia Use and Citation

A clear policy for using Wikipedia in journalism helps prevent misinformation. Learn how to train reporters, verify sources, and avoid citing Wikipedia directly in published stories.

How to Detect and Remove Original Research on Wikipedia

Learn how to identify and remove original research on Wikipedia, which is banned by one of the key policies that keep the encyclopedia reliable. Understand what counts as unsourced analysis and how to fix it without breaking community rules.

When Wikipedia Allows Self-Published Sources and Why It Rarely Does

Wikipedia rarely accepts self-published sources because they lack independent verification. Learn when exceptions are made and why reliable, third-party sources are required to maintain accuracy and trust.

How Wikipedia Handles Retractions and Corrections in Cited Sources

Wikipedia updates its articles when cited sources are retracted or corrected, relying on community vigilance and strict sourcing policies to keep information accurate and transparent.

How Wikipedia Administrators Evaluate Unblock Requests

Wikipedia administrators must carefully evaluate unblock requests by reviewing user history, assessing genuine change, and applying policy with fairness. Learn how to distinguish between sincere apologies and repeated disruption.

How to Handle Rumors and Unconfirmed Reports on Wikipedia

Learn how Wikipedia handles rumors and unconfirmed reports, why they're removed quickly, and how you can help prevent false information from spreading on the world's largest encyclopedia.

Source Reliability Tables: How to Evaluate Outlets for Wikipedia

Learn how Wikipedia editors evaluate sources using reliability tables to ensure accuracy. Understand what makes a source trustworthy and how to spot unreliable claims.

Neutral Point of View: How Wikipedia Maintains Editorial Neutrality

Wikipedia's Neutral Point of View policy requires articles to present facts and significant viewpoints fairly and in proportion to their coverage in reliable sources. It’s the backbone of trust on the world’s largest encyclopedia.