AI Encyclopedias: How AI Is Changing Wikipedia and What It Means for Knowledge

AI encyclopedias are encyclopedias that use artificial intelligence to generate, edit, or moderate content; they are also known as automated knowledge systems. They are no longer science fiction: Wikipedia is already using AI tools to flag vandalism, suggest edits, and even summarize complex topics. But the real question isn’t whether AI can help. It’s whether it should be writing history.

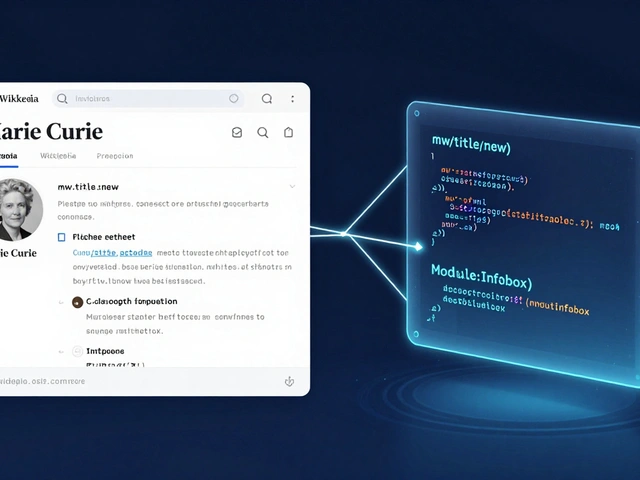

Behind the scenes, Wikipedia AI, the automated systems deployed by the Wikimedia Foundation to assist editors (also known as bots and AI assistants), helps clean up spam, fix broken links, and translate articles across languages. But these tools aren’t neutral. They learn from existing content, and if that content is biased, incomplete, or outdated, the AI will copy and amplify those flaws. That’s why AI bias in knowledge, the tendency of AI systems to reproduce systemic inequalities in information (also known as algorithmic discrimination in encyclopedias), is one of the biggest threats to Wikipedia’s goal of free, accurate knowledge for everyone.

The Wikimedia Foundation (WMF), the nonprofit that runs Wikipedia and its sister projects, is caught in the middle. On one hand, it’s building AI literacy programs to help editors understand how these tools work. On the other, it’s pushing back against big tech companies that scrape Wikipedia data to train their own AI models without giving back, without credit, and without transparency. This isn’t just about copyright. It’s about who controls the world’s collective memory.

Some AI tools are already editing articles before most human editors even notice. Others are quietly removing content flagged by copyright bots—sometimes deleting valuable historical records in the process. Meanwhile, volunteer editors are fighting to keep marginalized voices from being erased by algorithms trained mostly on English-language, Western sources. You can’t fix bias by adding more data—you have to fix the system that decides what data matters.

What you’ll find below isn’t a list of tech specs or hype. It’s a real look at how AI is changing Wikipedia—from the bots that clean up edits to the policy battles over who gets to decide what’s true. You’ll read about volunteer efforts to fix biased coverage, how AI is used to track vandalism, why some edits get reverted before a human even sees them, and what happens when a machine tries to summarize Indigenous history or climate science without context. These aren’t hypotheticals. They’re happening right now, on a site millions rely on every day.

What AI Hallucination Means for Encyclopedia Reliability

AI hallucinations are making online encyclopedias dangerously unreliable. Learn how false facts are generated, why they spread, and how to protect yourself from being misled by AI that sounds smart but isn't truthful.

Comparing Wikidata Integration in Wikipedia and AI Encyclopedias

Wikipedia uses Wikidata for structured, community-verified facts. AI encyclopedias rely on proprietary knowledge graphs. Learn how they differ in accuracy, updates, and trustworthiness.

Public Perception of Wikipedia vs Emerging AI Encyclopedias in Surveys

Surveys show people still trust Wikipedia more than AI encyclopedias for accurate information, despite faster AI answers. Transparency, source verification, and human editing keep Wikipedia ahead.

Source Lists in AI Encyclopedias: How Citations Appear vs What’s Actually Verified

AI encyclopedias show citations that look credible, but many don't actually support the claims. Learn how source lists are generated, why they're often misleading, and how to verify what's real.