Editorial Control on Wikipedia: How Decisions Are Made and Who Really Holds Power

Editorial control is the collective process by which Wikipedia decides what stays, what gets changed, and who gets to decide. Also known as content governance, it isn’t a single person or team making calls. It’s a messy, loud, often frustrating system of rules, votes, and hidden power plays. This isn’t like a newspaper, where an editor signs off on a story. On Wikipedia, editorial control is distributed across thousands of volunteers, automated bots, and a handful of elected arbitrators. The system is designed to be open, but in practice it’s shaped by who shows up, who stays quiet, and who’s willing to fight for weeks over a single word.

Behind every article you read, there’s a quiet war over Wikipedia governance: the formal and informal structures that determine how content is managed and how conflicts are resolved. Some battles are public, like the annual ArbCom election, in which volunteers vote for the arbitrators who settle the most heated disputes. Others happen in the shadows, like sockpuppetry, the use of fake accounts to manipulate edits and manufacture false consensus. These aren’t theoretical problems. They’ve derailed articles on politics, science, and even celebrity biographies. And while bots handle routine cleanup, the real power lies with whoever can sway discussion on talk pages, block users, or push policy changes through.

Editorial control isn’t about who edits the most. It’s about who understands the unwritten rules. A new editor might fix a typo and get reverted. A veteran might rewrite half a page using obscure policy language and have that version locked in place. That’s because editorial control on Wikipedia depends less on truth than on consensus, persistence, and knowing where to file a complaint. The people who win these battles aren’t always the most knowledgeable. They’re the ones who show up day after day, who know which policy to cite, and who aren’t afraid to drag a dispute into the spotlight.

What you’ll find in this collection isn’t just a list of articles. It’s a map of where power really lives on Wikipedia. These posts show the real mechanics behind the scenes, from how community surveys shape The Signpost’s coverage, to how grants empower underrepresented editors, to how AI is changing the game. You’ll see how policies are born, how fake accounts get caught, and why some topics vanish while others grow. This isn’t theory. This is how knowledge gets made, and sometimes unmade, on the world’s largest encyclopedia.

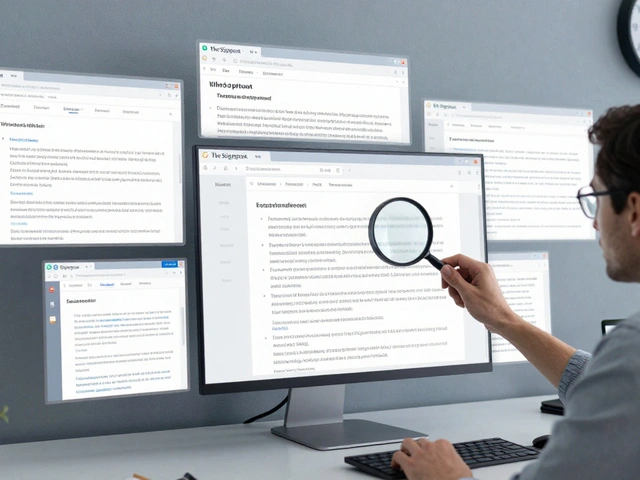

Ethical AI in Knowledge Platforms: How to Stop Bias and Take Back Editorial Control

Ethical AI in knowledge platforms must address bias, ensure editorial control, and prioritize truth over speed. Without human oversight, AI risks erasing marginalized voices and reinforcing harmful stereotypes.