Online Source Verification: How to Check What’s Real on Wikipedia and Beyond

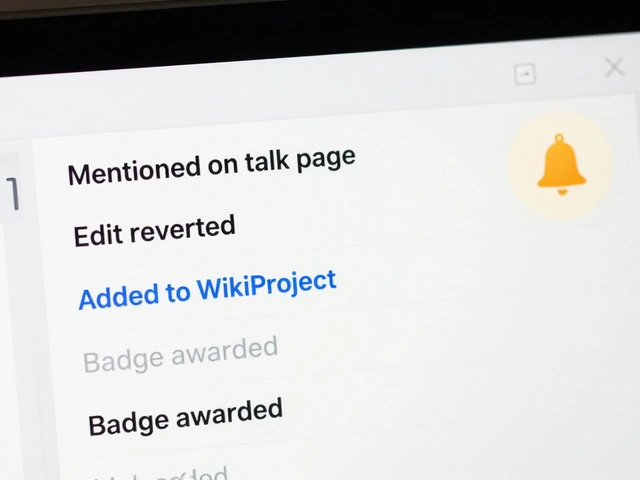

When you’re trying to figure out whether something you read online is true, you’re doing online source verification: the process of tracing claims back to their original evidence to confirm accuracy and credibility. Also known as fact-checking, it isn’t just for journalists; it’s something every reader should know how to do. Wikipedia doesn’t allow its own articles to be cited as sources for a claim, but it’s one of the best tools you have for finding real sources. That’s because a well-sourced Wikipedia edit is backed by a reliable source: a published, authoritative reference such as an academic journal, a book, or a trusted news outlet with editorial oversight. Blogs, social media posts, and press releases generally don’t qualify; reliable sources are ones that can be independently checked.

What makes secondary sources so important? These are interpretations or analyses of primary material, written by experts who were not involved in the original event and did not produce the original data. They matter because they filter noise. A news report quoting a study is a secondary source; the study itself is primary. Wikipedia prefers secondary sources because they’ve already been reviewed, summarized, and placed in context. AI tools might spit out a citation that looks legit, but if the source doesn’t actually say what the AI claims, you’ve been misled. That’s why human editors on Wikipedia spend hours checking whether a quote matches the original text or whether a study was misrepresented. This isn’t bureaucracy. It’s protection.
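For readers who want to automate the first pass of that quote check, here is a minimal Python sketch. It assumes you have already copied the source’s text into a string; the function names `normalize` and `quote_appears_in_source` are illustrative, not part of any Wikipedia tool. It only tests for an exact match after smoothing out case, whitespace, and curly punctuation, so it cannot tell you whether a quote was taken out of context.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """Lowercase, unify curly quotes and dashes, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    replacements = {
        "\u2018": "'", "\u2019": "'",   # curly single quotes
        "\u201c": '"', "\u201d": '"',   # curly double quotes
        "\u2013": "-", "\u2014": "-",   # en and em dashes
    }
    for fancy, plain in replacements.items():
        text = text.replace(fancy, plain)
    # Line breaks and repeated spaces often differ between copies of a text.
    return re.sub(r"\s+", " ", text).strip().lower()


def quote_appears_in_source(quote: str, source_text: str) -> bool:
    """Return True if the normalized quote is a substring of the normalized source."""
    return normalize(quote) in normalize(source_text)


if __name__ == "__main__":
    # Hypothetical source text, invented for illustration only.
    source = (
        "The trial found  a 12% reduction\nin symptoms, but the authors "
        "caution that the sample was small."
    )
    print(quote_appears_in_source("a 12% reduction in symptoms", source))
    print(quote_appears_in_source("a 21% reduction in symptoms", source))
```

A match here means only that the words appear in the source. A human still has to read the surrounding passage to confirm the quote means what the citing text says it means.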

Online source verification isn’t just about avoiding fake news. It’s about fixing gaps in knowledge. When Wikipedia editors add information about Indigenous history, medical research, or local politics, they don’t guess. They find sources that were overlooked before. Task forces and volunteer groups work to bring in sources from underrepresented communities, not just because it’s fair, but because truth needs multiple perspectives. If you’ve ever seen a Wikipedia article that felt balanced and thorough, that’s the result of someone verifying sources across languages, regions, and disciplines.

And it’s not just Wikipedia. The same rules apply when you’re reading an AI-generated summary, a news site, or even a textbook. Who wrote it? Where did they get their facts? Are those sources still available? Can you find them yourself? If you can’t trace it back, it’s not proof—it’s noise. The tools are free. The skills are learnable. And the stakes? Higher than ever.

Below, you’ll find real examples from Wikipedia’s front lines: how journalists use it to find verified facts, how volunteers fight misinformation, how AI messes up citations, and why some of the most trusted pages on the internet are built by people who refuse to accept anything without proof.

Wikipedia Talk Pages as Windows Into Controversy for Journalists

Wikipedia Talk pages reveal the hidden battles over facts, bias, and influence behind controversial topics. Journalists who learn to read them uncover leads, detect misinformation, and understand how narratives are shaped, often before those narratives hit the headlines.