Wikipedia AI: How Artificial Intelligence Is Changing the Encyclopedia

Wikipedia AI refers to the use of artificial intelligence tools to interact with, analyze, or generate content based on Wikipedia’s open knowledge base. Also known as AI and Wikipedia, it’s not just about chatbots summarizing articles; it’s about who controls the knowledge behind the answers. The Wikimedia Foundation, the nonprofit that supports Wikipedia and its sister projects, is stepping in because AI companies are scraping Wikipedia without permission, using its content to train models, and then presenting answers that look right but have no clear source. This isn’t just unfair; it’s dangerous. If AI systems learn from Wikipedia but don’t credit it, or worse, distort its facts, the world’s largest free encyclopedia becomes invisible fuel for misinformation.

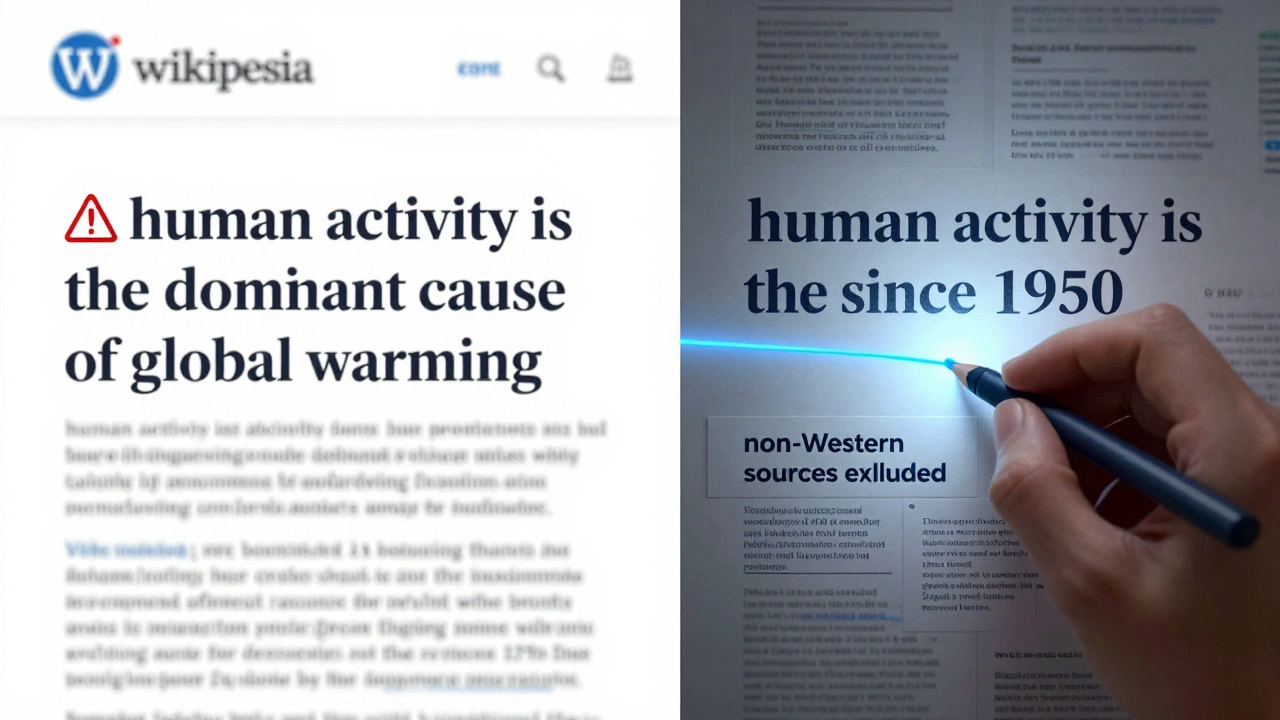

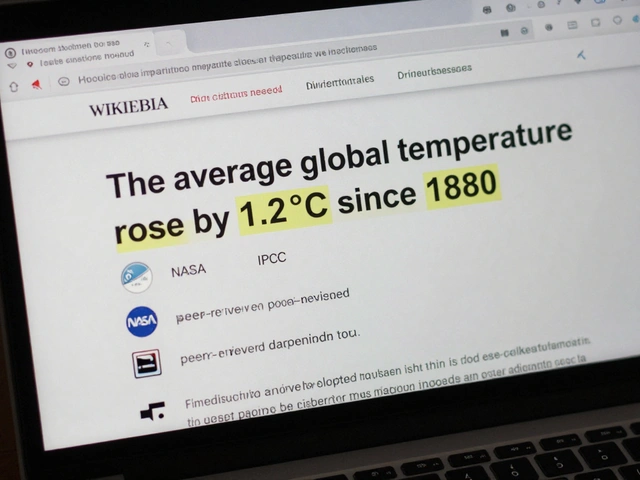

That’s why AI literacy, the ability to understand how AI systems work, where their data comes from, and how to spot when they’re wrong, is now a core part of the Foundation’s mission. They’re not asking for special treatment; they’re asking for honesty. AI companies need to disclose when they use Wikipedia data, and they need to make sure their outputs reflect Wikipedia’s standards: verifiable, neutral, and sourced. Meanwhile, AI encyclopedias, automated knowledge platforms that generate answers without human oversight, are popping up everywhere, promising fast results. But surveys show people still trust Wikipedia more, because they know who wrote it, how it’s checked, and what sources back it up. AI encyclopedias often show fake citations or misrepresent sources. Wikipedia doesn’t.

The fight isn’t just about tech; it’s about values. Open knowledge means anyone can edit, but it also means anyone can build on it. The Wikimedia Foundation wants AI to respect that. They’re pushing for policies that protect Wikipedia’s integrity, training volunteers to spot AI-generated vandalism, and helping editors understand how AI tools can assist without replacing human judgment.

You’ll find posts here that explain how AI literacy programs are being rolled out, how AI encyclopedias mislead users with fake citations, and why Wikipedia’s human-driven model still wins in trust and accuracy. You’ll also see how the Foundation’s tech team handles AI-related infrastructure changes, how volunteers are adapting their editing habits, and what’s really behind the scenes when an AI scrapes a Wikipedia page. This isn’t science fiction. It’s happening now. And you need to know how it affects what you read, who gets credited, and whether the world’s knowledge stays free or becomes locked behind corporate walls.

Designing Explainability for AI Edits in Collaborative Encyclopedias

AI is quietly editing Wikipedia and other collaborative encyclopedias, but without explanations, these changes erode trust. Learn how transparent AI edits can preserve accuracy and public confidence in shared knowledge.

Human-in-the-Loop Workflows: How Real Editors Keep Wikipedia Accurate Amid AI Suggestions

Human-in-the-loop workflows keep Wikipedia accurate by combining AI efficiency with human judgment. Editors review AI suggestions, ensuring neutrality, sources, and consensus guide every change.

How the Wikimedia Foundation Is Tackling AI and Copyright Challenges

The Wikimedia Foundation is fighting to protect Wikipedia's open knowledge from being exploited by AI companies. They're enforcing copyright rules, building detection tools, and pushing for legal change to ensure AI gives credit where it's due.

AI as Editor-in-Chief: Risks of Algorithmic Control in Encyclopedias

AI is increasingly used to edit encyclopedias like Wikipedia, but algorithmic control risks erasing marginalized knowledge and freezing bias into the record. Human oversight is still essential.