Wikipedia governance: How volunteers, policies, and tools shape the world's largest encyclopedia

Wikipedia governance is the system of rules, roles, and processes that guide how content is created and maintained on Wikipedia. Also known as Wikipedia community governance, it isn't run by a board of executives or an algorithm. It's held together by volunteers who follow written policies, debate on talk pages, and vote on changes. Unlike corporate platforms, Wikipedia doesn't have ads, paid content teams, or corporate owners. Its structure relies on Wikipedia policies, mandatory rules that editors must follow to ensure neutrality, reliability, and consistency, and on volunteer editors, a global network of unpaid contributors who monitor, edit, and defend the encyclopedia. The policies aren't just suggestions: they're enforced through edit reverts, warnings, blocks, and even formal arbitration.

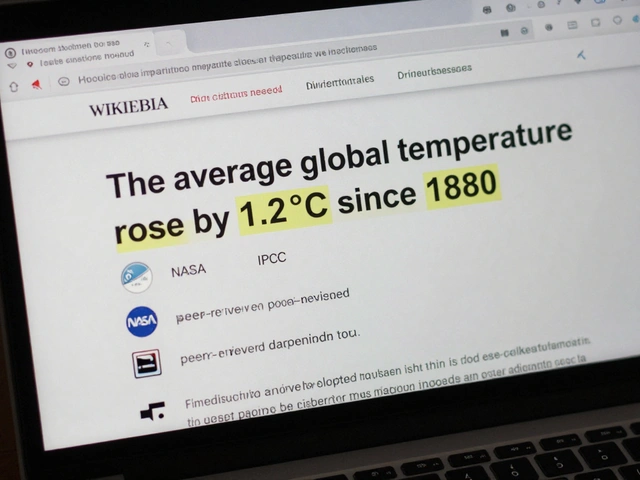

How does this system stay stable? It’s built on layers: Wikipedia governance starts with core policies like Neutral Point of View and Verifiability, then moves to guidelines that give advice, and finally to essays that reflect community opinion. Tools like watchlists and talk pages let editors track changes and resolve disputes without top-down control. Meanwhile, the Wikimedia Foundation, the nonprofit that provides technical infrastructure and legal support for Wikipedia but doesn't control content, stays out of editorial decisions. This separation is key. The Foundation runs the servers and handles copyright takedowns, but it doesn’t decide whether an article about climate change should mention the 97% consensus, or whether a local history page should be deleted. That’s up to the editors.

Real governance happens in the quiet spaces: in the back-and-forth on a talk page, in the careful sourcing of a disputed fact, in the volunteer who spends hours undoing vandalism from a botnet. It’s not glamorous. No one gets paid. But it works. Surveys show people still trust Wikipedia more than AI encyclopedias—not because it’s perfect, but because you can see how every edit was debated, sourced, and reviewed. You’ll find stories here about how WikiProjects coordinate article improvements, how paid editors clash with volunteers, how AI tools are being tested, and how harassment off-wiki is forcing new safety policies. This isn’t theory. It’s the daily reality of keeping the world’s largest encyclopedia honest, accurate, and open. Below, you’ll see how real editors navigate this system—what works, what breaks, and what’s changing next.

Using Mediation and Third Opinion in Wikipedia Disputes

Wikipedia disputes are common, but mediation and third opinion processes help editors resolve conflicts without edit wars. Learn how these tools work, when to use them, and how they keep articles moving forward.

Governance Experiments: How Citizen Juries Are Shaping Wikipedia’s Future

Wikipedia is testing citizen juries and randomized panels to make its knowledge more fair and representative. These experiments bring everyday people into decision-making, improving accuracy and trust in the world's largest encyclopedia.

Community Governance on Wikipedia vs Corporate Editorial Control

Wikipedia relies on volunteers and open collaboration, while corporate encyclopedias like Britannica use paid editors and strict control. Which model delivers better, more accurate knowledge? Here’s how they really compare.

Arbitration Committee on Wikipedia: How Contentious Disputes Are Settled

The Wikipedia Arbitration Committee handles the most heated editor disputes, enforcing community rules to keep content neutral and editing civil. Learn how it works, what cases it handles, and why it's vital to Wikipedia’s survival.

Why Wikipedia Avoids Top-Down Editorial Control Despite Global Scale

Wikipedia thrives without top-down control by relying on community norms, transparent processes, and open collaboration. Millions of edits daily are guided by policy, not authority, making it one of the most resilient knowledge systems ever built.

How CentralNotice Banners on Wikipedia Are Approved and Governed

Wikipedia’s CentralNotice banners are carefully approved to maintain neutrality and trust. Learn how fundraising and policy messages are reviewed, who controls them, and why commercial or biased content is never allowed.

How Wikipedia Administrators Are Elected in 2025: Key Changes

In 2025, Wikipedia changed how its administrators are elected to prioritize experience over popularity. New rules require proven editing history, limit voting to active users, and replace majority votes with consensus-based approval.

How Wikipedia Policies Are Developed and Approved

Wikipedia policies are created and updated by volunteers through open discussion, not top-down decisions. Learn how consensus, transparency, and community experience shape the rules behind the world's largest encyclopedia.

Sockpuppetry on Wikipedia: How Fake Accounts Undermine Trust and What Happens When They're Caught

Sockpuppetry on Wikipedia involves fake accounts manipulating content to push agendas. Learn how investigations uncover these hidden users, the damage they cause, and why this threatens the platform's credibility.

Controversial Policy Debates Shaping Wikipedia Today

Wikipedia's policy debates over neutrality, notability, paid editing, and AI are reshaping how knowledge is curated and who gets to decide. These conflicts reveal deep tensions between global inclusion and Western-dominated governance.

ArbCom Election Controversies: Campaigns, Alliances, and Outcomes on Wikipedia

ArbCom elections on Wikipedia are high-stakes battles over power, bias, and control. Learn how campaigns, alliances, and voter turnout shape the future of the world's largest encyclopedia.