Wikipedia monitoring: How the community tracks edits, bots, and policy changes

When you read a Wikipedia article, you’re seeing the result of constant monitoring: the ongoing process of tracking edits, detecting bias, and enforcing policies to protect the integrity of free knowledge. Also known as content oversight, this work isn’t done by algorithms alone. It depends on a quiet army of volunteers who watch changes, edit wars, and suspicious accounts as they happen.

Behind the scenes, Wikipedia bots, automated tools that handle repetitive tasks like reverting vandalism and fixing broken links, work nonstop. They catch 80% of obvious spam before a human even sees it. But bots can’t judge intent, and that gap matters most with sockpuppetry, the use of fake accounts to manipulate content under false identities. These hidden users push agendas, silence dissent, or boost corporate pages, and they’re tracked down by teams using IP logs, writing style analysis, and community tips. When a sockpuppet operation is exposed, the damage isn’t limited to one article: it erodes trust in the whole system.

Then there’s The Signpost, Wikipedia’s volunteer-run newspaper, which reports on policy shifts, election drama, and community conflicts rather than world headlines. It’s not a news site you’d find on Google Trends, but it’s where the real battles are documented: who got banned for paid editing, which regional language projects are growing, and how Arbitration Committee (ArbCom) elections decide who holds power. This isn’t gossip. It’s accountability. Without this layer of monitoring, Wikipedia would be just another open wiki, easily hijacked, biased, or broken.

Monitoring isn’t about censorship. It’s about fairness. It’s why a student in Lagos can edit a Swahili Wikipedia page and trust that their changes won’t be quietly erased by a paid editor in New York. It’s why a journalist can use Wikipedia as a research tool without fearing hidden agendas. And it’s why, even though its articles are written by unpaid volunteers rather than paid editors, Wikipedia remains one of the most trusted sources online: thousands of people care enough to watch, report, and fix it every single day.

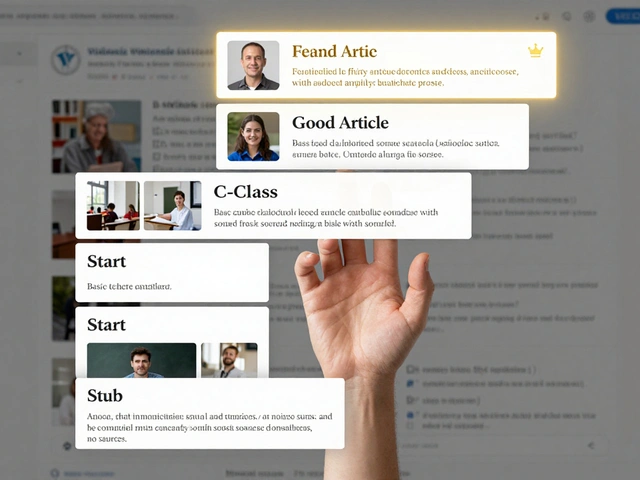

What follows is a collection of deep dives into how this system actually works—the tools, the people, the controversies, and the quiet victories. You’ll see how policy debates shape what stays online, how communities fight for representation, and how even the smallest edit can trigger a chain reaction across the globe.

How to Monitor Wikipedia Article Talk Pages for Quality Issues

Monitoring Wikipedia talk pages helps identify quality issues before they spread. Learn how to spot red flags, use tools, and contribute to better information across the platform.
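As one illustration of the kind of tooling involved, here is a minimal sketch, assuming you watch talk pages through the MediaWiki Action API’s `list=recentchanges` query restricted to namespace 1 (article talk pages on English Wikipedia). The function names `build_recent_talk_changes_url` and `flag_large_removals`, and the “large removal” red-flag rule with its 500-byte threshold, are hypothetical choices made for this example, not an official Wikipedia tool or policy.

```python
import json
from urllib.parse import urlencode

API_ENDPOINT = "https://en.wikipedia.org/w/api.php"
TALK_NAMESPACE = 1  # MediaWiki namespace 1 holds "Talk:" pages for articles


def build_recent_talk_changes_url(limit=50):
    """Build an Action API URL listing recent edits to article talk pages."""
    params = {
        "action": "query",
        "list": "recentchanges",
        "rcnamespace": TALK_NAMESPACE,
        # "sizes" adds oldlen/newlen (page size in bytes before and after the edit)
        "rcprop": "title|user|timestamp|comment|sizes",
        "rclimit": limit,
        "format": "json",
    }
    return API_ENDPOINT + "?" + urlencode(params)


def flag_large_removals(response_json, min_bytes_removed=500):
    """Return titles of talk-page edits that removed many bytes.

    Hypothetical red-flag rule: a big drop in page size can mean another
    editor's comments were deleted, which is worth a human look.
    """
    data = json.loads(response_json)
    flagged = []
    for change in data.get("query", {}).get("recentchanges", []):
        removed = change.get("oldlen", 0) - change.get("newlen", 0)
        if removed >= min_bytes_removed:
            flagged.append(change["title"])
    return flagged
```

To try it against the live wiki, you could pass the URL to `urllib.request.urlopen` and feed the decoded response body to `flag_large_removals`; Wikimedia asks API clients to send a descriptive `User-Agent` header. A size-based rule only surfaces candidates; deciding whether a removal was legitimate archiving or something worse still takes a human reading the diff.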