Wikipedia trust: How reliability is built, challenged, and protected

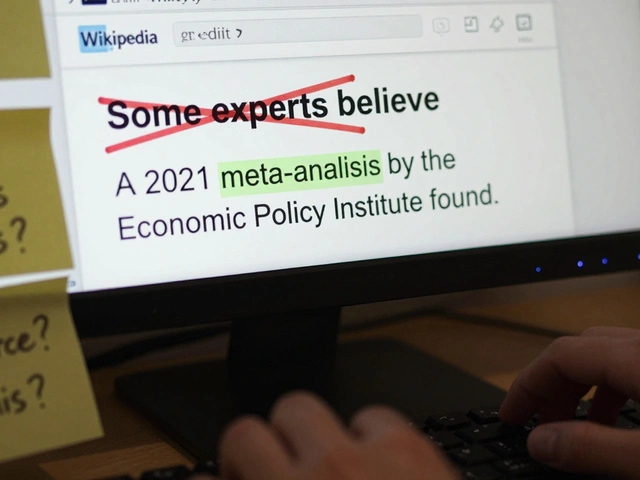

When you read a Wikipedia article, you're not just reading words; you're seeing the result of millions of decisions made by people trying to get it right. Wikipedia trust is the collective confidence readers place in Wikipedia's accuracy and fairness. Sometimes called information reliability on Wikipedia, it isn't guaranteed by any official stamp. It's earned one edit, one citation, one talk page discussion at a time. This trust doesn't come from the platform itself. It comes from the people who spend hours checking sources, arguing over neutrality, and fixing vandalism before it spreads. It's fragile: one bad source, one biased edit, or one ignored warning can chip away at it.

Behind every trusted article is a system designed to catch mistakes. Wikipedia policies, the rules that govern what can be added and how it's verified, are the backbone. Neutral point of view, verifiability, and no original research aren't suggestions. They're the filters that keep opinion out and evidence in. But policies only work if people follow them. That's where Wikipedia editors, the volunteers who create, update, and defend content, come in. Some are perfectionists who fix commas. Others are experts who add peer-reviewed studies. And then there are the bots: automated tools that revert vandalism within seconds. Without them, the site would drown in spam. But bots can't judge context. Only humans can decide whether a local newspaper is reliable, or whether a claim about a controversial topic needs more proof.

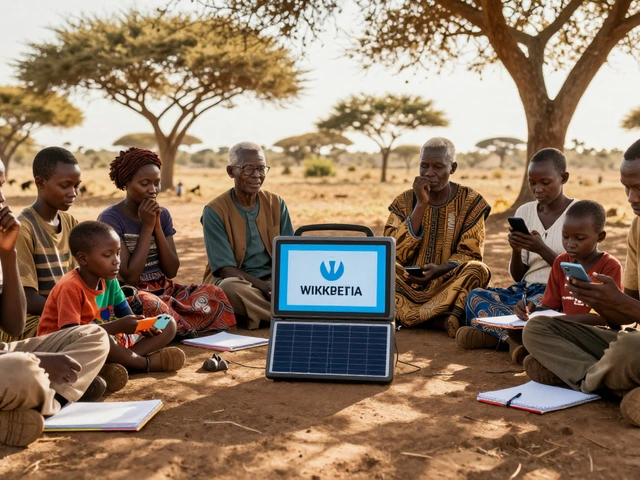

Trust also depends on what's missing. In places without strong local journalism, Wikipedia struggles: if there's no credible source to cite, events don't get recorded. That's why reliable sources, the external publications that back up Wikipedia's claims, are so critical. A news article from a major outlet carries weight; a blog post doesn't. But what if the only source is a community newsletter? That's where the real work begins: evaluating, debating, and sometimes fighting to include underrepresented voices without breaking the rules. It's messy. It's slow. But it's the only way to keep the knowledge honest.

And then there's the pressure. Paid editors, AI-generated content, and political campaigns all test Wikipedia's defenses. The community doesn't always agree on how to respond. Some edits get reverted. Some debates drag on for months. But every time someone steps in to fix a false claim, cite a better source, or explain why a change doesn't belong, they're rebuilding trust. You won't see that work in the final article. But it's there. And that's why, despite everything, Wikipedia still works.

Below, you'll find real stories from inside the system: the policy fights, the source debates, the quiet editors who keep things running. These aren't abstract ideas. They're the daily actions that make Wikipedia more than just a website. They're what keep you coming back.

Sockpuppetry on Wikipedia: How Fake Accounts Undermine Trust and What Happens When They're Caught

Sockpuppetry on Wikipedia involves fake accounts manipulating content to push agendas. Learn how investigations uncover these hidden users, the damage they cause, and why this threatens the platform's credibility.