Wikipedia doesn’t write news. It summarizes what news outlets have already reported, and only when those reports come from sources that meet strict standards. If you’ve ever wondered why a breaking story doesn’t show up on Wikipedia right away, or why some claims get removed after a few days, the answer lies in one rule: verifiable sources.

Why Wikipedia Won’t Publish What You Saw on Twitter

You see a viral tweet about a major political event. A celebrity is arrested. A company just filed for bankruptcy. You rush to Wikipedia to check the facts. Nothing’s there. Frustrating? Maybe. But that’s by design.

Wikipedia’s editors don’t trust unverified claims, even if they’re trending. A tweet, a blog post, or a YouTube video generally doesn’t count as a reliable source. That’s not because such posts are always wrong. It’s because they’re not accountable: anyone can post anything. Wikipedia needs sources that others can check, trace, and confirm.

That’s why Wikipedia’s verifiability policy requires that all quotations, and any material that is challenged or likely to be challenged, be attributed to a reliable, published source. It’s not about being slow. It’s about being accurate.

What Counts as a Reliable Source for News?

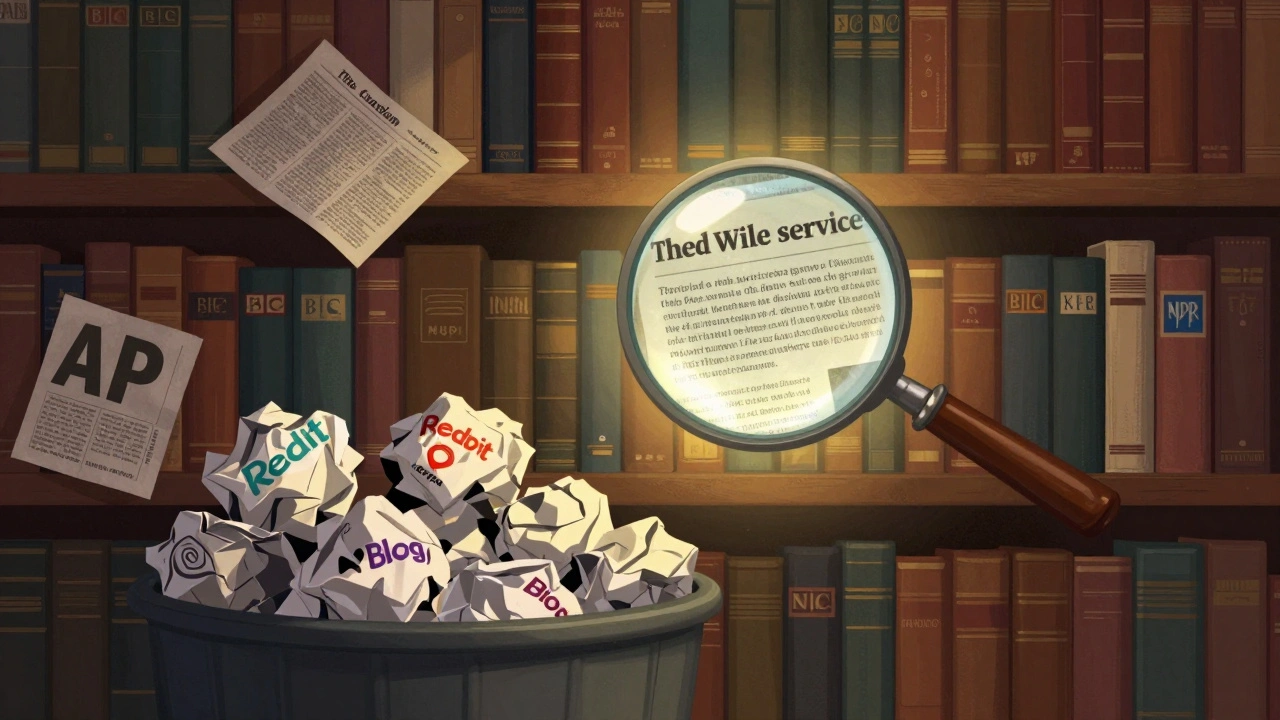

Not every newspaper, website, or TV station qualifies. Wikipedia editors maintain a list of frequently discussed sources, the “perennial sources” list, that records which outlets are generally considered reliable. These judgments aren’t arbitrary: they’re based on editorial independence, fact-checking standards, and track record.

Sources generally considered reliable include:

- Major national newspapers like The New York Times, The Guardian, or The Washington Post

- Established wire services like Associated Press (AP) or Reuters

- Peer-reviewed academic journals when reporting on research tied to news events

- Official government press releases and public statements, usually cited to attribute what officials said rather than as independent confirmation

- Reputable broadcast networks like BBC, CNN, or NPR

What’s not reliable? Personal blogs, Reddit threads, Instagram posts, corporate press releases without independent verification, and tabloids like The National Enquirer. A story also doesn’t gain credibility just by appearing in many places: if every outlet is copying the same report without doing original reporting, Wikipedia treats it as one source, not as independent confirmation.

Here’s how it works in practice: if a story breaks and only one small blog reports it, Wikipedia waits. Once a reliable outlet reports the detail, it becomes eligible for inclusion, and surprising or exceptional claims need multiple high-quality sources confirming them independently. That’s why Wikipedia articles often lag behind breaking news: editors are waiting for confirmation.

How Wikipedia Handles Controversial or Unconfirmed Reports

Sometimes, a major event happens and the facts are still unclear. A fire, a shooting, a natural disaster: initial reports are messy. In these cases, Wikipedia doesn’t stay silent. It tells readers the facts are still unclear.

Editors use phrases like “according to unconfirmed reports,” “as claimed by local authorities,” or “allegedly.” These qualifiers aren’t fluff. They’re essential. They tell readers: we’re reporting what someone said, not what we know to be true.

For example, during the 2023 Maui wildfires, early social media posts claimed the entire town of Lahaina was gone. Wikipedia didn’t add that until the Hawaii Emergency Management Agency confirmed it officially. Until then, the article used hedged wording along the lines of: “Reports from residents and emergency responders indicate widespread damage, but official figures are pending.”

This approach protects Wikipedia from spreading misinformation, even unintentionally. It also teaches readers how to think critically about news. If you see a Wikipedia article with qualifiers, it’s not a flaw. It’s a feature.

Why This Matters for Readers and Journalists

Wikipedia is one of the most visited websites in the world. Millions of people use it to learn about current events. If it started publishing unverified claims, it would become part of the problem, not the solution.

For readers, this means Wikipedia is a safety net. It doesn’t give you the first tweet about a story. It gives you the story after it’s been checked. That’s why students, researchers, and even professional journalists often use Wikipedia as a starting point for deeper investigation.

For journalists, Wikipedia’s standards act as a mirror. If your story isn’t good enough to be cited on Wikipedia, maybe it needs more reporting. The platform doesn’t reward speed. It rewards accuracy. That’s a high bar, but it’s the right one.

Wikipedia doesn’t want to be the first to break news. It wants to be the last to get it wrong.

What Happens When Sources Conflict?

Sometimes, two reputable outlets report different versions of the same event. One says a politician resigned voluntarily. Another says they were forced out. Who’s right?

Wikipedia doesn’t pick a side. It presents both claims, clearly attributed. The article might say: “According to The New York Times, the official resigned due to personal reasons. Bloomberg reported that the resignation followed pressure from party leadership.”

This is called “neutral point of view,” and it’s one of Wikipedia’s core policies. It’s not about being wishy-washy. It’s about showing the full picture when the truth isn’t settled. Readers get the context. They can decide for themselves.

Compare that to many news sites that frame stories with headlines designed to provoke. Wikipedia doesn’t have headlines. It has facts, with sources.

How to Check if a Source Is Acceptable

If you’re editing Wikipedia or just curious why something was removed, here’s how to test a source:

- Is it published? (Not just posted online)

- Does it have editorial oversight? (Someone fact-checks before publishing)

- Is it independent? (Not owned by the subject of the story)

- Has it been cited by other reliable sources? (Cross-verification matters)

- Is it widely recognized? (Not a niche blog or obscure website)

If you answer “no” to any of these, it’s probably not acceptable. You can also check Wikipedia’s Reliable Sources Noticeboard, where editors debate whether a source meets the standards. The discussions are public and archived, so you can see the reasoning behind past decisions.

Why This System Works, and Why It’s Hard to Replace

There are thousands of websites that report news faster than Wikipedia. But few of them combine broad coverage with reliability the way Wikipedia does.

Its strength isn’t in having reporters on the ground. It’s in having a global network of volunteers who cross-check claims against their sources. Every edit is public. Every source is visible. Every dispute is documented.

This system isn’t perfect. Mistakes happen. But the correction process is built in. If a source is later proven wrong, the article gets updated. If a source is added retroactively, the edit history shows it.

Wikipedia’s verifiable sources rule isn’t about control. It’s about trust. It says: we won’t tell you what happened. We’ll show you who said it, and how we know.

What You Can Do

If you care about accurate information:

- Don’t assume a Wikipedia article is outdated just because it isn’t updated instantly.

- Use Wikipedia to trace claims back to their original sources.

- If you find a claim that seems wrong, check the references. If they’re missing or unreliable, edit the article, or at least flag the claim with a “citation needed” tag.

- Support journalism that meets Wikipedia’s standards. Those outlets are the backbone of the platform.

Wikipedia doesn’t need you to write articles. It needs you to demand better sources. The more we expect verification, the less room there is for misinformation.