Wikipedia doesn’t show you the same page as your neighbor. Not because it’s broken, but because it’s starting to change, quietly and behind the scenes. AI-driven personalization is creeping into how articles are displayed, what links are highlighted, and even which versions of a topic you see first. This isn’t science fiction. It’s happening now. And no one asked you if you wanted it.

What Does AI Personalization Look Like on Wikipedia?

Right now, Wikipedia’s AI tools are mostly internal. They help editors flag vandalism, suggest edits, and sort through thousands of changes every minute. But experiments are underway to personalize the reader experience. Imagine searching for "climate change" and seeing a summary tailored to your location, reading level, or past clicks. Or getting a sidebar that says, "People like you also read this article about renewable energy policies in your state." AI-driven personalization is the use of machine learning algorithms to adapt content presentation based on individual user behavior, preferences, or demographic data. On Wikipedia, this could mean adjusting article complexity, reordering sections, or surfacing related content based on your browsing history, even if you’re not logged in.

Some of this is already happening in beta. In 2024, the Wikimedia Foundation tested a feature called "Read More" that used lightweight AI to recommend related articles based on how long users spent on a page. In one trial, users who saw AI-suggested links stayed 17% longer. That sounds good, until you realize the system didn’t tell them why those links appeared.

Why Wikipedia Shouldn’t Be Like Amazon or YouTube

Amazon recommends products. YouTube recommends videos. Both are built to keep you scrolling, to maximize engagement, to sell you something. Wikipedia’s mission is different. It’s not there to keep you hooked. It’s there to give you facts: fairly, neutrally, and transparently.

Personalization breaks that promise. If you’re shown a simplified version of "evolution" because you’re 14, and your neighbor sees a peer-reviewed summary because they’re a biology professor, you’re not getting the same knowledge. You’re getting different versions of the truth. And that’s not neutrality. That’s fragmentation.

Wikipedia’s core principle, the neutral point of view, isn’t just a guideline. It’s the foundation of its credibility. When AI starts filtering what you see based on who you are, that foundation cracks. You might think, "But it’s just a suggestion," but suggestions become defaults. Defaults become norms. Norms become expectations. And soon, you won’t even know what you’re missing.

Who Gets Left Out?

AI systems don’t work in a vacuum. They learn from data, and that data is biased. Wikipedia’s editor base is 85% male, mostly from North America and Europe. The AI models trained on this data will naturally favor perspectives from that group. If you’re a woman in rural India, or a non-binary teenager in Brazil, or someone who speaks Swahili as a first language, the personalized version of "gender equality" or "healthcare" you see might be shallow, outdated, or outright wrong.

There’s no public audit trail for these AI decisions. No way to know if your version of an article was filtered because of your IP address, your browser, or your past clicks. And if you don’t know what’s being hidden, you can’t question it.

In 2023, researchers at the University of Toronto analyzed 12,000 Wikipedia article views across 27 countries. They found that users in low-income regions were 32% less likely to see high-quality, cited versions of articles, even when they searched for the same terms as users in wealthier areas. The AI, trained mostly on data from English-speaking, high-income users, assumed those were the "standard" versions. That’s not personalization. That’s exclusion.

Transparency Is the Only Ethical Path

There’s a way forward, but it’s not about adding more AI. It’s about being honest about what AI does.

Here’s what ethical AI personalization on Wikipedia could look like:

- Opt-in only. No one gets personalized content unless they actively choose it. No sneaky defaults.

- Clear labels. If a section is reordered or summarized by AI, it says so: "This summary was generated using AI based on your reading history."

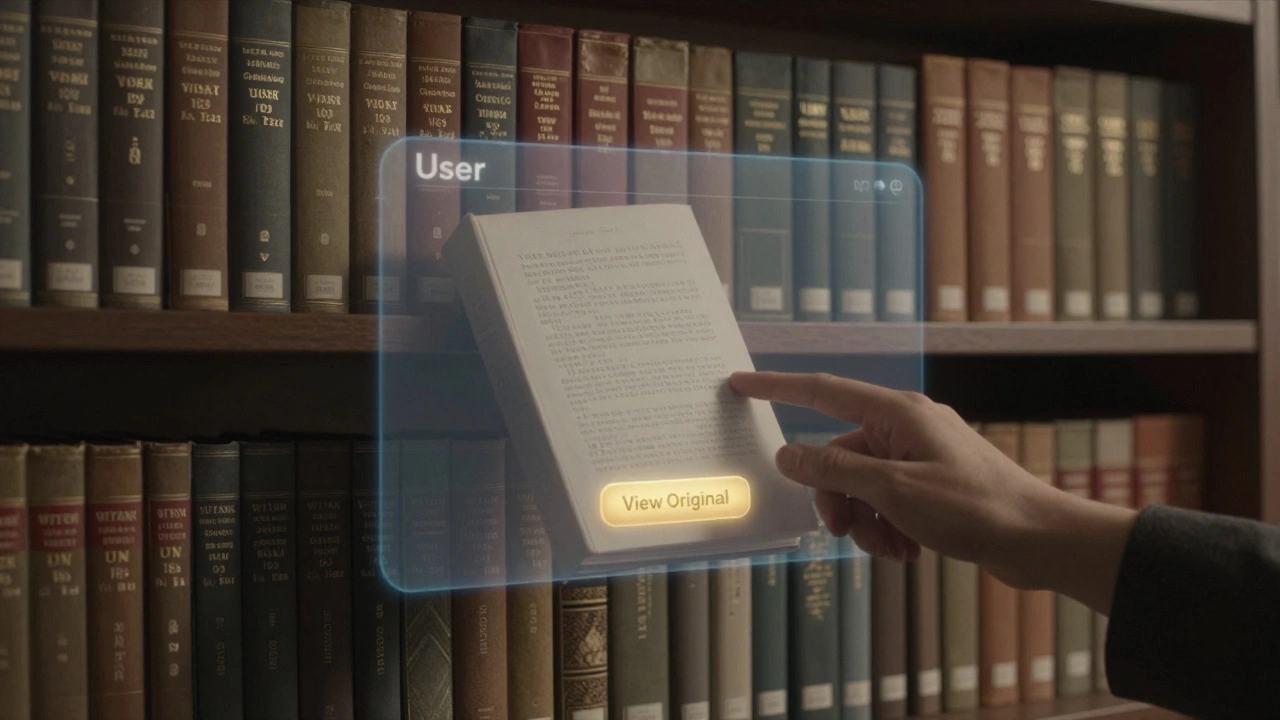

- Full access to the original. One click, and you see the unmodified, community-vetted version. No hidden "real" article.

- Public data logs. Anyone can see what kinds of data the AI used to make decisions, published in aggregated or anonymized form so that no individual reader’s privacy is compromised.

- Community oversight. Editors from diverse regions review AI suggestions before they roll out.

The Wikimedia Foundation has the technical ability to do this. It has done it before, with the "Mobile View" toggle, the "Simple English" version, and the "Printable Version" button. Those features respect user choice. They don’t assume they know better.
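To make the principles listed above concrete, here is a minimal sketch in Python of what "opt-in, labeled, reversible, and logged" could mean in practice. It is purely illustrative: `ReaderPrefs`, `RenderedArticle`, and `render` are hypothetical names invented for this example, not part of MediaWiki or any Wikimedia tool.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ReaderPrefs:
    # Hypothetical setting: personalization stays off unless the reader turns it on.
    personalization_opt_in: bool = False


@dataclass
class RenderedArticle:
    sections: List[str]            # what the reader is shown
    label: Optional[str]           # disclosure text, or None if nothing was changed
    original_sections: List[str]   # the unmodified order, always kept available
    audit_log: List[str] = field(default_factory=list)


def render(sections, prefs, preferred_order=None):
    """Return the canonical article unless the reader has explicitly opted in."""
    original = list(sections)
    if not prefs.personalization_opt_in or not preferred_order:
        # Default path: everyone gets the same community-vetted article.
        return RenderedArticle(original, None, original)

    # Move preferred sections to the front; nothing is ever dropped or hidden.
    front = [s for s in dict.fromkeys(preferred_order) if s in original]
    rest = [s for s in original if s not in front]

    label = "Sections on this page were reordered by AI based on your reading history."
    # The log records what kind of signal was used, not who the reader is.
    log = [f"reordered sections; signal=reading_history; moved_to_front={front}"]
    return RenderedArticle(front + rest, label, original, log)
```

The design choice that matters is the default: a reader who never touches a setting takes the first branch and sees exactly what everyone else sees, while an opted-in reader always gets a label and can still reach `original_sections`.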

What Happens If We Don’t Act?

If Wikipedia becomes another algorithm-driven platform, it loses what makes it unique: trust. People still turn to Wikipedia because they believe it’s the closest thing to an unbiased, collective knowledge base. That trust isn’t built on perfect articles. It’s built on consistency. On knowing that the same facts are there for everyone.

Once personalization takes hold, the next step is monetization. Ads. Sponsored links. Data collection. The same path every other free service took. And then, Wikipedia becomes just another corporate product dressed up as public good.

It’s not about stopping innovation. It’s about protecting a public resource. Wikipedia isn’t a startup. It’s a library. A museum. A shared archive of human knowledge. Libraries don’t personalize your books based on your income. They don’t hide the hard parts because you might find them uncomfortable. They give you everything, and let you decide what to do with it.

What You Can Do

You don’t need to be a coder or a policy expert to protect Wikipedia’s integrity. Here’s how you can help:

- Use the "View History" tab. See how articles change over time. Notice if your version looks different from others.

- Report suspicious edits or biased summaries through Wikipedia’s feedback system.

- Join the discussion on Meta-Wiki. The Wikimedia Foundation listens to users who speak up.

- Don’t assume your version is the only version. Compare your view with someone else’s.

- Support organizations that fund independent Wikipedia research, like the Digital Public Library of America or the Internet Archive.

The future of Wikipedia isn’t written by algorithms. It’s written by people who care enough to ask: "Who is this for? And who is being left out?"

Is Wikipedia already using AI to personalize what I see?

Not officially, and not in a widespread way. But small-scale tests are happening. For example, AI has been used to suggest related articles based on reading time, and to flag content that might be outdated. These are experimental and not yet standard for all users. There’s no public dashboard showing what personalization you’re getting-so if you notice differences in what you see compared to others, it could be early-stage testing.

Can I turn off AI personalization on Wikipedia?

Currently, there’s no option to turn off AI personalization because it’s not broadly deployed. But if it ever is, the ethical approach would be to make it opt-in, not opt-out. That means you’d have to actively choose to use it. Until then, you can minimize influence by using incognito mode, clearing cookies, or accessing Wikipedia through different devices or networks to compare views.
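If you want to compare views more carefully than by eye, one rough approach is to save the text of the same article from two sessions and diff them. The sketch below uses only Python’s standard library; `fingerprint` and `compare_views` are hypothetical helper names, and the inputs are assumed to be plain text you have copied or saved yourself.

```python
import difflib
import hashlib


def fingerprint(text: str) -> str:
    """Hash the text after trimming whitespace, so trivial spacing changes are ignored."""
    normalized = "\n".join(line.strip() for line in text.strip().splitlines())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def compare_views(mine: str, theirs: str) -> list:
    """Return an empty list if both views match, otherwise unified-diff lines."""
    if fingerprint(mine) == fingerprint(theirs):
        return []
    return list(
        difflib.unified_diff(
            mine.splitlines(),
            theirs.splitlines(),
            fromfile="my_view",
            tofile="their_view",
            lineterm="",
        )
    )
```

A non-empty diff is not proof of personalization. The article may simply have been edited between the two loads, or the two sessions may be using different layouts (mobile versus desktop, for instance), so check the "View History" tab before drawing conclusions.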

Does Wikipedia track my browsing history?

Wikipedia does not track individual users by default. It collects anonymous, aggregated data about page views to understand traffic patterns, but it doesn’t store your search history, login behavior, or personal details unless you create an account. Even then, it doesn’t use that data for personalization unless you explicitly agree to participate in research programs.

Why does Wikipedia need AI at all?

AI helps Wikipedia manage its scale. With over 60 million articles and millions of edits daily, human editors alone can’t catch everything. AI assists with detecting vandalism, suggesting missing citations, and translating content. The problem isn’t AI itself. It’s using AI to change what readers see, rather than just helping editors keep the site running.

What’s the difference between AI for editors and AI for readers?

AI for editors helps improve content quality by fixing grammar, suggesting sources, and flagging inconsistencies. That’s supportive. AI for readers changes what content you see by reordering sections, hiding complex parts, or highlighting certain links. That’s manipulative. The first helps the encyclopedia. The second changes your understanding of truth.