When Grokipedia launched its AI-generated articles in late 2024, it promised faster updates, broader coverage, and 24/7 content scaling. By mid-2025, more than 1.2 million articles had been written entirely by machine-learning models, covering everything from obscure indie films to municipal zoning laws. But users started noticing something strange. A profile of a 19th-century botanist listed her as a Nobel laureate; she never won one. A page on a Canadian political party claimed it was founded in 1989, when it actually began in 1973. These weren’t typos. They were hallucinations: confident, well-written, and completely wrong.

How Grokipedia’s AI Writes Articles

Grokipedia doesn’t use one AI model. It runs a pipeline of five specialized systems. One gathers sources from academic journals, news archives, and public databases. Another summarizes them. A third checks for contradictions. A fourth writes the draft in a neutral, encyclopedic tone. The fifth edits for readability and style. All of this happens in under 90 seconds per article.
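The five-stage flow can be sketched as a toy model in Python. Everything here is a hypothetical assumption (the `Draft` class, the stage functions, and the idea that each source is a simple field-to-value set of claims), since Grokipedia has not published its pipeline code. The sketch shows how a contradiction check can flag a disagreement without resolving it correctly.

```python
from collections import Counter
from dataclasses import dataclass, field

# Toy model of a five-stage article pipeline. Stage names, data shapes, and
# rules are hypothetical; they are not Grokipedia's actual implementation.

@dataclass
class Draft:
    topic: str
    sources: list = field(default_factory=list)    # per-source claim dicts
    claims: dict = field(default_factory=dict)     # field -> Counter of values
    conflicts: list = field(default_factory=list)  # fields where sources disagree
    text: str = ""

def gather(draft, corpus):
    # Stage 1: collect the claims of every document tagged with the topic.
    draft.sources = [doc["claims"] for doc in corpus if doc["topic"] == draft.topic]
    return draft

def summarize(draft):
    # Stage 2 (stand-in for summarization): tally how many sources assert
    # each value for each field.
    for src in draft.sources:
        for key, value in src.items():
            draft.claims.setdefault(key, Counter())[value] += 1
    return draft

def check_contradictions(draft):
    # Stage 3: flag fields that have more than one distinct value.
    draft.conflicts = sorted(k for k, c in draft.claims.items() if len(c) > 1)
    return draft

def write(draft):
    # Stage 4: state the most-cited value per field. Frequency is not
    # accuracy, so an error copied by many sources still wins.
    parts = [f"{k}: {c.most_common(1)[0][0]}" for k, c in sorted(draft.claims.items())]
    draft.text = "; ".join(parts)
    return draft

def edit(draft):
    # Stage 5: minimal style pass (capitalize the first letter, add a period).
    if draft.text:
        draft.text = draft.text[0].upper() + draft.text[1:] + "."
    return draft

def run_pipeline(topic, corpus):
    draft = gather(Draft(topic), corpus)
    for stage in (summarize, check_contradictions, write, edit):
        draft = stage(draft)
    return draft

# Made-up sample: two sources repeat a wrong founding year, one has the right one.
corpus = [
    {"topic": "party", "claims": {"founded": "1989"}},
    {"topic": "party", "claims": {"founded": "1989"}},
    {"topic": "party", "claims": {"founded": "1973"}},
]
result = run_pipeline("party", corpus)
print(result.conflicts)  # ['founded']
print(result.text)       # Founded: 1989.
```

In this toy run the contradiction stage correctly flags `founded`, yet the writing stage still states 1989, because picking the most frequently cited value is not the same as checking which value is true.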

The system pulls from over 200 million documents, including peer-reviewed papers, government reports, and verified Wikipedia edits. But here’s the catch: it doesn’t verify the accuracy of those sources. It only checks whether they’re publicly available and correctly formatted. If a blog post claims a scientist discovered a new element in 2023, and a major crawler indexes that blog, the AI might treat the claim as fact.
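To make that gap concrete, here is a hypothetical ingestion filter that mirrors the description: it accepts a document if it is publicly reachable and well-formed, and never asks whether its claims are true. The `Document` fields and the `passes_ingestion` name are illustrative assumptions, not Grokipedia's schema.

```python
from dataclasses import dataclass

# Hypothetical document record; field names are illustrative assumptions.
@dataclass
class Document:
    url: str
    publicly_available: bool
    title: str
    published: str  # date string, e.g. "2023-06-01"
    body: str

def passes_ingestion(doc: Document) -> bool:
    """Structural checks only: reachable, titled, dated, non-empty.

    Nothing here evaluates whether the body's claims are accurate.
    """
    return (
        doc.publicly_available
        and bool(doc.title.strip())
        and bool(doc.published.strip())
        and bool(doc.body.strip())
    )

# A made-up blog post with an unverified claim still passes every check.
blog = Document(
    url="https://example.com/new-element",
    publicly_available=True,
    title="Scientist discovers new element",
    published="2023-06-01",
    body="A researcher says she discovered a new element this year.",
)
print(passes_ingestion(blog))  # True
```

A filter like this would need a separate signal, such as source reputation or agreement across independent sources, to catch the blog post; that is exactly the check the paragraph above says is missing.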

Early tests showed the AI could generate credible-sounding articles on topics with low public visibility, such as regional dialects in rural Bolivia or the history of a single textile factory in Poland. But when checked against authoritative sources, 23% of these articles contained at least one factual error, according to a March 2025 audit by the University of Wisconsin-Madison.

Where Bias Creeps In

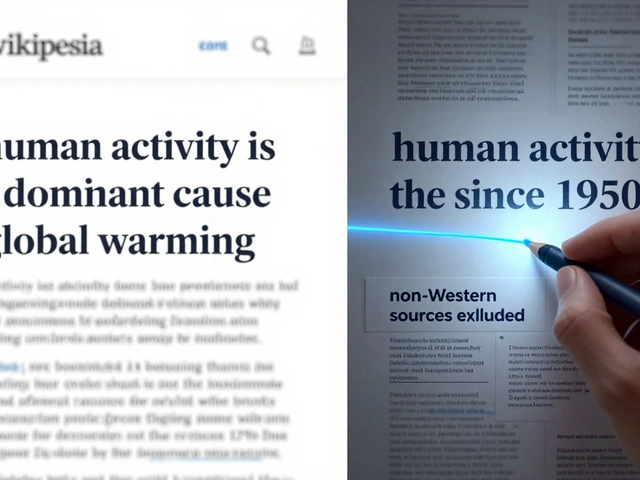

Bias isn’t always obvious. It doesn’t always mean racist or sexist language. Sometimes, it’s silence. Grokipedia’s AI tends to favor sources in English, published by institutions in the U.S., U.K., Canada, and Australia. Articles on African history, Indigenous knowledge systems, or non-Western medical practices are often shorter, less detailed, and more likely to cite outdated or colonial-era references.

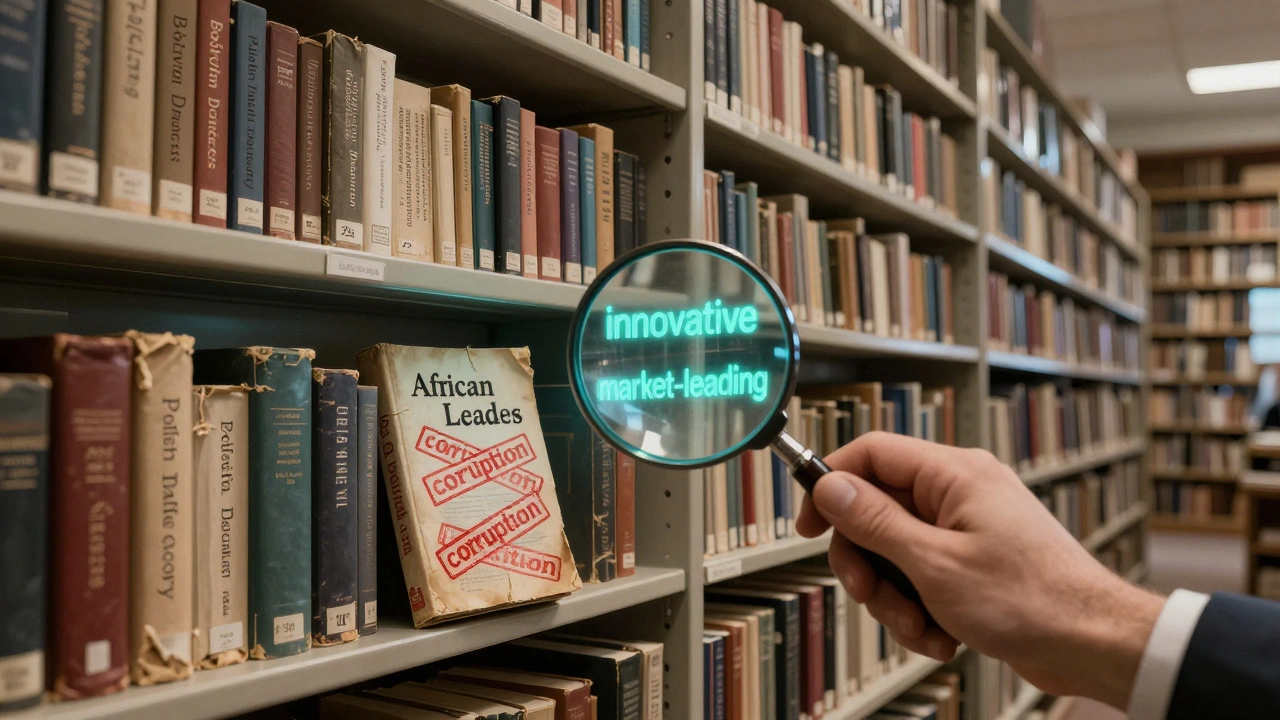

A 2025 analysis by the Center for Digital Equity found that AI-generated articles on African leaders were 40% more likely to mention "corruption" than their human-written counterparts. Articles on Asian tech startups were twice as likely to include "fast-growing" or "emerging," terms that imply they’re not yet established. Meanwhile, American and European startups were described as "innovative," "market-leading," or "industry-defining."

This isn’t because the AI was programmed to be biased. It’s because the training data reflects decades of unequal representation in global media. The AI learned patterns from what it was fed, not from what’s true or fair.

Even the tone of articles changes based on the subject’s nationality. A 2025 study by Stanford’s AI Ethics Lab found that AI articles describing protests in non-Western countries used phrases like "unrest," "disorder," or "government crackdown," while protests in Western countries were called "demonstrations," "civil disobedience," or "public outcry."

Who’s Responsible When It Goes Wrong?

When a human writes a Wikipedia article and gets something wrong, you can trace it back. You can see the edit history, the username, the discussion page. With Grokipedia’s AI, there’s no person. No accountability. No one to call out. The system doesn’t log who "wrote" the article; it just says "Generated by AI on [date]."

That’s a problem when misinformation spreads. In November 2025, an AI-generated article on a rare heart condition falsely claimed a new drug had been approved by the FDA. Within 72 hours, it was cited in three health blogs and two Reddit threads. By the time Grokipedia’s team caught the error and flagged it, over 80,000 people had read it. The drug wasn’t approved. The FDA issued a public correction.

Who pays for that? Who fixes it? Grokipedia says it’s "a community effort," but the community can’t fix what it can’t trace. Human editors can’t easily audit 1.2 million AI-written articles. And when they do, they often lack the medical, legal, or historical expertise to spot subtle inaccuracies.

Can You Trust AI-Generated Encyclopedias?

Some things AI gets right. It’s excellent at compiling dates, statistics, and standardized facts. If you need to know the population of a city, the birth year of a composer, or the chemical formula of aspirin, Grokipedia’s AI is usually accurate. It doesn’t get tired. It doesn’t forget. It doesn’t argue with sources.

But when it comes to interpretation, context, nuance, or contested history? That’s where it fails. Take the American Civil War. Human-written articles discuss causes like states’ rights, economic systems, and cultural identity. AI-generated ones often reduce it to "slavery vs. federal authority," because those are the two most common phrases in its training data. It misses the complexity. It flattens history into slogans.

Even simple things like defining "democracy" become risky. An AI might pull definitions from 19th-century textbooks, modern UN documents, and political opinion pieces all at once, then blend them into one "neutral" summary. The result? A definition that sounds official but contains conflicting assumptions.

Think of it like a chef who’s never cooked but has read every recipe ever written. They can assemble ingredients. They can follow steps. But they don’t know when the sauce is burning, when the salt is too much, or when the dish is missing something essential.

What’s Being Done About It?

As the backlash grew in early 2025, Grokipedia introduced a new system called "Verified by Human." Articles flagged by users or detected as high-risk (e.g., medical, legal, or political topics) are now routed to a team of 400 part-time editors (historians, scientists, journalists, and lawyers) who review them within 48 hours. So far, they’ve reviewed 89,000 articles. About 31% required major corrections.
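The routing rule reduces to a simple condition. The sketch below is an assumption about how such a rule could look: the category labels and the `review_deadline` function are hypothetical, and only the two triggers (a user flag or a high-risk topic) and the 48-hour review window come from the text.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical category labels; the 48-hour window is from the article.
HIGH_RISK_CATEGORIES = {"medical", "legal", "political"}
REVIEW_WINDOW = timedelta(hours=48)

def review_deadline(category: str, user_flagged: bool,
                    queued_at: datetime) -> Optional[datetime]:
    """Return when human review is due, or None if no review is required."""
    if user_flagged or category in HIGH_RISK_CATEGORIES:
        return queued_at + REVIEW_WINDOW
    return None

now = datetime(2025, 3, 1, 9, 0)
print(review_deadline("medical", False, now))  # 2025-03-03 09:00:00
print(review_deadline("film", False, now))     # None
print(review_deadline("film", True, now))      # 2025-03-03 09:00:00
```

Note that under this rule an unflagged article on a low-risk topic never reaches a human, which is consistent with the small share of articles that end up rated High.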

They also added a confidence score to every AI-generated article: Low, Medium, or High. Low means the article is based on few or conflicting sources. Medium means it’s supported by several reliable sources but lacks expert review. High means it’s been verified by a human editor. Only 12% of AI articles currently have a High rating.
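The three levels map onto a small decision function. In this hypothetical sketch, the input names and the threshold for "several" reliable sources (`min_sources=3`) are assumptions; the rule order follows the definitions above, with human verification taking precedence.

```python
from enum import Enum

class Confidence(Enum):
    LOW = "Low"
    MEDIUM = "Medium"
    HIGH = "High"

def confidence(reliable_sources: int, sources_conflict: bool,
               human_verified: bool, min_sources: int = 3) -> Confidence:
    """Assign a rating; min_sources=3 is an assumed reading of "several"."""
    if human_verified:
        # High: verified by a human editor.
        return Confidence.HIGH
    if sources_conflict or reliable_sources < min_sources:
        # Low: few or conflicting sources.
        return Confidence.LOW
    # Medium: several reliable sources, but no expert review yet.
    return Confidence.MEDIUM

print(confidence(5, False, False).value)  # Medium
print(confidence(1, False, False).value)  # Low
print(confidence(5, True, True).value)    # High
```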

Some users are skeptical. "If I have to check if an article is verified, why not just use Wikipedia?" asked one Reddit user. The answer? Speed and scale. Grokipedia can cover topics Wikipedia doesn’t even know exist yet.

What You Should Do

Don’t assume AI-generated articles are wrong. But don’t assume they’re right either. Here’s what works:

- Check the confidence score: if it’s Low or Medium, treat the article as a starting point, not a final answer.

- Look for citations: AI articles often link to sources. Click them. Are they from academic journals, government sites, or reputable news outlets? Or are they blogs, forums, or corporate press releases?

- Compare with other sources: if you’re researching something important, cross-check with at least two other trusted encyclopedias or databases.

- Report errors: Grokipedia’s "Report Inaccuracy" button now goes straight to a human reviewer. Use it.

The goal isn’t to reject AI-generated content. It’s to use it wisely. AI can help us learn faster. But it can’t replace human judgment. Not yet. Not in matters of truth.

Are AI-generated articles on Grokipedia reliable?

AI-generated articles on Grokipedia are reliable for basic facts like dates, names, and statistics, but not for interpretation, context, or contested topics. In a March 2025 audit by the University of Wisconsin-Madison, 23% of the tested articles on low-visibility topics contained at least one factual error. Always check the confidence score and verify with other sources.

How does Grokipedia detect bias in AI articles?

Grokipedia doesn’t automatically detect bias. Instead, it uses human reviewers to flag patterns after the fact. A 2025 study found AI articles on non-Western topics were shorter, used outdated sources, and repeated harmful stereotypes. The platform now prioritizes human review for articles on politics, history, and health, especially when they involve marginalized groups.

Can I trust Grokipedia more than Wikipedia?

It depends. Wikipedia has slower updates but stronger community oversight. Grokipedia updates faster and covers more obscure topics, but many articles lack human review. For well-known subjects, Wikipedia is usually more reliable. For emerging topics, such as new tech startups or recent policy changes, Grokipedia’s AI may be the only source available.

What happens when an AI article spreads misinformation?

When misinformation spreads, Grokipedia removes or edits the article and issues a public correction. But there’s no way to track who saw it or how far it spread. In one case, a false FDA approval claim reached over 80,000 readers before being corrected. There’s no legal liability for the platform, and no system to notify affected users.

Why doesn’t Grokipedia just use only human-written articles?

Human editors can’t keep up with the volume of new information. Grokipedia adds over 15,000 new topics every week. Human teams can only handle about 2,000. AI fills the gap, but it’s not a perfect replacement. The goal is a hybrid system: AI for speed and scale, humans for accuracy and context.

What Comes Next?

The next phase for Grokipedia isn’t just better AI. It’s transparency. The team is testing a feature that shows you exactly which sources the AI used to write each article, down to the sentence. You’ll be able to click and see: "This paragraph was based on a 2022 journal article from Springer, a 2023 BBC report, and a 2021 government white paper."
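Sentence-level attribution implies storing sources per sentence rather than per article. Here is a minimal, hypothetical data model (the `Source` and `Sentence` classes and the `explain_paragraph` function are assumptions, since the feature's design hasn't been published) that rebuilds the kind of summary quoted above from per-sentence source lists.

```python
from dataclasses import dataclass

# Hypothetical data model for sentence-level source attribution.

@dataclass(frozen=True)
class Source:
    label: str  # reader-facing description, e.g. "a 2023 BBC report"

@dataclass
class Sentence:
    text: str
    sources: tuple  # the Source objects this sentence draws on

def join_english(items):
    # "a", "a and b", or "a, b, and c" (serial comma)
    if len(items) < 3:
        return " and ".join(items)
    return ", ".join(items[:-1]) + ", and " + items[-1]

def explain_paragraph(sentences):
    # Deduplicate sources across sentences, keeping first-seen order.
    unique = list(dict.fromkeys(src for s in sentences for src in s.sources))
    return "This paragraph was based on " + join_english([u.label for u in unique]) + "."

springer = Source("a 2022 journal article from Springer")
bbc = Source("a 2023 BBC report")
white_paper = Source("a 2021 government white paper")
paragraph = [
    Sentence("First sentence.", (springer, bbc)),
    Sentence("Second sentence.", (bbc, white_paper)),
]
print(explain_paragraph(paragraph))
```

Keeping sources on each `Sentence` means the same records can answer both questions a reader might ask: "where did this paragraph come from?" and "where did this one sentence come from?"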

That kind of traceability could change everything. If you can see where the information came from, you can judge its quality yourself. No more guessing. No more blind trust.

For now, treat an AI-generated article like a first draft: useful, fast, and often correct. But always double-check before you cite it, share it, or believe it.