Every time you ask an AI chatbot a question, it might be pulling answers from Wikipedia. Not because it’s been told to, but because that’s where the data is: clean, free, and vast. Wikipedia has over 60 million articles in more than 300 languages. It’s the largest encyclopedia ever assembled. And now, it’s feeding the machines. But here’s the problem: Wikipedia doesn’t get credit. Not legally. Not consistently. And sometimes, not at all.

How AI Systems Use Wikipedia Without Asking

AI models like GPT, Claude, and Gemini weren’t trained on Wikipedia because someone handed them a license. They were trained on massive public datasets scraped from the internet: Common Crawl, The Pile, and others. Wikipedia is a major part of those datasets. Its content is structured, well-written, and free of paywalls. It’s the perfect training ground.

When you ask an AI, "Who invented the telephone?" or "What caused the fall of the Roman Empire?", it’s not recalling facts from a database. It’s predicting the most likely sequence of words based on patterns it learned from millions of Wikipedia articles. The original text isn’t copied word-for-word, but the structure, phrasing, and even unique turns of phrase often mirror Wikipedia’s style. That’s not coincidence. It’s training.
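To make "predicting the most likely sequence of words" concrete, here is a deliberately tiny sketch. It is a toy bigram counter, not how GPT, Claude, or Gemini actually work (those use large neural networks), and the three-sentence corpus and the function names `train_bigrams` and `predict_next` are made up for illustration. The point is only that the output comes from patterns in the training text, not from a lookup in a fact database.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical stand-in for encyclopedia text (illustration only).
corpus = (
    "alexander graham bell patented the telephone . "
    "the telephone changed communication . "
    "the telephone was patented in 1876 ."
)

model = train_bigrams(corpus)
print(predict_next(model, "the"))  # prints "telephone"
```

The model never stores the sentence "Bell patented the telephone." It only stores counts of which word tends to follow which, and that is why the source disappears from view once training is done.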

And here’s the twist: Wikipedia’s own license, the Creative Commons Attribution-ShareAlike license, requires anyone who reuses its content to give credit. But AI companies don’t. Not in the way the license demands. They don’t list which articles their models learned from. They don’t link back. They don’t even say "this answer is based on Wikipedia" unless you specifically ask.

Why Attribution Matters More Than You Think

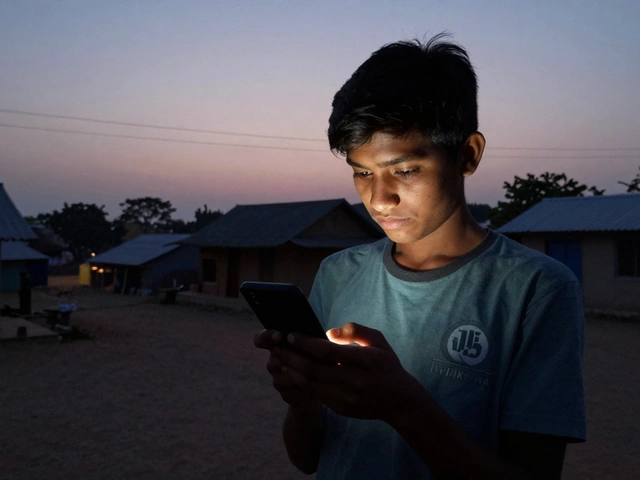

Wikipedia isn’t just a website. It’s a community. Over 200,000 active editors contribute every month. They’re volunteers. Teachers, students, librarians, retirees. They spend hours fact-checking, citing sources, rewriting for clarity. They do it because they believe in open knowledge.

When AI systems use their work without attribution, it’s not just a legal gray area. It’s a moral one. These editors aren’t paid. They don’t get royalties. But on Wikipedia they do get visibility, because every edit is recorded under their name. When that work surfaces in an AI response, they’re erased from the record. No one knows who wrote the sentence that helped an AI answer a child’s homework question. No one knows who fixed the typo in the article about quantum computing that helped a researcher understand a concept.

There’s a real risk here: if AI companies keep using Wikipedia’s content without credit, the incentive to contribute could shrink. Why spend hours editing a page if your work just disappears into a black box?

What the Law Actually Says (And Doesn’t Say)

Legally, AI companies are walking a tightrope. The Creative Commons Attribution-ShareAlike 4.0 license (CC BY-SA 4.0) requires anyone who shares the content, or an adaptation of it, to:

- Give appropriate credit

- Provide a link to the license

- Indicate if changes were made

- Share any derivative work under the same license

But here’s the catch: courts haven’t ruled on whether training an AI on CC BY-SA content counts as creating a "derivative work." Some legal scholars say no, arguing that training is like reading a book, not copying it. Others argue that if the AI regurgitates the structure, phrasing, or unique insights from Wikipedia, then it’s a derivative.

The U.S. Copyright Office says purely AI-generated content isn’t copyrightable without meaningful human authorship. But that is a rule about outputs, and it doesn’t make the input data free for the taking. Wikipedia’s license is itself a copyright license: its attribution terms bind whenever a use needs permission under copyright law. Whether AI training is such a use is exactly where the conflict lives.

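Courts in Europe have started to weigh in on AI training, though not on Wikipedia’s license specifically. In 2024, the Hamburg Regional Court held, in a photographer’s suit against the nonprofit LAION, that copying an image to build a research training dataset fell under Germany’s text-and-data-mining exception. That ruling dealt with one photograph and one dataset, and it left open whether open-license conditions like attribution follow content into a trained model.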

What AI Companies Are Doing (And Not Doing)

Most big AI companies don’t mention Wikipedia at all in their training data disclosures. OpenAI’s training data documentation lists "web text" but doesn’t break it down. Anthropic says it uses "publicly available internet data," which is vague enough to include Wikipedia without naming it.

Only a few have taken steps. Hugging Face, the open-source AI platform, hosts Wikipedia datasets with dataset cards that state the source and its license. Meta’s research paper for its original LLaMA model listed Wikipedia by name as one of its training sources.

But these are exceptions. Most companies treat Wikipedia like a free buffet: take what you want, leave no trace.

Real-World Consequences: When AI Gets Wikipedia Wrong

Wikipedia isn’t perfect. It has gaps, biases, and occasional errors. But it’s transparent. You can see who edited what, when, and why. You can click the "View history" tab and trace the evolution of an article.

AI doesn’t show that. It gives you a polished answer, but you have no idea if it’s based on a well-sourced article or a poorly edited stub. In 2023, an AI assistant told a user that the founder of Wikipedia was a "British computer scientist named John Smith." That’s not true. Wikipedia was founded by Jimmy Wales and Larry Sanger. But the AI had learned from a fake edit that had been up for two weeks before being reverted. It didn’t know the edit was wrong. It just repeated what it had seen.

When AI systems use Wikipedia without attribution, they also inherit its blind spots. Articles on women scientists, Indigenous knowledge, or non-Western history are often underdeveloped. AI learns from that, and then reinforces it. Without knowing the source, users assume the AI’s answer is neutral, when it’s actually echoing systemic gaps.

What Needs to Change

There are three clear paths forward.

First: AI companies must include attribution in outputs. If an answer is clearly based on Wikipedia, the system should say so. Not as a footnote. Not as an option. As a standard feature. For example: "This answer draws from the Wikipedia article on [topic], licensed under CC BY-SA 4.0."
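What might that standard feature look like in practice? Here is a minimal sketch, assuming the system already knows which article an answer leaned on (the hard part, which this does not solve). The names `WikipediaSource` and `attribution_line` are hypothetical and not taken from any real product. The output covers the license conditions that fit in one line: credit, a link to the license, and a note on whether the text was changed.

```python
from dataclasses import dataclass
from urllib.parse import quote

# Official URL of the CC BY-SA 4.0 license deed.
CC_BY_SA_URL = "https://creativecommons.org/licenses/by-sa/4.0/"


@dataclass
class WikipediaSource:
    """Hypothetical record of one article an answer drew on."""
    title: str
    modified: bool = True  # assumption: AI answers usually paraphrase

    @property
    def url(self):
        # English Wikipedia article URLs use underscores in place of spaces.
        return "https://en.wikipedia.org/wiki/" + quote(self.title.replace(" ", "_"))


def attribution_line(source):
    """Build a one-line credit: article, link, license, and change notice."""
    changes = "Text was adapted." if source.modified else "Text is unchanged."
    return (
        f'This answer draws from the Wikipedia article on "{source.title}" '
        f"({source.url}), licensed under CC BY-SA 4.0 ({CC_BY_SA_URL}). {changes}"
    )


print(attribution_line(WikipediaSource("Roman Empire")))
```

A line like this costs almost nothing to generate. The open question is whether a model can reliably say which articles shaped a given answer.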

Second: Create a standardized way to track Wikipedia usage. Imagine a system where every AI model trained on Wikipedia logs which articles it used and how often. That data could be made public. Editors could see which of their edits are being used. Communities could track impact. This isn’t science fiction. It’s already being piloted by the Wikimedia Foundation in partnership with academic researchers.
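The bookkeeping behind such a log is simple. The sketch below is an assumption about what one could look like, not a description of any existing pilot: the `UsageLog` class and its methods are invented for illustration. It counts how often each article title appears in a training run and exports the counts as JSON that could be published.

```python
import json
from collections import Counter


class UsageLog:
    """Hypothetical per-article usage counter for a training run."""

    def __init__(self):
        self._counts = Counter()

    def record(self, article_title, times=1):
        """Note that an article was used `times` more times."""
        self._counts[article_title] += times

    def top(self, n):
        """Return the n most-used articles as (title, count) pairs."""
        return self._counts.most_common(n)

    def to_json(self):
        """Serialize the counts, sorted by title, for public release."""
        return json.dumps(dict(sorted(self._counts.items())), indent=2)


log = UsageLog()
for title in ["Telephone", "Roman Empire", "Telephone"]:
    log.record(title)

print(log.to_json())
```

Counting is the easy part. Connecting a title back to the individual editors who wrote it would need the article’s revision history, which Wikipedia already keeps.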

Third: Legal clarity is needed. Legislators and courts need to define whether AI training falls under "fair use" or requires compliance with open licenses. Without that, Wikipedia’s community will keep being exploited.

Wikipedia doesn’t need money from AI companies. It needs recognition. It needs to be seen as the public good it is, not a free data mine.

What You Can Do

You don’t have to wait for corporations or lawmakers to act. Here’s how you can help:

- If you use AI tools, ask: "Where did this information come from?" If the answer is vague, push back.

- Support Wikipedia. Edit a page. Donate. Share articles you find useful.

- Report AI outputs that use Wikipedia content without credit. Tools like Wikipedia’s AI Detection Bot are starting to flag these cases.

- Advocate for transparency. Ask your favorite AI provider to disclose training data sources.

The future of knowledge isn’t just in the hands of tech giants. It’s in the hands of the people who write, edit, and care about what’s true. If we let AI swallow Wikipedia without credit, we lose more than a source. We lose the culture that made it possible.

Do AI companies legally have to credit Wikipedia?

Legally, it depends. Wikipedia’s content is licensed under CC BY-SA 4.0, which requires attribution. But current copyright law doesn’t clearly say whether training an AI on that content counts as creating a "derivative work," and courts have not settled the question. So while it’s not universally enforced, the license still applies to reuse of the text, and ignoring it risks legal and ethical consequences.

Can I use AI answers that came from Wikipedia without citing it?

If you’re using an AI-generated answer for personal use, you don’t need to cite it. But if you’re publishing it, sharing it publicly, or using it in academic or professional work, you should trace the source. Many AI answers are based on Wikipedia. Search the web for a distinctive phrase from the answer in quotation marks, or prompt the AI: "Show me the source." Check any source it names yourself, since chatbots can misattribute, then cite Wikipedia directly if it matches.

Why don’t AI companies just pay Wikipedia for data?

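Wikipedia’s content isn’t for sale. It’s run by a nonprofit that believes knowledge should be free, and putting the content behind a paywall would go against its mission. (The Wikimedia Foundation does offer a paid service, Wikimedia Enterprise, for high-volume commercial access, but the content stays under the same open license.) The issue isn’t payment. It’s respect. Wikipedia’s license allows free use as long as credit is given. AI companies can use the data legally without paying, but they’re choosing not to credit it.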

Does Wikipedia know how much its data is used by AI?

Not exactly. Wikipedia doesn’t track which AI systems use its content. But researchers are starting to detect patterns. A 2024 study from the University of Oxford found that over 70% of AI-generated responses on factual topics matched Wikipedia’s phrasing closely enough to be traced back. The Wikimedia Foundation is now working on tools to better monitor and document this usage.
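How would anyone measure whether phrasing "matches"? One common and simple approach is to compare overlapping word sequences (n-grams). The sketch below is only an illustration of that general idea. It is not the method of the study mentioned above, and the function names and example sentences are made up.

```python
def ngrams(text, n=3):
    """Return the set of n-word sequences in `text`, ignoring case."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(response, article, n=3):
    """Fraction of the response's n-grams that also appear in the article."""
    response_grams = ngrams(response, n)
    if not response_grams:
        return 0.0  # response is shorter than n words
    return len(response_grams & ngrams(article, n)) / len(response_grams)


# Invented example sentences (illustration only).
article = "the telephone was patented by alexander graham bell in 1876"
response = "the telephone was patented by bell in 1876"

# 4 of the response's 6 three-word sequences also occur in the article.
print(round(overlap_ratio(response, article), 2))  # prints 0.67
```

A high ratio suggests shared phrasing, but it can’t prove where the model learned it, because the same wording often appears on many sites that copied Wikipedia in the first place.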

What happens if AI starts replacing Wikipedia?

If people start trusting AI answers more than Wikipedia, fewer people will edit or donate. That could lead to lower quality, outdated articles, and fewer perspectives. Wikipedia thrives on community. AI doesn’t have a community. It has algorithms. Without active human editors, Wikipedia could fade. And then we’d lose the largest free, editable, transparent source of knowledge, one built by millions.