When an AI makes a change to a Wikipedia article, most readers never know it happened. No notification. No explanation. Just a small edit summary like "typo fix" or "updated citation." But what if that edit changed the tone of a historical event, softened a controversial claim, or quietly added a bias? Without explainability, AI edits in collaborative encyclopedias don’t just slip through; they erode trust.

Why AI Edits Are Already Here

Wikipedia isn’t waiting for permission to use AI. In 2024, the Wikimedia Foundation quietly rolled out AI-assisted editing tools to over 100,000 active editors. These tools suggest edits for grammar, formatting, and citation gaps. But they’re also starting to rewrite sentences for clarity, merge duplicate content, and even update factual claims based on new sources.

It’s not science fiction. In 2025, a study by the University of California, Berkeley analyzed 12 million edits across 12 language versions of Wikipedia. It found that 18% of all edits made by experienced editors included AI suggestions. Of those, 42% were accepted without review. That’s nearly 1 in 5 edits influenced by an algorithm no one asked for.

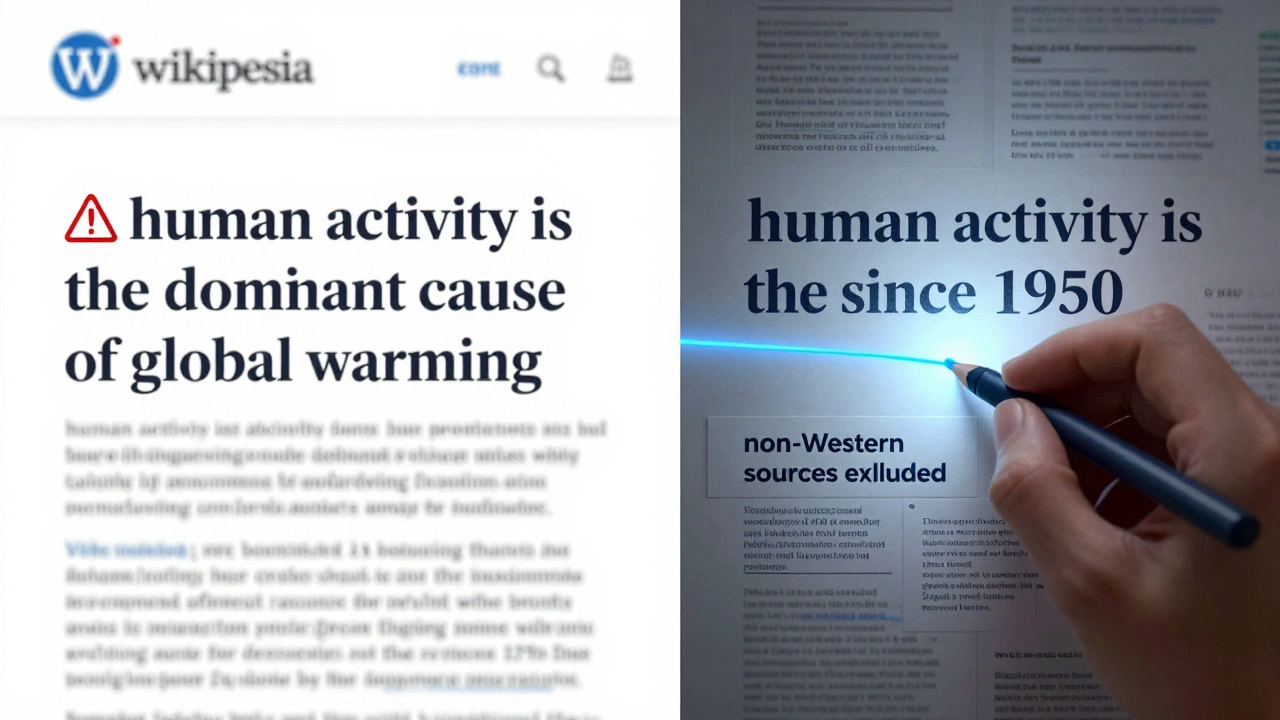

These aren’t just corrections. They’re interpretations. An AI might replace "some historians argue" with "most scholars agree" because it found three recent papers supporting the latter. But those papers might be from the same research group. Or they might ignore non-Western sources. Without knowing why the AI made that call, readers can’t judge whether the change is accurate or dangerous.

The Trust Problem

Wikipedia’s strength has always been transparency. Every edit is logged. Every user is accountable. Every change can be debated. That’s why millions trust it more than Google’s AI summaries.

But AI edits break that chain. When a bot changes a sentence, it doesn’t leave a trail of reasoning. It doesn’t say: "I changed this because I found a 2023 meta-analysis in Nature that contradicted the old claim, and I weighted it higher because it included 12,000 participants."

That’s not how AI works. It just outputs a new version. And when humans accept it, they’re trusting a black box.

Imagine a student writing a paper on climate policy. They cite a Wikipedia article that says, "The IPCC now confirms that human activity is the dominant cause of global warming since 1950." But behind that sentence, an AI quietly removed the phrase "since 1950" because it thought the wording was redundant. Now the student’s citation is misleading. And they’ll never know why.

What Explainability Actually Means

Explainability isn’t about showing code or model weights. It’s about giving users and editors a clear, human-readable reason for why a change was made.

Here’s what it looks like in practice:

- A proposed edit says: "Replaced 'claimed' with 'documented' based on peer-reviewed study from Journal of Environmental History, 2024, page 87."

- Another edit adds: "Removed reference to 'ancient alien theory' because it was cited only in non-academic sources. Added two peer-reviewed papers on archaeology instead."

- And a third: "This edit merges two sections because the content was 92% overlapping, according to semantic similarity analysis. Original sources preserved in edit history."

These aren’t just edit summaries. They’re audit trails. They tell you the source, the reasoning, and, where the tool reports one, the confidence level. They turn silent changes into conversations.
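To make the idea of an audit trail concrete, here is a minimal sketch of what such a record might look like as a data structure. The class name `EditExplanation` and its fields are hypothetical, chosen for illustration; they are not taken from any real Wikimedia tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class EditExplanation:
    """Hypothetical audit-trail record attached to one AI-suggested edit."""

    action: str                          # what changed
    reason: str                          # why it changed
    source: Optional[str] = None         # citation backing the change, if any
    confidence: Optional[float] = None   # 0.0 to 1.0, if the tool reports one

    def summary(self) -> str:
        """Render the record as a human-readable edit summary."""
        text = f"{self.action} because {self.reason}."
        if self.source:
            text += f" Source: {self.source}."
        if self.confidence is not None:
            text += f" Confidence: {self.confidence:.0%}."
        return text


# Illustrative values modeled on the first example above.
explanation = EditExplanation(
    action="Replaced 'claimed' with 'documented'",
    reason="a peer-reviewed study supports the stronger wording",
    source="Journal of Environmental History, 2024, page 87",
)
print(explanation.summary())
```

Keeping the source and confidence optional is a design choice in this sketch: a summary with no source is still allowed, but the gap is visible to anyone reading it.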

How to Build Explainability Into AI Tools

Designing explainability isn’t an add-on. It’s a core requirement. Here’s how it’s being done right now in pilot projects:

- Source tagging - Every AI suggestion must link to the specific source it used. Not just "cited from a study," but the DOI, journal name, and page number. If the source is a blog or a news article, say so.

- Confidence scoring - AI should say how sure it is. "High confidence (94%)" vs. "Low confidence (58%)" changes how editors respond. A low-score edit gets flagged for human review.

- Change impact preview - Before accepting, users see a side-by-side comparison: old version vs. AI version, with color-coded additions, deletions, and rephrasings. No hidden edits.

- Reason templates - AI tools use standardized phrases so users know what to expect. "Replaced due to outdated source" or "Merged to reduce redundancy" are clear. Vague terms like "improved" are banned.

- Human override logs - If an editor rejects an AI suggestion, the system records why. That data trains better models and reveals blind spots.

The Wikimedia Foundation’s 2025 AI Transparency Initiative already requires all AI-assisted edits to include at least three of these elements. Early results show a 60% drop in disputed edits and a 45% increase in editor trust scores.
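Three of the elements listed above (confidence scoring, reason templates, and the change impact preview) are simple enough to sketch in a few lines. The code below is an illustration under stated assumptions, not how any pilot project actually implements them: the 0.75 review threshold, the function names `triage` and `preview`, and the bracket markup standing in for color coding are all invented for the example.

```python
import difflib

# Assumed values for illustration; the pilots' real settings are not known.
REVIEW_THRESHOLD = 0.75
ALLOWED_REASONS = {
    "Replaced due to outdated source",
    "Merged to reduce redundancy",
}


def triage(reason: str, confidence: float) -> str:
    """Decide what happens to an AI suggestion before an editor sees it."""
    if reason not in ALLOWED_REASONS:
        # Vague reasons such as "improved" are not on the template list.
        return "reject: non-standard reason"
    if confidence < REVIEW_THRESHOLD:
        return "flag for human review"
    return "show to editor"


def preview(old: str, new: str) -> str:
    """Word-level preview: [-deleted-] and {+added+} stand in for colors."""
    a, b = old.split(), new.split()
    parts = []
    matcher = difflib.SequenceMatcher(None, a, b)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            parts.append(" ".join(a[i1:i2]))
            continue
        if i1 < i2:  # words removed from the old version
            parts.append("[-" + " ".join(a[i1:i2]) + "-]")
        if j1 < j2:  # words introduced by the new version
            parts.append("{+" + " ".join(b[j1:j2]) + "+}")
    return " ".join(parts)


print(triage("Replaced due to outdated source", 0.94))
print(triage("Merged to reduce redundancy", 0.58))
print(preview("Some historians argue the treaty failed.",
              "Most scholars agree the treaty failed."))
```

With these assumed settings, the 94% suggestion goes straight to the editor, the 58% one is flagged for review, and the preview marks the shift from "Some historians argue" to "Most scholars agree" so that it cannot pass unnoticed.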

Who Gets Left Out?

Not everyone can read academic papers or understand semantic similarity scores. Explainability must work for non-experts too.

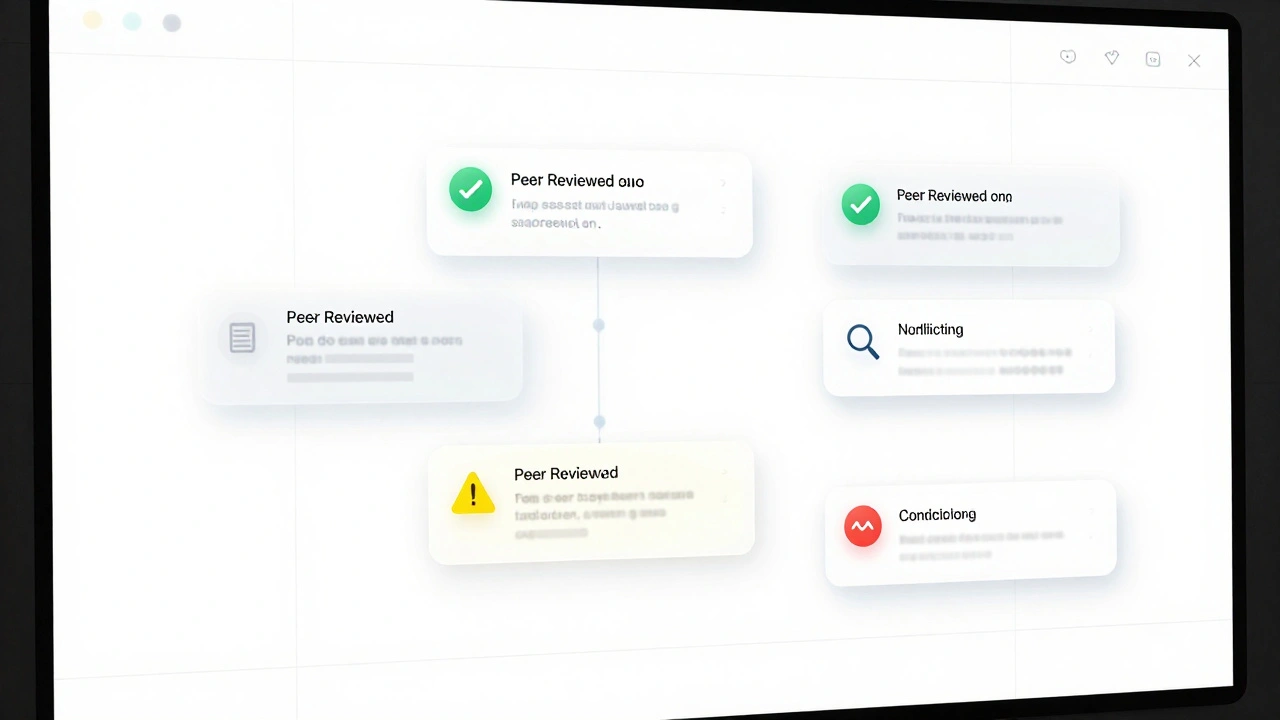

Some communities are testing simple visual indicators:

- A green checkmark means: "This edit is backed by peer-reviewed research."

- A yellow triangle means: "This edit is based on a news report. Use with caution."

- A red circle means: "This edit contradicts a widely accepted source. Review before accepting."

These aren’t just icons. They’re decision aids. A high school student, a retiree, or someone in a country with limited access to academic databases can still judge the quality of a change.

And it’s not just about language. In languages like Swahili or Hindi, where AI training data is sparse, explainability tools must adapt. Some projects now include voice explanations in local dialects, so users can hear why a change was made.
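The three visual indicators described above amount to a small decision rule. A rough sketch follows, assuming the tool already knows the type of source behind an edit and whether the edit contradicts a widely accepted source. The names `Indicator` and `pick_indicator`, the source-type strings, and the choice to let the red circle take precedence are assumptions made for this example.

```python
from enum import Enum
from typing import Optional


class Indicator(Enum):
    GREEN_CHECK = "This edit is backed by peer-reviewed research."
    YELLOW_TRIANGLE = "This edit is based on a news report. Use with caution."
    RED_CIRCLE = (
        "This edit contradicts a widely accepted source. "
        "Review before accepting."
    )


def pick_indicator(source_type: str,
                   contradicts_accepted: bool) -> Optional[Indicator]:
    """Map an edit's evidence to one of the three icons.

    Assumption: a contradiction outranks the source type, so an edit that
    conflicts with an accepted source is red even if it cites a journal.
    """
    if contradicts_accepted:
        return Indicator.RED_CIRCLE
    if source_type == "peer_reviewed":
        return Indicator.GREEN_CHECK
    if source_type == "news":
        return Indicator.YELLOW_TRIANGLE
    return None  # no icon is described for other source types


icon = pick_indicator("news", contradicts_accepted=False)
print(icon.name, "-", icon.value)
```

Returning `None` for anything outside the three described cases is deliberate: the sketch does not guess at an icon that the communities testing these indicators have not defined.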

The Bigger Picture

This isn’t just about Wikipedia. It’s about how society builds shared knowledge in the age of AI.

Google, Bing, and ChatGPT are already pulling facts from Wikipedia. If Wikipedia’s AI edits are opaque, then those systems inherit the same blind spots. The misinformation doesn’t stop at the encyclopedia. It spreads to search results, summaries, and automated answers.

Explainability is the bridge between automation and accountability. Without it, we’re not building better knowledge. We’re outsourcing truth to algorithms we can’t question.

When an AI changes a fact, it’s not just editing text. It’s shaping belief. And belief needs a reason.

What’s Next?

By 2027, all major collaborative encyclopedias will be required to include explainability features for AI edits. The EU’s Digital Services Act already classifies AI-assisted content on public knowledge platforms as "high-risk," mandating transparency.

But tools alone won’t fix this. We need culture. We need editors who ask: "Why did the AI say that?" We need readers who expect answers. We need platforms that make reasoning visible, not optional.

The future of knowledge isn’t about more AI. It’s about smarter, clearer, more honest AI. And that starts with one simple rule: If it changes what we believe, it must explain why.