When you type "Wikipedia" into a search engine, you see a clean, neutral page full of facts. But behind that calm surface, governments around the world have been quietly trying to shape what shows up there. Not always with bold edits. Sometimes with emails, subpoenas, or quiet requests. And a growing number of journalists are using the Freedom of Information Act (FOIA) to pull back the curtain.

What FOIA-Driven Journalism Actually Looks Like

FOIA isn’t just for whistleblowers or investigative reporters chasing corruption. It’s also a tool for understanding how public institutions interact with platforms that billions rely on every day. In 2023, a team of reporters at The Markup filed over 200 FOIA requests across U.S. state and federal agencies. Their goal? To find out if government employees were contacting Wikipedia editors to change content about themselves, their agencies, or controversial policies.

The results weren’t shocking, but they were revealing. In one case, a mid-level official at the Department of Veterans Affairs sent an email to a Wikipedia administrator asking to "correct misinformation" about a recent policy delay. The email didn’t demand deletion. It didn’t threaten. It simply attached a press release and asked, "Could you update this?" The edit was made. No public record of the request existed until FOIA uncovered it.

These aren’t rogue actors. They’re bureaucrats following internal guidelines. Many agencies have training manuals that tell staff how to "engage with online encyclopedias." Some even have designated liaisons. The problem? That engagement rarely appears in public logs. And when it does, it’s often buried under layers of redaction.

Why Wikipedia? Why Now?

Wikipedia isn’t just a website. It’s the default source for facts in the digital age. A 2024 study by the Pew Research Center found that 64% of U.S. adults use Wikipedia as a first step when researching government policies. That makes it a prime target for influence, not because governments want to lie, but because they want to control the narrative.

Think about it: if a citizen is trying to understand how their tax dollars are being spent, and the official Wikipedia page on "Federal Housing Grants" is vague or outdated, they turn elsewhere. But if that page is quietly updated with clearer language, approved by agency lawyers, and scrubbed of criticism, that’s not just editing. It’s information management.

And it’s not limited to the U.S. In 2022, journalists in Germany used their equivalent of FOIA to uncover that over 300 government employees had edited Wikipedia articles related to climate policy, immigration, and public health. Most edits were minor: fixing typos, adding sources. But 17% of the edits removed critical quotes from experts. None of those changes were disclosed.

How Journalists Are Using FOIA to Track This

FOIA requests for Wikipedia-related records follow a pattern. Journalists don’t ask for "all edits." That’s impossible. Instead, they ask for:

- Emails between government employees and Wikipedia administrators

- Logs of IP addresses used to edit articles about public agencies

- Internal memos about Wikipedia editing policies

- Requests for "content review" or "fact-checking assistance" from Wikipedia’s official channels

One of the most revealing cases came from a reporter in Illinois who requested records from the state’s Department of Public Health. The department had received a complaint about Wikipedia’s article on vaccine side effects. The response? A memo from a senior analyst recommending edits to "tone down" language about rare adverse reactions. The memo was approved. The edit was made. The FOIA request surfaced the memo. Until then, the public didn’t know.

Wikipedia’s own policies say edits should be transparent. But transparency doesn’t mean public disclosure of who asked for a change. It means editors should cite sources. That’s where the gap opens. A government employee can quietly push for a change. An editor, trusting the source, makes it. No one knows the request came from inside the agency.

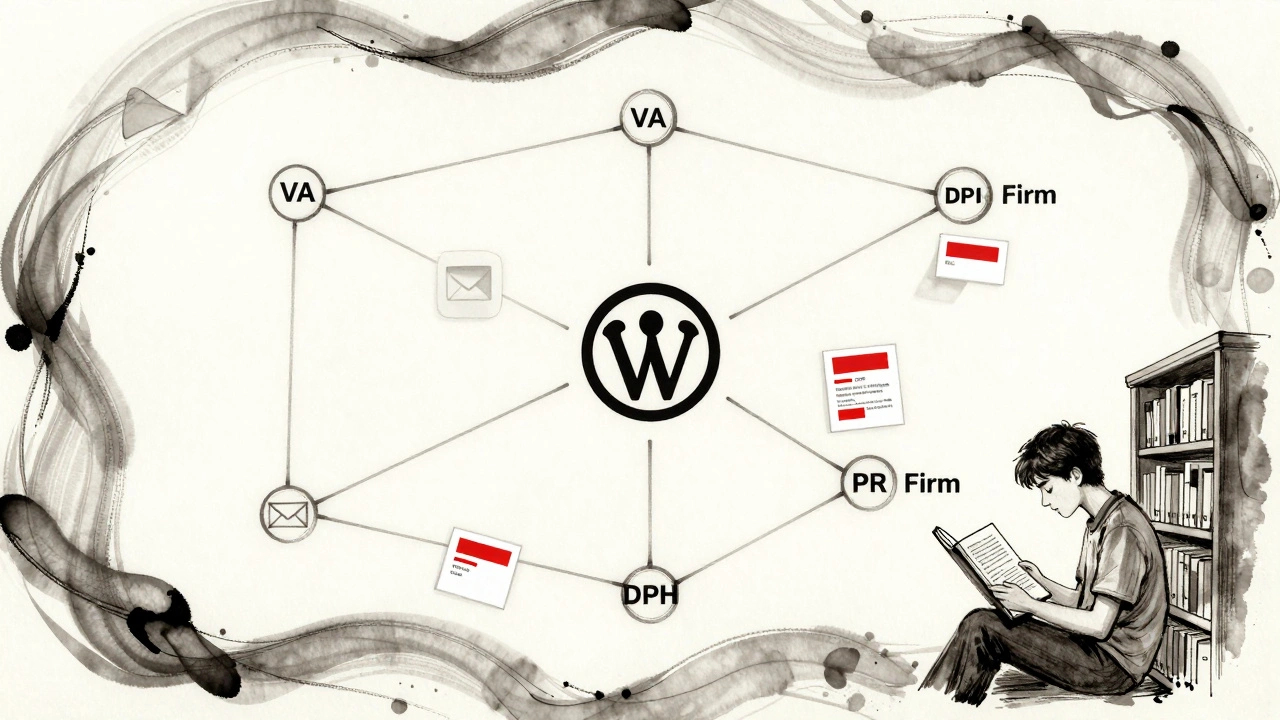

The Hidden Infrastructure of Influence

There’s a quiet network behind these edits. It includes:

- Wikipedia’s Office of Dispute Resolution: A team that handles sensitive requests from institutions, including governments. They don’t publish statistics.

- Government Media Liaisons: Staff hired specifically to manage online presence, including Wikipedia. Their names are rarely public.

- Contracted Editors: Some agencies outsource Wikipedia editing to PR firms. One firm in Virginia was found to have edited over 1,200 articles about federal contractors between 2020 and 2023.

None of these arrangements is illegal. But they’re invisible. And that’s the problem.

Compare this to how governments handle press releases. Those are published. Attributed. Logged. Wikipedia edits? No. They’re treated like private conversations, even when they’re about public policy.

What’s at Stake?

It’s not about bias. It’s about trust. When people believe Wikipedia is neutral, they believe it’s a shared public resource. But when they learn that government officials quietly shaped the information they relied on, that trust cracks.

Imagine a student writing a paper on police reform. They read Wikipedia’s article on "Body Cameras and Accountability." It says: "Most studies show body cameras reduce complaints against officers." But a 2023 meta-analysis in the Journal of Criminal Justice found mixed results, with some studies showing no effect. If that nuance was removed after a police department requested it, how does the student know?

Wikipedia’s strength has always been its openness. Anyone can edit. Anyone can see the history. But when external actors, especially those with power, start influencing edits without disclosure, that openness becomes a facade.

What’s Being Done?

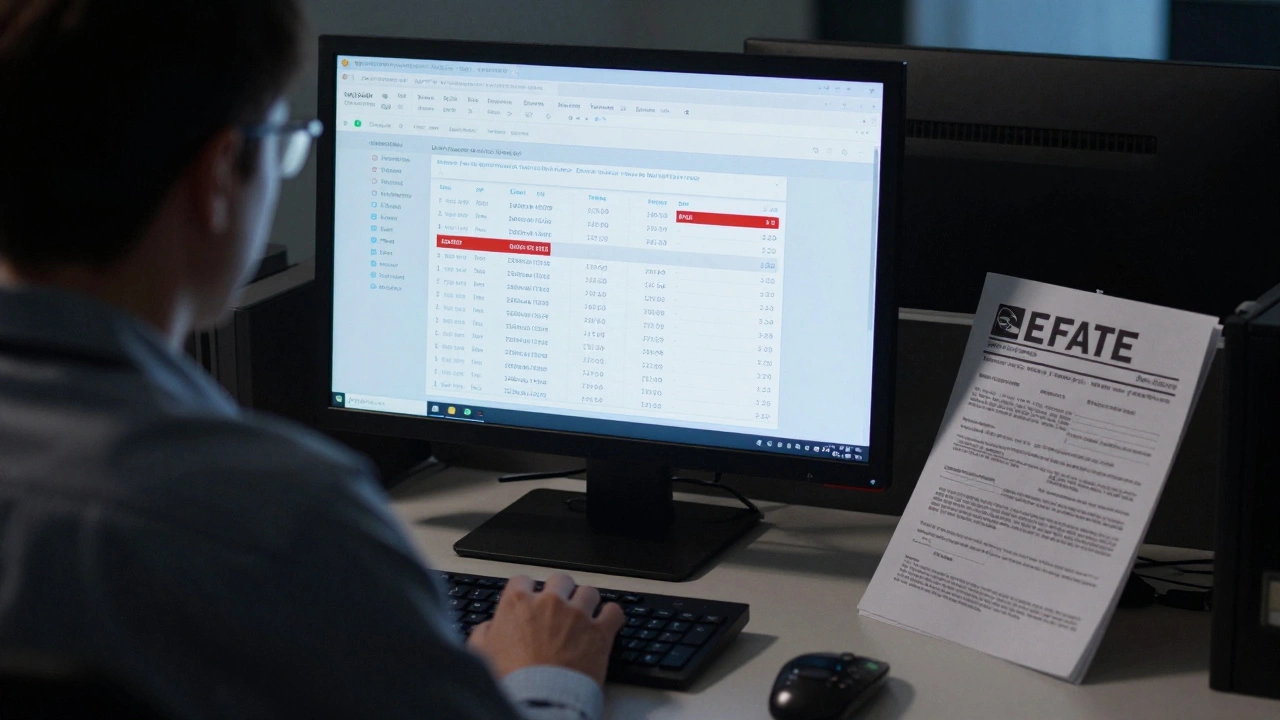

The Wikimedia Foundation, which operates Wikipedia, has taken small steps. In 2024, it began publishing quarterly reports on "institutional editing," a term it coined to describe edits from government, corporate, or nonprofit accounts. The reports show that government-related edits increased by 28% between 2022 and 2023. Most were labeled as "minor corrections." Fewer than 5% were flagged as potentially biased.

But without mandatory disclosure, those numbers are incomplete. Journalists are pushing for change. Some are calling for a public registry: if a government employee edits a Wikipedia article about their agency, the edit should carry a tag: "Requested by [Agency Name]."

It’s not about censorship. It’s about accountability.

What You Can Do

If you’re curious about how your government interacts with Wikipedia:

- File a FOIA request asking for any communications between your local agency and Wikipedia administrators.

- Check the history of Wikipedia articles about your city’s public services. Look for edits made from government IP addresses.

- Use tools like WikiWho or WikiScan to track patterns in edits over time.
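If you want to check an article’s history yourself, the idea can be sketched in a few lines of Python. This is a rough sketch under stated assumptions, not a vetted investigative tool. It reads revision history through the public MediaWiki Action API (`action=query` with `prop=revisions`) and flags revisions whose author field is an IP address inside a range you supply. The function names `fetch_revisions` and `flag_revisions`, the User-Agent string, and the IP ranges are all illustrative. The ranges shown are reserved documentation addresses, not real agency networks, so you would need to substitute ranges you have confirmed from public registries or from records an agency has released. Registered accounts never match, and wikis that mask logged-out editors behind temporary accounts will not expose IP addresses at all.

```python
import ipaddress
import json
import urllib.parse
import urllib.request

API_URL = "https://en.wikipedia.org/w/api.php"


def fetch_revisions(title, limit=50):
    """Fetch recent revisions of one article from the MediaWiki Action API."""
    params = {
        "action": "query",
        "prop": "revisions",
        "titles": title,
        "rvprop": "timestamp|user|comment",
        "rvlimit": str(limit),
        "format": "json",
        "formatversion": "2",
    }
    url = API_URL + "?" + urllib.parse.urlencode(params)
    # Placeholder User-Agent; replace with one that identifies you.
    request = urllib.request.Request(
        url, headers={"User-Agent": "edit-history-sketch/0.1 (example)"}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        data = json.load(response)
    pages = data["query"]["pages"]
    # A missing page has no "revisions" key, so fall back to an empty list.
    return pages[0].get("revisions", [])


def flag_revisions(revisions, networks):
    """Return revisions whose 'user' field is an IP inside any given network."""
    parsed = [ipaddress.ip_network(n) for n in networks]
    flagged = []
    for rev in revisions:
        try:
            address = ipaddress.ip_address(rev.get("user", ""))
        except ValueError:
            continue  # a registered account or hidden user, not an IP
        if any(address in net for net in parsed):
            flagged.append(rev)
    return flagged


# Placeholder ranges (reserved for documentation), NOT real agency networks.
EXAMPLE_RANGES = ["192.0.2.0/24", "2001:db8::/32"]

if __name__ == "__main__":
    # Offline sample shaped like API output, so nothing here touches the network.
    sample = [
        {"user": "192.0.2.44", "timestamp": "2023-05-01T12:00:00Z", "comment": "update"},
        {"user": "ExampleEditor", "timestamp": "2023-05-02T09:30:00Z", "comment": "copyedit"},
        {"user": "203.0.113.9", "timestamp": "2023-05-03T18:45:00Z", "comment": "fix typo"},
    ]
    for rev in flag_revisions(sample, EXAMPLE_RANGES):
        print(rev["timestamp"], rev["user"], rev["comment"])
```

To run it against a live article, call `flag_revisions(fetch_revisions("Some article title"), your_ranges)`. A match only shows that an edit came from a network in your list. It says nothing about who was at the keyboard or why, so treat it as a lead to follow up with a records request rather than as proof.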

Transparency doesn’t happen by accident. It happens because someone asked the right question and refused to take "we can’t share that" as an answer.