Wikipedia has over 300 language editions, but not all of them are equal. Some, like English and German, have millions of articles. Others, like Luganda or Kyrgyz, struggle to reach 100,000. The gap isn’t because people in those regions don’t want to contribute; it’s because they often lack the tools, time, or resources to build content from scratch. That’s where machine translation steps in: not as a replacement for human editors, but as a powerful bridge.

Why Wikipedia Needs Cross-Language Help

Wikipedia’s goal is to give everyone free access to knowledge. But if you search for "climate change" in Swahili, you get a short article with basic facts. In English, you get a 20,000-word deep dive with citations, maps, and historical context. That’s not because Swahili speakers care less about climate change. It’s because the English version was built over years by thousands of contributors. Most smaller-language Wikipedias don’t have that luxury.

Studies from the Wikimedia Foundation show that 60% of articles in low-resource languages are under 500 words. Meanwhile, top-language versions average over 3,000 words per article. The content imbalance isn’t random; it’s structural. Machine translation helps fill that gap by turning well-developed articles from major languages into starting points for others.

How Machine Translation Works on Wikipedia

Wikipedia editors don’t simply paste articles into a general-purpose translator. They work in Content Translation, a tool built by the Wikimedia Foundation that uses machine translation to help editors copy and adapt articles from one language to another. Depending on the language pair, it can draw on several engines, including Google Translate, the open-source Apertium, and MinT, Wikimedia’s own service for open-source neural models. Around those engines, the tool is built for Wikipedia’s format: it keeps track of lead sections, infoboxes, citations, and section headings, so the structure of the source article carries over.

When an editor on the Bengali Wikipedia wants to start an article about "quantum computing," they open Content Translation, pick the English version, and click "translate." The system doesn’t just dump raw text. It breaks the article into chunks, translates each part, and keeps the formatting intact. The editor then reviews, edits, adds local context, and publishes. It’s not magic, but it cuts the time to create a draft from days to minutes.
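The chunk-by-chunk approach can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Content Translation’s actual pipeline: it assumes wikitext input in which each paragraph sits on one line and citations are plain `<ref>...</ref>` tags, and it takes a hypothetical `translate` function standing in for whatever engine is used. Headings keep their `==` markup, citations are swapped for placeholders before translation and restored afterward, and blank lines between paragraphs are left alone.

```python
import re
from typing import Callable

# Hypothetical translator interface: any function mapping source text to
# target text. In practice this would wrap a real machine translation engine.
Translator = Callable[[str], str]

HEADING = re.compile(r"^(=+)\s*(.*?)\s*\1\s*$")  # e.g. "== History =="
REF = re.compile(r"<ref>.*?</ref>")              # simple, unnamed citations only


def _protect_refs(text: str) -> tuple[str, list[str]]:
    """Swap each <ref>...</ref> citation for a placeholder the engine should leave alone."""
    refs = REF.findall(text)
    for i, ref in enumerate(refs):
        text = text.replace(ref, f"__REF{i}__", 1)
    return text, refs


def _restore_refs(text: str, refs: list[str]) -> str:
    """Put the original citations back in place of their placeholders."""
    for i, ref in enumerate(refs):
        text = text.replace(f"__REF{i}__", ref, 1)
    return text


def translate_article(wikitext: str, translate: Translator) -> str:
    """Translate a wikitext article one chunk (heading or paragraph) at a time."""
    out = []
    for line in wikitext.splitlines():
        heading = HEADING.match(line)
        if heading:
            # Keep the == markup == and translate only the heading text.
            marks, title = heading.groups()
            out.append(f"{marks} {translate(title)} {marks}")
        elif line.strip():
            # Translate the paragraph with its citations shielded.
            protected, refs = _protect_refs(line)
            out.append(_restore_refs(translate(protected), refs))
        else:
            out.append(line)  # blank lines separate paragraphs; keep them
    return "\n".join(out)


if __name__ == "__main__":
    article = "== Energy ==\nSolar power is growing.<ref>Report 2024</ref>\n\nMore text."
    # str.upper stands in for a real engine so the effect is easy to see.
    print(translate_article(article, str.upper))
```

With `str.upper` as the stand-in engine, the example prints `== ENERGY ==`, then `SOLAR POWER IS GROWING.<ref>Report 2024</ref>`, a blank line, and `MORE TEXT.`: the heading markers and the citation come through untouched while the prose is "translated." A real engine may alter or drop placeholders, so a production tool would also need to check that every placeholder survives.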

Since its launch in 2015, Content Translation has helped create over 1.5 million articles across 150 languages. The biggest gains? Languages like Tamil, Telugu, and Vietnamese, where article counts jumped by 40% or more in just three years.

Real Impact: From Translation to Growth

In 2023, the Nepali Wikipedia had only 12,000 articles. By 2025, thanks largely to machine translation, it hit 58,000. That’s not because Nepal suddenly had 46,000 new editors. It’s because volunteers used Content Translation to import articles from English, Spanish, and Hindi, then adapted them. One editor in Kathmandu translated a 3,000-word article about renewable energy from English, added local data on solar adoption in rural Nepal, and linked it to Nepali government policies. That article became a template. Others followed.

Similar stories played out on the Swahili Wikipedia, where translated articles about public health led to a surge in local edits. Nurses, teachers, and students started adding details about clinics, disease outbreaks, and traditional remedies. Machine translation didn’t write those additions; it gave them a foundation to build on.

What Machine Translation Can’t Do

It’s easy to think AI can replace human editors. But that’s not how it works. Machine translation can’t understand cultural nuance. It doesn’t know that "bicycle" in rural Indonesia might mean something different than in Tokyo. It can’t judge if a source is trustworthy in a local context. And it often gets idioms wrong.

Take the English phrase "it’s raining cats and dogs." A literal translation into Arabic or Mandarin would confuse readers. Content Translation tries to avoid these traps by flagging potentially problematic phrases. But it still needs a human to step in and say: "In our region, we say it’s like the sky is crying."
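To make the idea of flagging concrete, here is a minimal sketch in Python. It assumes a hypothetical, community-maintained list of idioms paired with their plain meanings and simply reports where each one appears in the source text; the names `IDIOMS` and `flag_idioms` are illustrative only, and this is not how Content Translation itself implements its checks.

```python
import re

# Hypothetical idiom list a community might maintain: each entry maps an
# English idiom to its plain meaning, so a reviewer knows what to convey.
IDIOMS = {
    "raining cats and dogs": "raining very heavily",
    "break a leg": "good luck",
    "piece of cake": "very easy",
}


def flag_idioms(text: str, idioms: dict[str, str] = IDIOMS) -> list[tuple[int, str, str]]:
    """Return (offset, idiom, meaning) for every idiom found, ordered by position."""
    flags = []
    for idiom, meaning in idioms.items():
        # re.escape matches the idiom literally; IGNORECASE also catches "Raining...".
        for match in re.finditer(re.escape(idiom), text, flags=re.IGNORECASE):
            flags.append((match.start(), idiom, meaning))
    return sorted(flags)


if __name__ == "__main__":
    sentence = "It's raining cats and dogs, but the exam was a piece of cake."
    for offset, idiom, meaning in flag_idioms(sentence):
        print(f"offset {offset}: '{idiom}' (means: {meaning}) needs human review")
```

Sorting by offset keeps the flags in reading order, so an editor can work through them top to bottom. The sketch only points at the phrase; choosing a local equivalent like "the sky is crying" stays a human decision.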

Also, machine translation struggles with technical terms that don’t have established equivalents. "Blockchain," "neuroplasticity," or "feminist jurisprudence" might not exist in many languages. Editors have to create those terms themselves, often through community discussion. That’s where Wikipedia’s collaborative culture matters most.

Who Uses This Tool, and Why

Most users of Content Translation aren’t professional translators. They’re students, librarians, activists, and hobbyists. In the Philippines, a group of high school students used it to translate 200 science articles into Tagalog. In Ukraine, volunteers translated medical guides into Ukrainian after the Russian invasion, replacing Russian-language content that was no longer trusted.

These users don’t need perfect translations. They need accessible knowledge. A 70% accurate translation that gets a child in rural Kenya to understand how vaccines work is better than a flawless translation that never gets published.

Challenges and Fixes

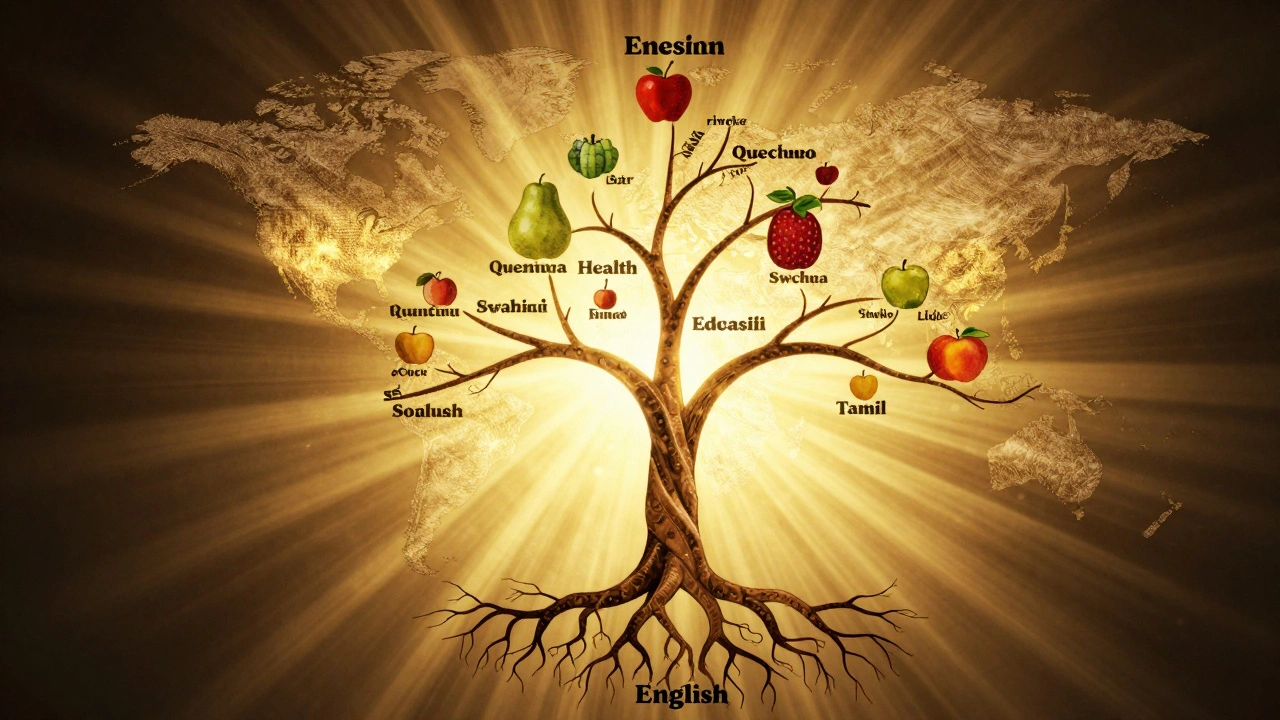

Not all languages benefit equally. Machine translation models are trained on data. And data is uneven. Languages with lots of digital content, like French or Japanese, get better translations. Languages with little online presence, like Ainu or Quechua, get poor results or none at all.

Wikipedia’s solution? Community-driven data collection. Volunteers are encouraged to contribute texts: textbooks, local news, oral histories, government documents. These are used to fine-tune translation models. In 2024, a project in Peru collected 12,000 Quechua sentences from elders. That data improved translation accuracy for Quechua by 38% in just six months.

Another fix? Prioritizing articles that matter. Instead of translating random articles, editors now focus on high-impact topics: health, education, climate, rights. The "1000 Core Articles" project targets the most essential knowledge for every language: basic biology, civic rights, safety tips. These are translated first. Then, the rest follows.

The Bigger Picture

Machine translation on Wikipedia isn’t just about words. It’s about equity. It’s about making sure a kid in Laos has the same chance to learn about the solar system as a kid in London. It’s about giving voice to knowledge that’s been ignored because it wasn’t written in English, Spanish, or Mandarin.

And it’s working. Since 2020, the number of active editors in low-resource languages has grown by 62%. The average article length in those languages has increased by 50%. And the gap between the richest and poorest language editions is narrowing, slowly but surely.

This isn’t about replacing human effort. It’s about amplifying it. Machine translation doesn’t write Wikipedia. People do. But now, they have a tool that lets them start faster, build broader, and reach farther.

Frequently Asked Questions

Can machine translation replace human editors on Wikipedia?

No. Machine translation creates drafts, but human editors are still needed to adapt content, fix cultural misunderstandings, add local context, and verify facts. The tool speeds up the process, but quality comes from people.

Which languages benefit most from machine translation on Wikipedia?

Languages with existing digital content, such as Tamil, Bengali, Vietnamese, and Swahili, see the biggest gains. These languages have enough training data for translation models to work well. Languages with little online presence, like Quechua or Ainu, still struggle unless communities actively contribute text.

How accurate is Wikipedia’s machine translation?

Accuracy varies by language. For major languages like Spanish or French, it’s around 85 to 90%. For languages with less data, it can drop to 60 to 70%. But editors don’t need perfection; they need a usable starting point. Most translated articles are edited before publication, which boosts quality significantly.

Is machine translation used only for translating from English?

No. While English is the most common source, editors can translate from any language to any other. For example, a Spanish speaker might translate from Spanish to Quechua, or a Hindi speaker might translate from Hindi to Gujarati. Machine translation is available for over 150 language pairs; where no engine covers a pair, editors can still use the tool and translate by hand.

How do volunteers help improve translation quality?

Volunteers contribute texts such as textbooks, local news, and oral histories to train translation models. They also review and correct translations, create new terminology for missing words, and build glossaries. In 2024, community efforts improved Quechua translation accuracy by 38% using just 12,000 new sentences.