Wikipedia is supposed to be the free encyclopedia anyone can edit, but that openness also makes it vulnerable to hidden agendas. You’ve probably seen articles that feel off: one-sided, overly enthusiastic about a person or idea, or packed with obscure claims that don’t show up in reliable sources. These aren’t accidents. They’re signs of POV pushing and original research, two of the most common ways Wikipedia’s neutrality gets broken.

What POV Pushing Really Looks Like

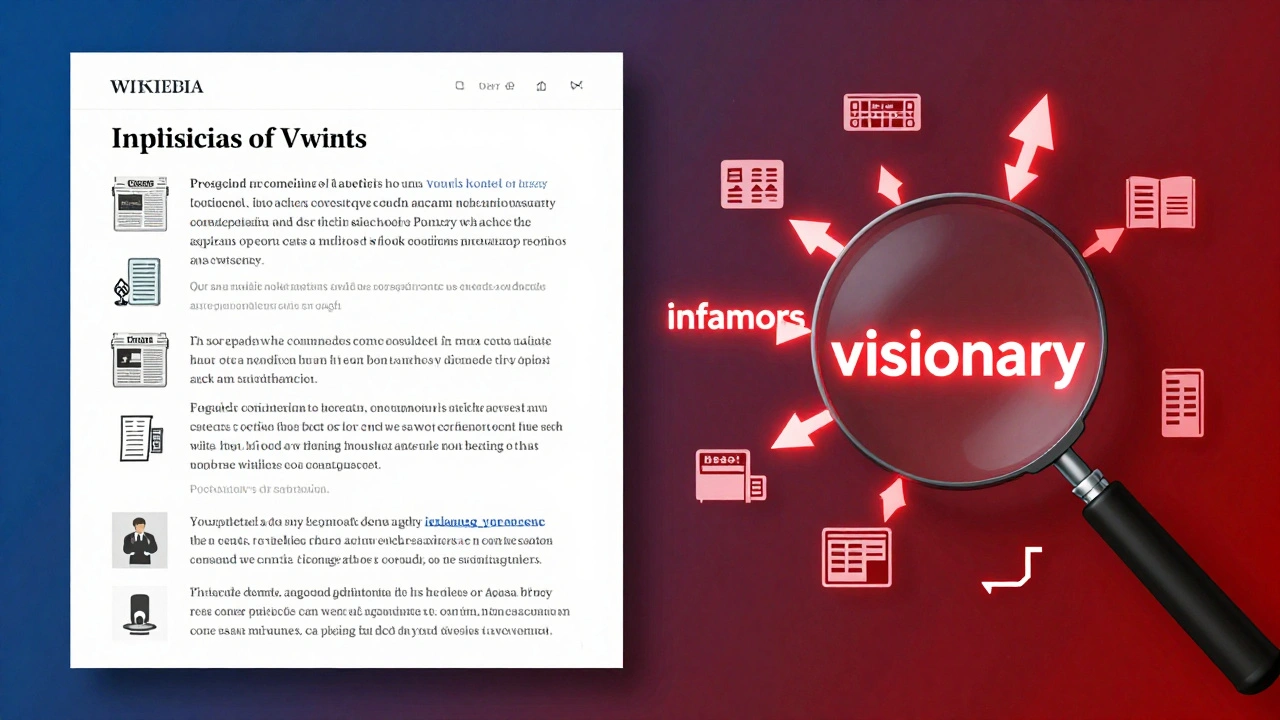

POV pushing (short for point-of-view pushing) is when someone edits Wikipedia to favor a specific perspective, often to promote an agenda, defend a brand, or smear an opponent. It doesn’t always mean outright lies. Sometimes it’s subtle: highlighting one source while ignoring ten others, using loaded language like "infamous" or "visionary," or burying contradictory facts in footnotes.

Take a page about a controversial politician. If the article spends three paragraphs on a single, fringe accusation from a partisan blog but only mentions their policy achievements in one sentence, that’s POV pushing. If the article calls them "a divisive figure" without citing any neutral analysis, but describes their opponent as "a pragmatic leader" using quotes from their campaign website, that’s also POV pushing.
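Loaded language is the easiest of these signals to check mechanically. The snippet below is a minimal sketch, not a Wikipedia tool: the function name `flag_loaded_words` is hypothetical, and the word list is an assumption built only from the examples in this article. A real check would need a much longer list and human judgment about context.

```python
import re

# Illustrative word list taken from the examples in this article (an assumption,
# not an official Wikipedia list).
LOADED = {"infamous", "visionary", "divisive", "pragmatic"}

def flag_loaded_words(text, loaded=LOADED):
    """Return the loaded words found in `text`, in order of first appearance."""
    seen = []
    for word in re.findall(r"[a-z]+", text.lower()):
        if word in loaded and word not in seen:
            seen.append(word)
    return seen

# Hypothetical sentence written for this example.
sentence = "The visionary founder outmaneuvered his infamous, divisive rival."
flags = flag_loaded_words(sentence)
print(flags)
```

A hit is only a prompt to look closer. A loaded word that is quoted and attributed to a reliable source may be perfectly acceptable.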

Wikipedia’s policy says neutral point of view (NPOV) means representing all significant views fairly, proportionally, and without editorial bias. That means if 70% of reliable sources describe a person as effective, the article should reflect that, not just quote the 30% who call them corrupt. The problem? Many editors don’t check sources. They just copy what they’ve read elsewhere, often from blogs, opinion pieces, or social media.
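The 70/30 example can be turned into a rough arithmetic check: compare each view’s share of the reliable sources with its share of the article text. The sketch below assumes you have already tallied both by hand. The function name `weight_gap` and the sample numbers are hypothetical, and counting sources is a crude stand-in for the judgment that proportional coverage actually requires.

```python
def weight_gap(source_counts, word_counts):
    """Compare each view's share of sources with its share of article text.

    Both arguments map a view label to a count. The result maps each view to
    (share of text - share of sources); a positive value means over-covered.
    """
    total_sources = sum(source_counts.values())
    total_words = sum(word_counts.values())
    if total_sources == 0 or total_words == 0:
        raise ValueError("need at least one source and one word of text")
    gaps = {}
    for view in source_counts:
        source_share = source_counts[view] / total_sources
        text_share = word_counts.get(view, 0) / total_words
        gaps[view] = round(text_share - source_share, 2)
    return gaps

# Hypothetical tally: 7 of 10 reliable sources call the person effective,
# yet the article gives that view only 100 of its 500 words.
sources = {"effective": 7, "corrupt": 3}
words = {"effective": 100, "corrupt": 400}
gaps = weight_gap(sources, words)
print(gaps)
```

Here the "corrupt" view gets 80% of the text on 30% of the sources, a gap of 0.5, which is the kind of mismatch worth raising on the talk page.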

Original Research: When Editors Become Authors

Original research is when an editor adds facts, analysis, or conclusions that no published, reliable source actually states. It sounds simple, but it’s one of the most dangerous violations on Wikipedia. You might think, "I read this in three places, so I’ll put it together." If the combined conclusion appears in none of those places, that’s still original research.

Here’s how it happens: An editor reads a study on climate change, reads a news article summarizing it, and then writes a new paragraph combining both with their own interpretation. They add a claim like, "This suggests that rising temperatures will cause a 40% drop in crop yields by 2030." But that exact number isn’t in either source. The study said "up to 35%," and the article didn’t mention 2030. Now you’ve got a made-up statistic on Wikipedia.
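One quick way to catch this kind of drift is to check whether every number in a claim actually appears in the sources it cites. The following is a minimal sketch under stated assumptions: `unsupported_numbers` is a hypothetical helper, and the two source sentences are invented stand-ins that mirror the scenario above (the study says "up to 35%", the news article gives no year).

```python
import re

# Matches integers, decimals, and percentages such as "35%", "2030", "1.5".
NUMBER = re.compile(r"\d+(?:\.\d+)?%?")

def unsupported_numbers(claim, sources):
    """Return numbers in `claim` that appear in none of the `sources` texts."""
    found = set()
    for text in sources:
        found.update(NUMBER.findall(text))
    return [n for n in NUMBER.findall(claim) if n not in found]

# Invented example texts mirroring the scenario described above.
claim = "Rising temperatures will cause a 40% drop in crop yields by 2030."
study = "Yields could fall by up to 35% under the high-emissions scenario."
news = "A new study warns of steep crop losses as temperatures rise."

missing = unsupported_numbers(claim, [study, news])
print(missing)
```

Both "40%" and "2030" come back as unsupported. A match does not prove the claim is sourced correctly, since the same number can appear in a different context, but a miss is a strong hint that something was added along the way.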

Original research also includes unpublished analysis. For example, an editor might take five unrelated Wikipedia articles about different tech companies and conclude that "all major tech firms are now monopolies." That’s not a published finding. It’s their opinion dressed up as fact. Wikipedia doesn’t allow editors to be the first to make connections, draw conclusions, or synthesize data. Only published, reliable sources can be used.

How to Spot These Problems in Practice

You don’t need to be an expert to catch POV pushing or original research. Here’s how to check:

- Check the sources. Click every citation. Are they from reputable outlets? Academic journals, major newspapers, books from established publishers? Or are they personal blogs, forums, YouTube videos, or press releases?

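- Look for balance. Does the article give each view weight in proportion to its coverage in reliable sources? Balance doesn’t mean equal space for every position. But if one side gets 80% of the space and a view that is well represented in the sources gets a footnote, that’s a red flag.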

- Find the original source. If a claim sounds very specific, such as "a 2023 study found X," search for that study. If you can’t find it, treat the claim as unverified: it may be misquoted, exaggerated, or made up.

- Read the talk page. Every Wikipedia article has a "Talk" tab. That’s where editors argue about edits. If you see phrases like "this is common knowledge" or "everyone knows this," that’s a sign someone’s ignoring policy.

- Check edit history. Click "View history" and look for repeated edits by the same user adding the same biased phrasing. If one person keeps reverting changes that remove biased language, they’re likely pushing a POV.
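The edit-history check can be partly automated. The sketch below builds a request URL for the MediaWiki Action API (`action=query` with `prop=revisions`) and counts how often each user’s edit summaries look like reverts. Several things here are assumptions: the helper names, the threshold of three, the revert markers (based on the default "Undid revision" and "Reverted edits" summaries), and the sample records, which are invented. The code does not make a network request; it only shows the shape of the analysis.

```python
from collections import Counter
from urllib.parse import urlencode

API = "https://en.wikipedia.org/w/api.php"

def history_url(title, limit=50):
    """Build a MediaWiki Action API URL for an article's recent revisions."""
    params = {
        "action": "query",
        "prop": "revisions",
        "titles": title,
        "rvprop": "user|comment|timestamp",
        "rvlimit": limit,
        "format": "json",
    }
    return API + "?" + urlencode(params)

# Assumed markers: default undo and rollback summaries contain these phrases.
REVERT_MARKERS = ("undid revision", "reverted")

def frequent_reverters(revisions, threshold=3):
    """Return {user: revert count} for users at or above `threshold`."""
    counts = Counter(
        rev["user"]
        for rev in revisions
        if any(m in rev.get("comment", "").lower() for m in REVERT_MARKERS)
    )
    return {user: n for user, n in counts.items() if n >= threshold}

# Invented revision records shaped like the API's "revisions" entries.
sample = [
    {"user": "CoinFan42", "comment": "Undid revision 101 by EditorA"},
    {"user": "EditorA", "comment": "remove unsourced claim"},
    {"user": "CoinFan42", "comment": "Undid revision 103 by EditorA"},
    {"user": "EditorB", "comment": "Reverted edits by CoinFan42"},
    {"user": "CoinFan42", "comment": "Undid revision 105 by EditorB"},
]

suspects = frequent_reverters(sample)
print(history_url("Example"))
print(suspects)
```

A high revert count is not proof of POV pushing, since editors who clean up vandalism revert constantly. Treat the output as a list of diffs to read, not a verdict.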

For example, the Wikipedia page for a small cryptocurrency once claimed it was "the most secure digital currency in the world." That claim had no citation. A user added a reference to a blog post written by the coin’s founder. Another editor removed it. The edit war lasted three weeks. Eventually, the claim was deleted because it violated both NPOV and original research rules.

Why This Matters Beyond Wikipedia

People trust Wikipedia. A 2023 study by the Pew Research Center found that 68% of U.S. adults use Wikipedia to get quick facts. Many students cite it in papers. Journalists pull quotes from it. If Wikipedia is full of biased or invented content, that misinformation spreads.

Imagine a student writing a report on mental health treatments. They find a Wikipedia article that says "cognitive behavioral therapy is ineffective for depression," citing a single Reddit thread and a self-published book. They cite it. Their teacher grades it. The myth gets reinforced. That’s the ripple effect.

Wikipedia’s strength is its community. But that community only works if people follow the rules. When editors ignore NPOV and original research policies, they don’t just break Wikipedia. They break trust in knowledge itself.

What You Can Do

You don’t have to be a Wikipedia editor to help fix this. Here’s what you can do right now:

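- Flag suspicious edits. Wikipedia has no one-click "report" button for biased edits. Instead, open the article’s "View history" tab and compare revisions to find the change that looks biased or unsupported. Then raise it on the article’s talk page, quoting the passage and explaining the problem. For disputes that stall, editors can take the issue to the Neutral point of view noticeboard or the No original research noticeboard.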

- Use the talk page. If you’re unsure about a claim, add a comment on the article’s talk page: "This claim needs a reliable source. Can someone find a peer-reviewed study?" Most editors will respond.

- Don’t edit blindly. If you want to improve an article, don’t just rewrite it. Find a reliable source first. Cite it. Then make your edit.

- Teach others. Show a friend how to check citations. Explain why Wikipedia isn’t a source: it’s a summary of sources.

Wikipedia isn’t perfect. But it’s the closest thing we have to a global public library. And like any library, it only works if people respect the rules. Spotting POV pushing and original research isn’t about being a nitpicker. It’s about protecting the integrity of shared knowledge.

Common Misconceptions

People often misunderstand Wikipedia’s rules. Here are the biggest myths:

- Myth: "If it’s on Wikipedia, it must be true." Reality: Anyone can edit. Just because it’s there doesn’t mean it’s correct.

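- Myth: "I read it on three blogs, so it’s reliable." Reality: Repetition doesn’t make a claim reliable. Blogs are generally not reliable sources. The main exception is self-published work by an established expert writing in their own field, and even that is used with caution.

- Myth: "I’m just summarizing what I learned." Reality: Summarizing published, reliable sources in your own words is exactly what editors are supposed to do. Summarizing your personal knowledge, experience, or conclusions is not. Without a published source behind it, your summary counts as original research.

- Myth: "This is common knowledge." Reality: Anything challenged or likely to be challenged needs a citation to a reliable source. "Everyone knows this" is not a citation.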

What’s the difference between POV pushing and bias?

Bias is a general tendency to favor one side, and every editor has some. POV pushing is the active, repeated attempt to insert that bias into Wikipedia through edits. All POV pushing involves bias, but bias only becomes POV pushing when someone deliberately and persistently works it into articles.

Can I use Wikipedia as a source for my research?

No. Wikipedia is a tertiary source-it summarizes information from primary and secondary sources. Use it to find leads, then go to the original sources cited in its references. Academic guidelines and most journals require you to cite the original source, not Wikipedia.

How do I know if a source is reliable?

Reliable sources are typically peer-reviewed journals, books from academic publishers, major newspapers (like The New York Times, BBC, or Le Monde), and government or university websites. Avoid blogs, personal websites, forums, and press releases unless they’re from a highly reputable organization.
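As a first pass, citation URLs can be sorted by domain before you read them. The sketch below is a rough triage under loud assumptions: the function name `triage` and both domain lists are illustrative, drawn from the examples in this article rather than from any Wikipedia policy, and the `.gov`/`.edu` rule only covers U.S.-style government and university sites. A domain never settles reliability by itself; opinion columns appear in major newspapers, and experts sometimes publish on blogs.

```python
from urllib.parse import urlparse

# Illustrative lists only (assumptions, not Wikipedia policy).
LIKELY_OK = ("nytimes.com", "bbc.com", "bbc.co.uk", "lemonde.fr")
LIKELY_WEAK = ("reddit.com", "youtube.com", "blogspot.com",
               "wordpress.com", "medium.com")

def triage(url):
    """Give a rough first-pass label for a citation URL based on its host."""
    host = (urlparse(url).hostname or "").lower()

    def matches(domains):
        # True if host is one of the domains or a subdomain of one.
        return any(host == d or host.endswith("." + d) for d in domains)

    if matches(LIKELY_WEAK):
        return "check carefully"
    if matches(LIKELY_OK) or host.endswith((".gov", ".edu")):
        return "probably fine"
    return "unknown"

# Hypothetical citation URLs.
labels = [triage(u) for u in (
    "https://www.nytimes.com/",
    "https://example.blogspot.com/post",
    "https://www.nih.gov/",
    "https://example.org/",
)]
print(labels)
```

Anything labeled "unknown" or "check carefully" still needs a human to open the link and judge who wrote it and whether anyone reviewed it.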

Why doesn’t Wikipedia just delete bad edits automatically?

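Automated tools already catch a lot. Anti-vandalism bots such as ClueBot NG revert obvious vandalism, often within moments. What they can’t do reliably is understand context. A biased edit might look factual to a bot. It takes human editors to spot subtle language, missing context, or misused sources. That’s why community vigilance matters more than automation.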

What happens to editors who keep pushing POV or doing original research?

Repeated violations lead to warnings, temporary blocks, and eventually indefinite blocks or bans. Wikipedia has a formal dispute resolution process. Editors who ignore policies and harass others are removed, not because they’re "wrong," but because they break the system that keeps Wikipedia neutral.

Next Steps for Better Wikipedia Use

If you use Wikipedia regularly, make it a habit to check citations before accepting any claim. Bookmark the Wikipedia page on reliable sources. When you find a biased article, don’t just walk away-add a note on the talk page. If you’re a student, teacher, or journalist, teach others how to read Wikipedia critically. Knowledge isn’t neutral by default. It’s neutral because people care enough to make it so.