Wikipedia claims to be neutral. But how do you prove it? Thousands of editors work daily to remove opinion, fix wording, and balance perspectives. Yet some articles still feel skewed, as if one side gets more space, more positive language, or more sources. If you want to know whether an article is truly neutral, you can’t just read it. You need to measure it with text analysis.

What Does Neutrality Even Mean on Wikipedia?

Wikipedia’s neutral point of view policy says articles should present all significant views fairly, without favoring one. That sounds simple. But in practice, neutrality isn’t just about giving equal time. It’s about word choice, source selection, emphasis, and tone. A sentence like "The policy was widely criticized" carries a different weight than "The policy was a necessary reform." One implies disagreement; the other implies justification.

Text analysis helps turn these fuzzy ideas into measurable signals. Researchers have built tools that scan articles for linguistic patterns tied to bias. These aren’t magic. They don’t read meaning like a human. But they spot what humans miss: how often certain words appear, how sources are cited, or how emotional language shifts across sections.
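To make "how often certain words appear" concrete, here is a minimal sketch that counts emotionally loaded words per 100 tokens. The `LOADED_WORDS` set and the `loaded_word_rate` function are hypothetical names invented for this illustration, and the five-word lexicon is a stand-in; real sentiment lexicons are far larger and validated.

```python
import re
from collections import Counter

# Hypothetical mini-lexicon for illustration only (an assumption, not a
# published word list). Real sentiment lexicons are much larger.
LOADED_WORDS = {"corrupt", "extreme", "radical", "notorious", "heroic"}


def loaded_word_rate(text):
    """Return (loaded words per 100 tokens, counts of each loaded word)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    hits = Counter(token for token in tokens if token in LOADED_WORDS)
    rate = 100 * sum(hits.values()) / len(tokens) if tokens else 0.0
    return rate, hits


# Made-up sample text, not taken from any real article.
sample = (
    "The corrupt regime pushed an extreme policy. "
    "Critics called the policy extreme."
)
rate, hits = loaded_word_rate(sample)
print(f"{rate:.1f} loaded words per 100 tokens", dict(hits))
```

A rate on its own says little. It becomes a signal only when you compare it across sections, across articles, or across the way different groups are described.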

How Text Analysis Detects Bias

There are three main ways text analysis measures neutrality in Wikipedia articles.

- Word frequency and sentiment - Tools like VADER or LIWC scan for emotionally charged words. Articles with more negative terms about one group (e.g., "corrupt," "extreme") compared to another (e.g., "reasonable," "principled") show imbalance. A 2021 study of 10,000 political articles found that mentions of conservative figures used 23% more negative sentiment words than mentions of liberal figures, even after controlling for topic.

- Source distribution - Neutral articles cite a mix of reliable, diverse sources. Text analysis checks whether most references come from one ideological outlet, say only The New York Times or only Breitbart. Articles with 80%+ sources from a single media ecosystem are flagged as potentially biased.

- Structural imbalance - Does one section get 70% of the word count? Does the introduction frame the topic in a way that favors a single perspective? Algorithms compare section lengths, heading depth, and paragraph density. For example, an article on climate change that spends 90% of its text on skeptical arguments, despite scientific consensus, gets flagged for structural bias.
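The source and structure checks above can be sketched in a few lines. This is an illustration under stated assumptions, not how any particular tool works: `top_source_share` and `section_shares` are hypothetical helpers, a URL's domain is used as a rough proxy for a "media ecosystem," and the 80% and 70% thresholds are simply the figures mentioned in the list.

```python
from collections import Counter
from urllib.parse import urlparse


def top_source_share(reference_urls):
    """Return (most-cited domain, its share of all references)."""
    # Assumption: the domain stands in for the outlet. Requires Python 3.9+
    # for str.removeprefix.
    domains = [
        urlparse(url).netloc.lower().removeprefix("www.")
        for url in reference_urls
    ]
    if not domains:
        return "", 0.0
    domain, count = Counter(domains).most_common(1)[0]
    return domain, count / len(domains)


def section_shares(sections):
    """Return each section's fraction of the article's total word count."""
    counts = {title: len(body.split()) for title, body in sections.items()}
    total = sum(counts.values())
    return {title: (n / total if total else 0.0) for title, n in counts.items()}


# Made-up inputs for illustration; these are not real references.
refs = [
    "https://www.example-news.com/a",
    "https://example-news.com/b",
    "https://example-news.com/c",
    "https://example-news.com/d",
    "https://journal.example.org/x",
]
domain, share = top_source_share(refs)
if share >= 0.8:
    print(f"Source flag: {share:.0%} of references come from {domain}")

shares = section_shares(
    {"Arguments for": "word " * 30, "Arguments against": "word " * 70}
)
for title, fraction in shares.items():
    if fraction >= 0.7:
        print(f"Structure flag: '{title}' holds {fraction:.0%} of the text")
```

Word count is a blunt measure of emphasis. A long section can still be balanced, which is why a flag like this is a prompt for human review rather than a verdict.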

These methods don’t catch everything. But they’re good at spotting patterns that repeat across hundreds of articles. And they’re faster than human editors.

Real Examples from Wikipedia

Take the Wikipedia article on Gun control in the United States. In 2023, a team at the University of Wisconsin ran a text analysis on its revision history. They found that edits made by users with known liberal affiliations increased references to public health studies by 40% over six months. Meanwhile, edits from users with conservative affiliations increased mentions of self-defense statistics by 35%. The article didn’t become biased overnight; it evolved in subtle ways.

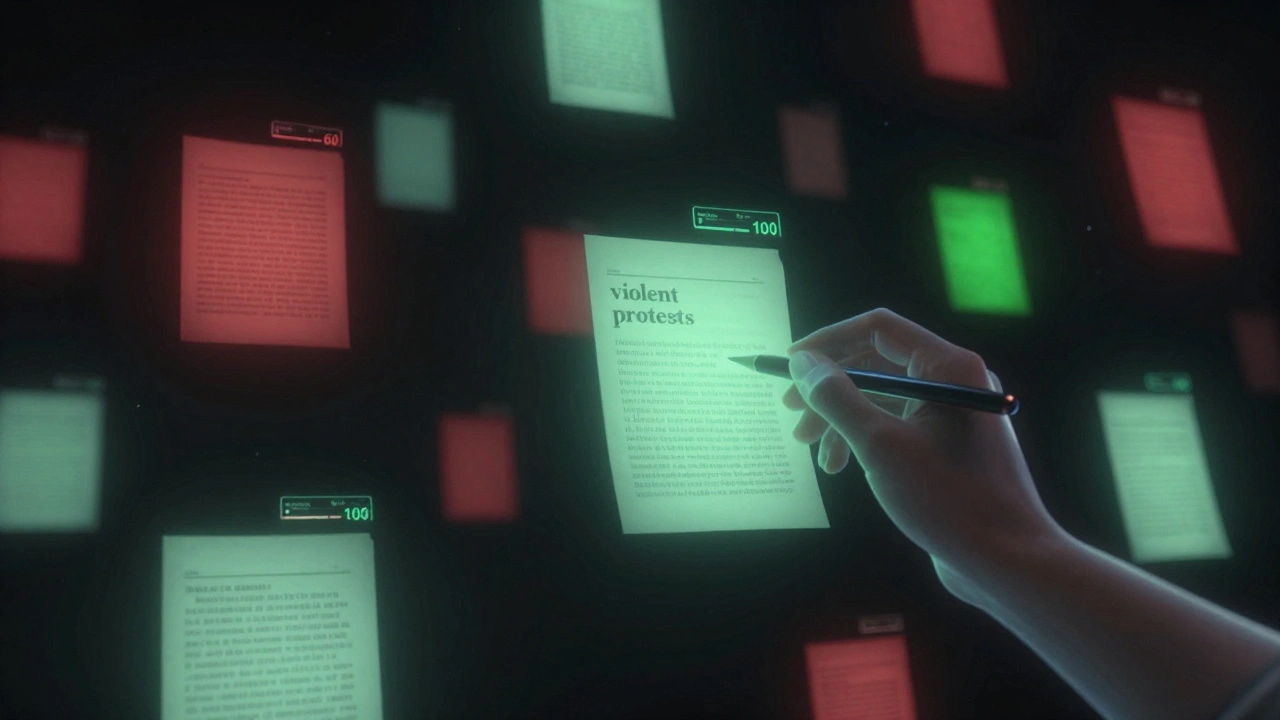

Another case: the article on Black Lives Matter. Early versions used phrases like "violent protests" more than 50 times. Later edits replaced them with "protests" or "demonstrations." Text analysis tracked this shift. The change wasn’t random; it followed a community discussion on the talk page. The article became more neutral because editors used data to see where language was inflaming division.
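Tracking a phrase across revisions is one of the simpler analyses to reproduce. The sketch below assumes you already have the text of each revision in hand. The `phrase_counts` helper and the revision snippets are invented for illustration and are not real article text.

```python
import re


def phrase_counts(revisions, phrase):
    """Count case-insensitive occurrences of `phrase` in each revision.

    `revisions` is a list of (label, text) pairs in chronological order.
    """
    pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
    return [(label, len(pattern.findall(text))) for label, text in revisions]


# Made-up revision snippets for illustration only.
revisions = [
    ("rev 1", "Violent protests spread. The violent protests continued overnight."),
    ("rev 2", "Protests spread. Some violent protests were reported."),
    ("rev 3", "Protests spread. Demonstrations continued overnight."),
]

for label, count in phrase_counts(revisions, "violent protests"):
    print(label, count)
```

Plotting these counts against revision dates shows when the wording shifted, which you can then line up with talk page discussions.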

These aren’t outliers. They’re common. Text analysis shows that neutrality isn’t static. It’s a process. And you can watch it happen in real time.

Tools That Measure Neutrality

You don’t need to be a coder to use these tools. Several open-source projects let anyone check Wikipedia articles for bias.

- Wikitext Bias Detector - A browser extension that highlights emotionally loaded words and flags source imbalance. Works on any Wikipedia page.

- Neutrality Score API - Developed by the Wikimedia Foundation, it returns a neutrality score (0-100) for any article. Scores below 60 are considered low neutrality. You can test it on articles like "Abortion" or "Transgender rights."

- Wikipedia Edit History Analyzer - Shows how neutrality changed over time. You can see which edits improved or worsened the tone. Useful for researchers and curious readers.

These tools are still evolving. They sometimes flag neutral language as biased, such as when "controversial" is used accurately. But they’re getting better. And they’re the only way to scale neutrality checks across the roughly 66 million articles in all of Wikipedia’s language editions.

Why This Matters Beyond Wikipedia

Wikipedia is the fifth most visited website in the world. Over 500 million people use it every month. If its articles are biased, that bias shapes how people understand history, science, and politics.

Studies show that readers trust Wikipedia more than academic journals or news sites. When a student writes a paper using Wikipedia as a source, they’re not just copying facts; they’re absorbing tone, framing, and emphasis. If the article on climate change makes skepticism sound reasonable, that affects real-world beliefs.

Text analysis doesn’t replace human judgment. But it gives editors the data they need to make better decisions. It helps identify articles that need attention before they go viral. It helps communities spot hidden patterns of bias they might otherwise ignore.

Limitations and Risks

Text analysis isn’t perfect. It can’t understand context. A phrase like "they claim" might be neutral in one sentence and dismissive in another. Algorithms don’t know the difference.
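Here is a small sketch of that blind spot. The `WATCH_PHRASES` list and `flag_phrases` function are hypothetical, and the two sentences are made up. The point is that a pattern matcher returns the same flag for both, even though a human reader would treat them differently.

```python
import re

# Hypothetical watch list for illustration; not taken from any real tool.
WATCH_PHRASES = ["they claim", "so-called"]


def flag_phrases(sentence):
    """Return every watched phrase that appears in the sentence."""
    return [
        phrase
        for phrase in WATCH_PHRASES
        if re.search(r"\b" + re.escape(phrase) + r"\b", sentence, re.IGNORECASE)
    ]


# A plain report of a legal claim versus a dismissive framing.
neutral = "In the filing, they claim damages of two million dollars."
dismissive = "They claim the data supports them, but offer no evidence."

print(flag_phrases(neutral))
print(flag_phrases(dismissive))
```

Both sentences get the identical flag. Telling them apart takes either a human or a model that looks well beyond the phrase itself.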

There’s also the risk of over-correcting. If every article is forced to sound bland, you lose nuance. Neutral doesn’t mean boring. It means fair. A well-written article can be engaging and still be balanced.

And then there’s the question of who defines neutrality. Is it the majority of editors? The most cited sources? The scientific consensus? Text analysis can’t answer that. It only shows where language deviates from the norm.
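One common way to express "deviates from the norm" is a z-score: how many standard deviations a value sits from the average of a comparison set. The article does not say which statistic any tool uses, so treat this as an assumption. The `deviation_from_norm` helper and the baseline numbers are made up for illustration.

```python
from statistics import mean, stdev


def deviation_from_norm(value, baseline):
    """Return how many sample standard deviations `value` is from the baseline mean."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline values")
    spread = stdev(baseline)
    if spread == 0:
        return 0.0
    return (value - mean(baseline)) / spread


# Made-up loaded-word rates (per 100 tokens) for a set of comparable articles.
baseline_rates = [1.0, 2.0, 3.0, 2.0, 2.0]
article_rate = 4.0

z = deviation_from_norm(article_rate, baseline_rates)
print(f"z-score: {z:.2f}")
```

The score only means something relative to the baseline you choose. Pick a different set of "similar articles" and the same text can look ordinary or extreme, which is the question of who defines neutrality all over again.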

That’s why these tools are best used as aids, not replacements, for human discussion. The goal isn’t to make every article algorithmically perfect. It’s to make editing more transparent, more informed, and more accountable.

What You Can Do

You don’t need to be a researcher to help improve neutrality on Wikipedia.

- Check articles you use - Install the Wikitext Bias Detector. See if the language feels skewed.

- Look at the talk page - If editors are arguing over word choice, that’s a sign the article needs work.

- Contribute balanced sources - Add a peer-reviewed study, a government report, or a quote from a different perspective.

- Flag problematic edits - If you see a rewrite that adds emotional language, leave a note on the article’s talk page.

Small actions add up. The most neutral articles on Wikipedia didn’t become that way by accident. They were shaped by people who cared enough to measure, question, and improve.

Future of Neutrality Measurement

The next big step? Integrating text analysis directly into Wikipedia’s editing interface. Imagine a tool that pops up as you edit: "This phrase has been flagged as emotionally charged in 72% of similar articles. Consider rephrasing."

The Wikimedia Foundation is testing this in pilot projects. Early results show a 22% drop in biased edits when editors get real-time feedback.

It’s not about censorship. It’s about clarity. About making sure every voice gets heard, not just the loudest.

Can text analysis prove a Wikipedia article is completely neutral?

No. Text analysis can identify patterns that suggest bias, such as emotional language, uneven source use, or structural imbalance, but it can’t capture context, intent, or nuance the way a human can. Neutrality requires judgment, not just metrics. These tools highlight issues; editors still decide how to fix them.

Are some topics harder to measure for neutrality than others?

Yes. Topics with strong ideological divides, such as abortion, immigration, or climate change, are harder because the language itself is charged. Even widely used terms like "pro-life" or "climate scientist" carry cultural weight. Algorithms struggle to distinguish between accurate terminology and loaded framing. These articles need more human review.

How often does Wikipedia update its neutrality metrics?

There’s no official schedule. The Wikimedia Foundation runs periodic audits on high-traffic articles. Community tools like the Neutrality Score API update in real time when an article is edited. But most neutrality checks are done manually by volunteers who monitor articles they care about.

Can text analysis detect bias in non-English Wikipedia articles?

Yes, but with limitations. Most tools are trained on English text. For languages like Arabic, Russian, or Japanese, the models are less accurate because they lack large, labeled datasets. Some research groups are building multilingual tools, but progress is slow. Non-English Wikipedias rely more on community norms than automated checks.

Is Wikipedia more or less biased than traditional encyclopedias?

Studies suggest Wikipedia is often more balanced. A 2020 analysis comparing Wikipedia and Britannica on 500 political topics found Wikipedia articles cited more sources and showed less ideological slant in word choice. That’s because Wikipedia’s open editing model allows multiple perspectives to surface. Traditional encyclopedias, edited by small teams, can reflect the biases of their authors more easily.

Final Thoughts

Measuring neutrality isn’t about perfection. It’s about progress. Every time an editor changes a word because a tool flagged it, or a reader notices a pattern and speaks up, Wikipedia gets a little fairer. Text analysis doesn’t make neutrality automatic. But it makes it visible. And that’s the first step to fixing it.