Wikipedia doesn’t aim to convince you. It doesn’t take sides. It doesn’t cheer for one theory over another. That’s not because it’s boring - it’s because its entire structure depends on staying neutral. The neutral point of view isn’t just a suggestion. It’s the rule that keeps Wikipedia from turning into a battlefield of opinions.

What Neutral Point of View Actually Means

Neutral Point of View (NPOV) doesn’t mean avoiding facts. It doesn’t mean saying "both sides" when one side is wrong. It means presenting all significant viewpoints fairly, proportionally, and without bias. If the overwhelming majority of climate scientists agree that recent climate change is human-caused, then Wikipedia says that. If a significant minority view has been published in reliable sources, it gets mentioned too, with context. A view held by only a tiny minority may not appear at all, except in an article devoted to that view.

Imagine you’re writing about the moon landing. NPOV doesn’t mean giving equal space to people who think it was faked. It means stating the overwhelming scientific consensus, then noting - briefly and with clear attribution - that a small number of people dispute it, and why. The difference is in the weight. The facts carry the load. The opinions get labeled.

Why NPOV Was Created

Wikipedia launched in January 2001, and neutrality was built in from the start. It was carried over from Wikipedia's predecessor, Nupedia, and NPOV became one of the project's earliest policies under Jimmy Wales and Larry Sanger. The rule was needed. Editors argued constantly. One person would write that a political figure was a "corrupt liar." Another would call them a "hero of the people." Without a shared standard, articles turned into edit wars.

They didn’t invent the idea. Journalists, scientists, and academics had long aimed for neutrality in their own work. But Wikipedia needed a simple, enforceable rule for volunteers with no training. NPOV became the glue holding together thousands of conflicting perspectives.

Today, editors hold every article to a basic NPOV test: Could someone from any side read it and say, "They didn’t twist this"? If not, the article gets tagged, edited, or, in heated cases, protected from editing.

How NPOV Works in Practice

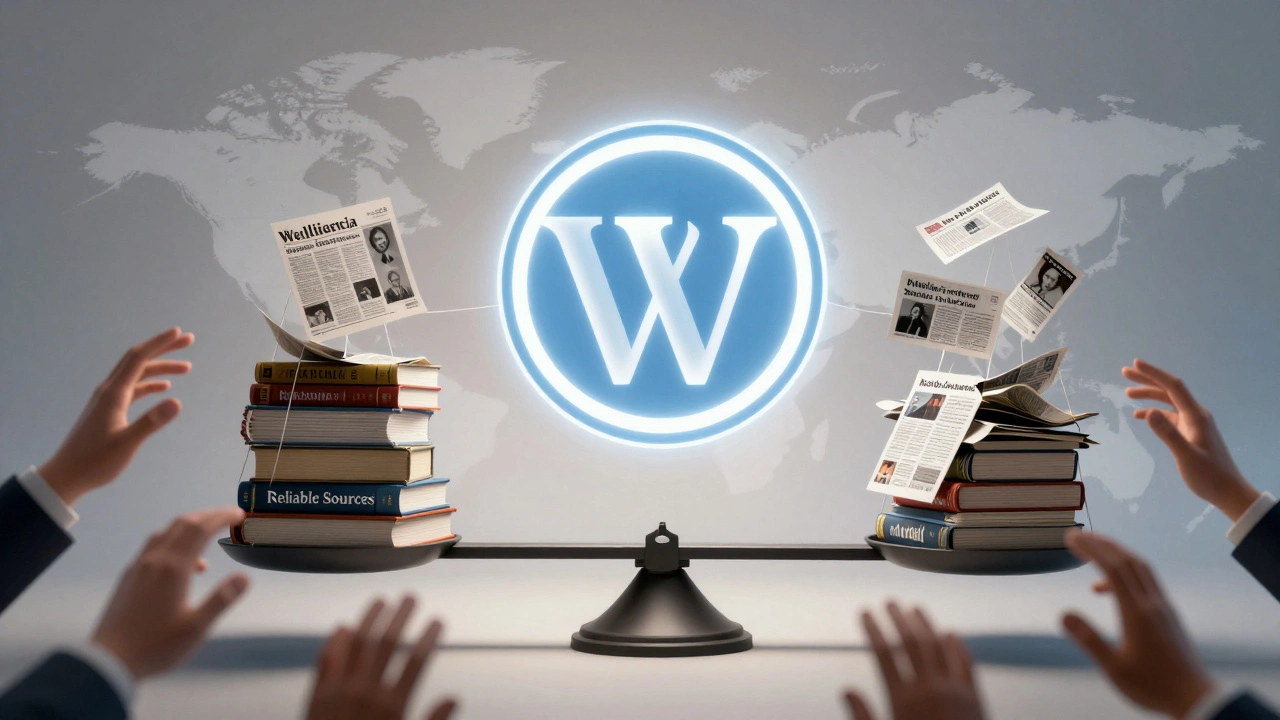

Wikipedia editors don’t decide what’s true. They report what reliable sources say. If a study appears in The Lancet, it counts. If a blog post claims the same thing without evidence, it doesn’t. Sources matter more than opinions.

Here’s how it plays out:

- A controversial scientist claims a new cancer cure. If their work hasn’t been peer-reviewed or published in a reputable journal, Wikipedia won’t highlight it as a breakthrough - even if it’s trending on social media.

- A religious group believes the Earth is about 6,000 years old. Wikipedia states that the belief exists, explains its basis in the group's religious texts, and immediately follows it with the scientific consensus from geology, physics, and biology.

- A political figure is accused of fraud. Wikipedia reports the accusation, the source (e.g., a court filing or investigative report), and whether the claim was proven, dismissed, or pending.

The goal isn’t to silence voices. It’s to give them context. A fringe idea isn’t erased. It’s placed where it belongs: next to the evidence that outweighs it.

What NPOV Doesn’t Do

Many people misunderstand NPOV. Here’s what it’s not:

- Not balance for balance’s sake. You don’t give 50% of the space to a view held by 2% of experts. That’s false equivalence.

- Not silence about debunked claims. If a claim is widely debunked, Wikipedia says so clearly - "This claim has been repeatedly refuted by experts" - instead of pretending it’s still up for debate.

- Not neutrality about facts. Gravity exists. Vaccines work. The Holocaust happened. These aren’t opinions. NPOV doesn’t require you to pretend otherwise.

Wikipedia’s editors follow a simple rule: If it’s a fact backed by reliable sources, state it. If it’s a belief, attribute it. If it’s a disputed claim, show the evidence on both sides - but weight it by credibility.

The Tools That Enforce NPOV

Wikipedia doesn’t rely on volunteers’ good intentions alone. It has systems:

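- Verifiability policy: Any claim that is challenged or likely to be challenged must be backed by a published, reliable source. Self-published material, such as personal blogs, most YouTube videos, and anonymous forums, generally doesn’t qualify.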

- Reliable sources: Academic journals, major newspapers, books from reputable publishers. Wikipedia has a detailed guideline on what counts, plus a running list of frequently discussed sources.

- Dispute templates: If an article looks biased, editors tag it with {{NPOV}} or {{POV}}. The tag marks the article as disputed and sends the discussion to its talk page.

- Arbitration Committee: As a last resort in serious disputes, an elected panel of experienced editors can impose binding remedies, such as topic bans. It rules on editors’ conduct, not on what an article should say.

These aren’t perfect. Mistakes happen. But the system is designed to correct itself. On popular articles, a biased edit is often reverted within minutes, because many editors watch them closely.

Real Examples of NPOV in Action

Take the article on Abortion. It doesn’t say abortion is right or wrong. It states:

- How many procedures occur annually worldwide.

- Which countries allow it and under what conditions.

- What medical organizations say about its safety.

- What religious and political groups argue - and which sources they cite.

- How public opinion has shifted over time.

Every claim is sourced. Every viewpoint is attributed. No editorializing. No emotional language. Just facts, context, and attribution.

Another example: evolution and creationism. Wikipedia’s coverage explains the scientific theory of evolution in detail, citing peer-reviewed research. It then notes that some religious groups reject evolution and explains their arguments, with sources, without endorsing them.

What Happens When NPOV Breaks Down

When NPOV is ignored, Wikipedia suffers.

In 2018, the article on Gun Control in the United States was edited for months by users pushing extreme views. One side added unverified claims about crime rates. The other deleted all references to mass shootings. The article became a mess. Editors flagged it. A mediation team stepped in. They rewrote it using only verified data from the CDC, FBI, and academic studies. The final version? A calm, factual summary - no shouting, no spin.

That’s NPOV working as intended.

Why NPOV Matters Beyond Wikipedia

Wikipedia consistently ranks among the most visited websites in the world. Millions of people use it as their first source of information. If it were biased, it would distort public understanding.

But because it sticks to NPOV, it remains a rare space where opposing sides can find common ground - not because they agree, but because they can see how the other side thinks. That’s powerful.

Think of it this way: NPOV doesn’t make Wikipedia perfect. But it makes it trustworthy. And in a world full of noise, that’s worth something.

How You Can Help Maintain NPOV

You don’t need to be an expert to help. If you edit Wikipedia:

- Ask: "Is this claim supported by a reliable source?"

- Ask: "Am I stating a fact, or am I expressing an opinion?"

- Ask: "If someone disagrees with me, would they feel this is fair?"

Don’t delete opposing views. Don’t bury inconvenient facts. Don’t use loaded words like "radical," "fraud," or "legendary" unless they’re quoted from a source.

Wikipedia isn’t your blog. It’s a public record. Treat it like one.

Is Neutral Point of View the same as being unbiased?

Not exactly. Being unbiased means you have no opinions. NPOV accepts that editors have opinions - but requires them to set those aside when writing. The article must reflect what reliable sources say, not what the editor believes.

Can Wikipedia articles be completely neutral?

No article is perfectly neutral - humans write them. But NPOV creates a system that pushes articles toward neutrality over time. Through repeated edits, citations, and community review, bias gets corrected. The goal isn’t perfection. It’s progress.

Why doesn’t Wikipedia just delete false claims?

Wikipedia doesn’t remove claims just because they’re false. It removes claims that aren’t supported by any reliable source. If a false claim has been widely reported, such as a conspiracy theory covered by major news outlets, Wikipedia notes that it exists, cites reliable sources that describe it, and explains why it isn’t accepted. That’s transparency.

Do all languages on Wikipedia follow NPOV?

Yes. NPOV is one of the few principles that every language edition of Wikipedia is expected to follow. Each community enforces it in its own way, and cultural differences affect how neutrality is interpreted. Still, the core principle (cite sources, attribute opinions, avoid bias) is universal.

What happens if someone keeps editing an article to push their viewpoint?

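Repeated biased edits draw attention from the other editors watching the page. Automated tools also catch some disruptive patterns. Editors who persist can be warned, temporarily blocked, or banned from the topic. Serious cases can go to the Arbitration Committee. The system is designed to protect the article, not the editor.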

If you want to understand how knowledge is built in the digital age, look at Wikipedia’s Neutral Point of View. It’s not about silence. It’s about structure. It’s not about avoiding conflict - it’s about managing it so that truth, not outrage, wins.