If you're building a tool that pulls data from Wikipedia - whether it's a bot that updates articles, an app that shows fun facts, or a research script that analyzes edit patterns - you need to know one thing: Wikipedia doesn't want you to crush its servers. The Wikipedia API is free to use, but it comes with rules. Break them, and your tool gets blocked. Not warned. Not slowed. Blocked.

What Are Wikipedia API Rate Limits?

Rate limits are caps on how many requests your tool can make in a given time. Wikipedia sets these to keep the site running for everyone. If you send 10,000 requests per minute, you’re not helping - you’re hurting.

Wikipedia doesn’t publish a fixed requests-per-second quota for reading from its main API (api.php). The official etiquette guidance is simpler: send requests one at a time, waiting for each to finish before starting the next, rather than in parallel. The figures you’ll often see quoted, 500 and 5,000, aren’t requests per second at all. They’re per-request result limits: a normal account (or an anonymous client) can pull up to 500 items from a list query, while an account with bot rights can pull up to 5,000. Bigger batches are a privilege, not a green light to go wild.

Wikipedia doesn’t just care about numbers. They care about how you use them. A bot that fetches 100 pages in one second might be fine. A bot that fetches 100 pages, then waits 50 milliseconds, then repeats that for hours? That’s a problem. It’s called "hammering." And it’s against policy.

Why Courtesy Matters More Than Raw Speed

Wikipedia’s API policies are built on respect, not just rules. The community runs on volunteers. Servers and bandwidth are paid for by donations. You’re borrowing their infrastructure. Treat it like a library, not a free data dump.

Here’s what courtesy looks like in practice:

- Use a custom User-Agent header that identifies your bot and includes a contact email.

- Wait at least 100 milliseconds between requests, and never fire them in parallel, even when nothing technically stops you.

- Don’t request the same page repeatedly. Cache results locally.

- Use batch operations. Instead of asking for article A, then B, then C, ask for all three in one call using action=query with titles=A|B|C. A normal account can pass up to 50 titles per call; bot accounts can pass up to 500.

- Use the continue parameter for large data pulls. Don’t restart from the beginning if a request fails.
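Here is one way the courtesy rules above might look in Python, using only the standard library. It's a minimal sketch, not an official client: the bot name and contact URL in USER_AGENT are placeholders, the helper names polite_get, batches, and query_all are hypothetical, and the 50-title batch size assumes a normal (non-bot) account. Requests go out one at a time, at least 100 ms apart, and each response's continue object is merged into the next request so a large pull picks up where it left off.

```python
import json
import time
import urllib.parse
import urllib.request
from typing import Callable, Iterator, List

API_URL = "https://en.wikipedia.org/w/api.php"
# Placeholder identity: replace with your real bot name and contact details.
USER_AGENT = "ExampleFactBot/0.1 (https://example.org/factbot; factbot@example.org)"
MIN_INTERVAL = 0.1  # seconds between requests (the 100 ms rule)

_last_request = 0.0


def polite_get(params: dict) -> dict:
    """Send one GET to the action API, never sooner than MIN_INTERVAL after the last."""
    global _last_request
    wait = MIN_INTERVAL - (time.monotonic() - _last_request)
    if wait > 0:
        time.sleep(wait)
    query = urllib.parse.urlencode({**params, "format": "json", "formatversion": "2"})
    req = urllib.request.Request(f"{API_URL}?{query}", headers={"User-Agent": USER_AGENT})
    try:
        # Blocking call: the next request can't start until this one finishes.
        with urllib.request.urlopen(req, timeout=30) as resp:
            return json.load(resp)
    finally:
        _last_request = time.monotonic()


def batches(titles: List[str], size: int = 50) -> Iterator[List[str]]:
    """Split titles into groups small enough for one titles=A|B|C call."""
    for i in range(0, len(titles), size):
        yield titles[i:i + size]


def query_all(params: dict, fetch: Callable[[dict], dict] = polite_get) -> Iterator[dict]:
    """Yield every response page, feeding each 'continue' object into the next call."""
    cont: dict = {}
    while True:
        data = fetch({**params, "action": "query", **cont})
        yield data
        if "continue" not in data:
            break  # no continuation token: the result set is complete
        cont = data["continue"]
```

To use it, loop over batches(your_titles) and pass {"prop": "info", "titles": "|".join(group)} to query_all. The fetch argument exists so you can swap in a fake during testing, or a wrapper that adds retry logic.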

These aren’t suggestions. They’re requirements. In 2023, a university research team got their entire IP range blocked for 30 days because their script made 12,000 requests in 12 minutes. They weren’t malicious - they just didn’t know how to pace their calls.

Bot Accounts: The Only Way to Play Fair

If you’re doing anything beyond a few dozen requests a day, you need a bot account. Regular user accounts aren’t designed for automation. Bot accounts are. They’re flagged as automated tools, which lets the system treat them differently - and more leniently - but only if you follow the rules.

To get a bot account:

- Create a regular Wikipedia account.

- Go to Wikipedia:Bot policy and read it thoroughly.

- Submit a request at Wikipedia:Bots/Requests for approval with details: what your bot does, how often it runs, and why it’s useful.

- Wait for approval. It can take days.

Once approved, your bot gets higher rate limits and avoids accidental blocks. But here’s the catch: you’re accountable. If your bot edits incorrectly or causes disruption, it can be banned permanently. No second chances.

Common Mistakes That Get You Blocked

People think they’re being smart. They’re not.

- Using public proxies or cloud services. If you’re running your bot on AWS, Google Cloud, or a free proxy, you’re sharing an IP with hundreds of others. One person breaks the rules, everyone gets blocked. Use a dedicated server or VPS.

- Ignoring HTTP 429 errors. If you get a 429 Too Many Requests response, stop. Wait. Don’t retry immediately. Wait at least 10 seconds. Retry with exponential backoff.

- Fetching entire page histories. Don’t request the full edit history of 500 articles unless you absolutely need it. Use prop=revisions with rvlimit=1 to get only the latest edit of a single page; for a batch of titles, leave rvlimit out and the API returns just each page’s latest revision.

- Not checking robots.txt. The file at https://en.wikipedia.org/robots.txt tells you what paths are off-limits. Some APIs are restricted to logged-in users only. Don’t try to bypass that.

- Assuming the API is stable. Wikipedia changes its API over time, and parameters and endpoints get deprecated. Don’t hardcode old URLs, and watch for the deprecation notices the API returns in the warnings section of its responses.
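The 429 advice above translates into a small retry wrapper. This sketch assumes the standard-library urllib client; the 600-second cap and the six-attempt limit are arbitrary choices, not Wikipedia policy, and get_with_backoff and backoff_delay are hypothetical names. It doubles the wait after each 429, starting from the 10-second floor, and never waits less than a numeric Retry-After header asks for. Anything other than a 429, including a 403, is raised instead of retried.

```python
import time
import urllib.error
import urllib.request
from typing import Callable, Optional

BASE_DELAY = 10.0  # seconds: the "wait at least 10 seconds" rule
MAX_DELAY = 600.0  # assumption: cap any single wait at 10 minutes
MAX_ATTEMPTS = 6   # assumption: give up after six tries


def backoff_delay(attempt: int, retry_after: Optional[str] = None) -> float:
    """Seconds to wait after failed attempt number `attempt` (0-based)."""
    delay = min(MAX_DELAY, BASE_DELAY * 2 ** attempt)
    # Only the delay-in-seconds form of Retry-After is handled here; an
    # HTTP-date value falls back to the exponential schedule.
    if retry_after is not None and retry_after.strip().isdigit():
        delay = max(delay, float(retry_after.strip()))
    return delay


def get_with_backoff(req: urllib.request.Request,
                     sleep: Callable[[float], None] = time.sleep) -> bytes:
    """Fetch req, backing off exponentially on HTTP 429; other errors are raised."""
    for attempt in range(MAX_ATTEMPTS):
        try:
            with urllib.request.urlopen(req, timeout=30) as resp:
                return resp.read()
        except urllib.error.HTTPError as err:
            if err.code != 429:
                raise  # 403 (possible block) and other errors: stop, don't retry
            if attempt + 1 == MAX_ATTEMPTS:
                break  # no point sleeping before giving up
            sleep(backoff_delay(attempt, err.headers.get("Retry-After")))
    raise RuntimeError(f"still rate-limited after {MAX_ATTEMPTS} attempts")
```

You could pass it a Request for an action=query&prop=revisions&rvlimit=1 URL carrying your User-Agent header. The sleep parameter is only there so tests can skip the real waiting.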

In 2025, a popular Wikipedia-based trivia app was taken offline after 14 months of smooth operation. Why? They’d been using a deprecated endpoint that was quietly retired. No warning. No notice. Just a 404. They didn’t monitor API changelogs.

How to Monitor Your Usage

Wikipedia doesn’t give you a dashboard. You have to build your own tracking.

Log every request. Track:

- Timestamp of each call

- Response code (200, 429, 503)

- Time between requests

- Number of requests in the last 60 seconds

Set up alerts. If you hit 80% of your own per-minute budget, pause for 30 seconds. Use a simple queue system. Python’s queue.Queue can funnel every call through a single worker that enforces your delay, and Node.js’s bull library has a built-in rate limiter.
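The tracking list above fits in a small class. This is a sketch under assumptions: RequestMonitor is a hypothetical name, budget_per_minute is whatever ceiling you've chosen for your tool, and it uses a collections.deque as a sliding 60-second window rather than queue.Queue or bull. Call before_request() before each API call and record(status) after it; the log keeps the timestamp, status code, and gap since the previous request.

```python
import time
from collections import deque
from typing import Callable, Deque, List, Optional, Tuple


class RequestMonitor:
    """Logs every API call and pauses when the last 60 s nears a chosen budget."""

    def __init__(self, budget_per_minute: int, pause_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.budget = budget_per_minute
        self.pause_seconds = pause_seconds
        self.clock = clock
        self.sleep = sleep
        self.window: Deque[float] = deque()  # timestamps from the last 60 s
        # One entry per request: (timestamp, HTTP status, seconds since previous call)
        self.log: List[Tuple[float, int, Optional[float]]] = []

    def recent_count(self) -> int:
        """Number of requests made in the last 60 seconds."""
        now = self.clock()
        while self.window and now - self.window[0] > 60:
            self.window.popleft()
        return len(self.window)

    def before_request(self) -> None:
        """Pause for pause_seconds once 80% of the budget is used up."""
        if self.recent_count() >= 0.8 * self.budget:
            self.sleep(self.pause_seconds)

    def record(self, status: int) -> None:
        """Log a finished request's status code (200, 429, 503, ...)."""
        now = self.clock()
        gap = now - self.log[-1][0] if self.log else None
        self.log.append((now, status, gap))
        self.window.append(now)
```

In a single-threaded bot this is enough. If several threads share one monitor, you'd need to add a lock around both methods.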

Test your bot on the test wiki first. Use https://test.wikipedia.org, a separate wiki set aside for testing, where you can break things without consequences. Run your full script there. See how it behaves. Fix the issues. Then move to the real site.

What Happens When You Get Blocked?

If you’re blocked, you’ll see one of these:

- A 403 Forbidden error with a message like "You have been blocked from editing due to automated edits."

- A 429 Too Many Requests error with a Retry-After header telling you when to try again.

- No response at all - your requests just time out.

Don’t panic. Don’t spam support. Don’t try to bypass the block with a new IP. That makes it worse.

Instead:

- Stop all automated activity immediately.

- Review your code. Did you miss a delay? Are you fetching too much data?

- Check your User-Agent. Is it clear and contactable?

- Send an email to [email protected] with:

  - Your bot’s name

  - Your User-Agent string

  - When the block started

  - What you were trying to do

  - What you’ve changed to fix it

- Wait. It can take 2-7 days for a manual review.

Most blocks are lifted if you show you understand the problem. If you blame Wikipedia? You’re probably blocked forever.

Alternatives to Direct API Calls

If you’re still struggling with rate limits, consider these options:

- Wikidata: It’s the structured data backend for Wikipedia. It has higher rate limits and cleaner APIs. Use it for facts, not full articles.

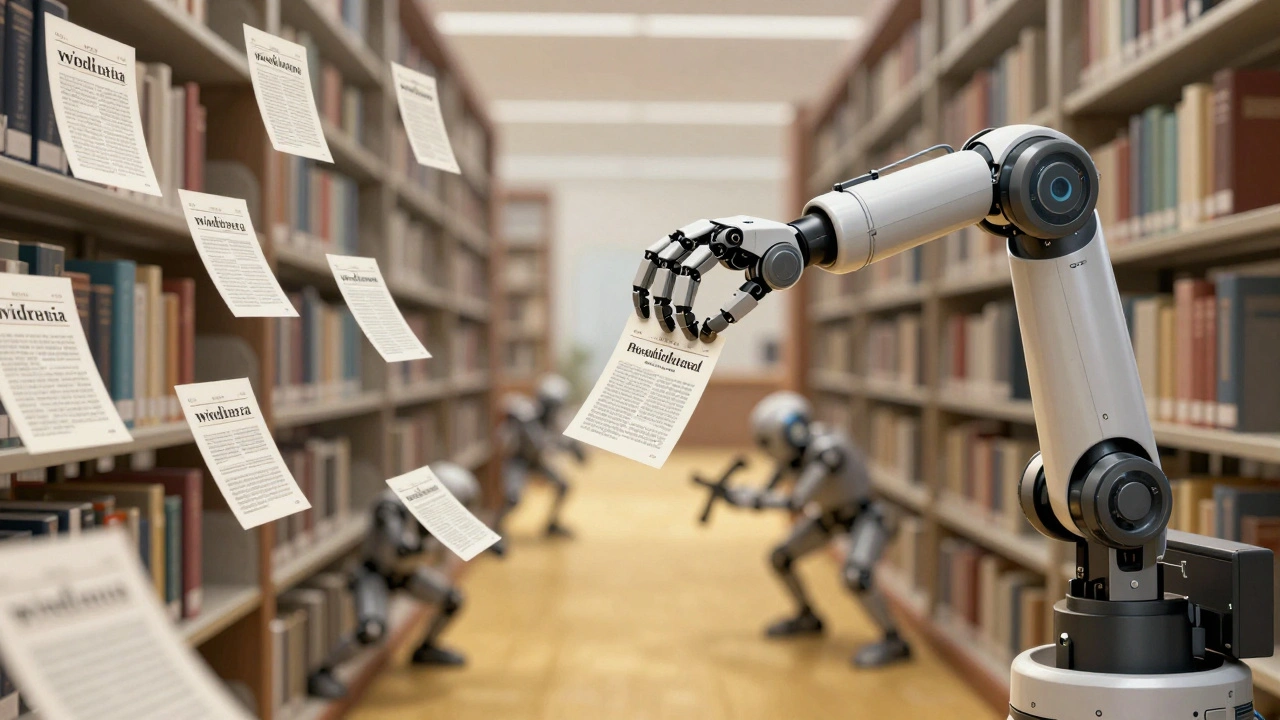

- Wikipedia dumps: Download the entire English Wikipedia (100+ GB) once a month. Parse it locally. No API calls needed.

- Third-party mirrors: Sites like MediaWiki API mirrors or academic caches may have looser limits - but always check their terms.

- Web scraping (with caution): If you only need a few pages, scraping HTML can be faster than API calls. But don’t scrape heavily. Follow robots.txt and respect delays.

Many research teams now use Wikipedia dumps. They download the data, index it locally with Elasticsearch, and query it offline. It’s slower to update, but zero risk of being blocked.
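The dump workflow above begins with reading a multi-gigabyte XML file without loading it all into memory. Here is a minimal standard-library sketch, assuming a bz2-compressed pages-articles dump (one revision per page); iter_pages and iter_dump are hypothetical names, and the Elasticsearch indexing step is left out. It uses xml.etree.ElementTree.iterparse and clears the tree after each page so memory use stays flat.

```python
import bz2
import xml.etree.ElementTree as ET
from typing import IO, Iterator, Optional, Tuple


def _local(tag: str) -> str:
    """Strip the '{namespace}' prefix MediaWiki export XML puts on every tag."""
    return tag.rsplit("}", 1)[-1]


def iter_pages(stream: IO[bytes]) -> Iterator[Tuple[Optional[str], str]]:
    """Yield (title, wikitext) for each <page> element, one page at a time."""
    context = ET.iterparse(stream, events=("start", "end"))
    _, root = next(context)  # the <mediawiki> root element
    title: Optional[str] = None
    text = ""
    for event, elem in context:
        if event != "end":
            continue  # element content is only complete at its end event
        name = _local(elem.tag)
        if name == "title":
            title = elem.text
        elif name == "text":
            text = elem.text or ""
        elif name == "page":
            yield title, text
            title, text = None, ""
            root.clear()  # drop finished pages so memory use stays flat


def iter_dump(path: str) -> Iterator[Tuple[Optional[str], str]]:
    """Stream pages straight out of a .xml.bz2 dump file."""
    with bz2.open(path, "rb") as f:
        yield from iter_pages(f)
```

Each (title, wikitext) pair can then go to whatever index you use; if you want plain text rather than wikitext, mwparserfromhell's strip_code() can handle that step first.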

Final Rule: Be a Good Neighbor

Wikipedia isn’t a company. It’s a community. The servers aren’t owned by a tech giant. They’re run by the nonprofit Wikimedia Foundation and funded mostly by small donations. Your bot isn’t just code - it’s a guest.

Slow down. Be polite. Document your work. Respect the limits. The API will be there tomorrow. But if you burn it out, it might not be there next week.

Build smart. Build slow. Build right.

What happens if I exceed the Wikipedia API rate limit?

You’ll get an HTTP 429 Too Many Requests error. Your requests will be blocked temporarily. If you keep pushing, your IP or bot account may be blocked for hours or days. Repeated violations can lead to permanent bans. Always wait and retry with exponential backoff.

Do I need a bot account to use the Wikipedia API?

No, you don’t need one for small, occasional use. But if you’re making more than 100 requests per hour, you should get one. Bot accounts get higher rate limits and are less likely to be accidentally blocked. They also show Wikipedia you’re serious about following the rules.

Can I use the Wikipedia API for commercial purposes?

Yes, as long as you follow the API terms and the CC BY-SA license for content. You can build apps, sell services, or use data in products. But you can’t claim ownership of Wikipedia content. Always credit Wikipedia and link back. And never overload the servers - even if you’re making money.

What’s the difference between Wikipedia and Wikidata APIs?

Wikipedia’s API returns full articles, page histories, and edit metadata. Wikidata’s API returns structured data - like facts, numbers, and relationships - in JSON format. Wikidata has higher rate limits and is easier to parse for automated systems. Use Wikidata for data; use Wikipedia for full articles.
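To see the difference in practice, here is a sketch of asking Wikidata for structured facts instead of article text. It assumes the wbgetentities action of the Wikidata API at https://www.wikidata.org/w/api.php; entity_url and label are hypothetical helpers, and the network call itself is left to whatever polite client you already use.

```python
import urllib.parse
from typing import List, Optional

WIKIDATA_API = "https://www.wikidata.org/w/api.php"


def entity_url(ids: List[str], lang: str = "en") -> str:
    """Build a wbgetentities URL that asks only for labels and claims."""
    params = {
        "action": "wbgetentities",
        "ids": "|".join(ids),        # several entities in one request
        "props": "labels|claims",    # skip sitelinks, descriptions, etc.
        "languages": lang,
        "format": "json",
    }
    return f"{WIKIDATA_API}?{urllib.parse.urlencode(params)}"


def label(response: dict, entity_id: str, lang: str = "en") -> Optional[str]:
    """Pull one entity's label out of a parsed wbgetentities response."""
    entity = response.get("entities", {}).get(entity_id, {})
    return entity.get("labels", {}).get(lang, {}).get("value")
```

The response is already structured JSON, so there's no wikitext to parse: each fact lives under the entity's claims, keyed by property ID.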

How often should I update my cached Wikipedia data?

It depends on how fresh your data needs to be. For most apps, updating once a day is enough. For real-time tools like news aggregators, update every 6-12 hours. Never poll more than once per hour unless you have a bot account and explicit permission. Use the latest revision ID (lastrevid from prop=info) or the revision timestamp in API responses to check whether content changed.
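Checking whether content changed can be cheap: one batched prop=info query returns each page's latest revision ID, and only pages whose ID differs from the cached one need re-downloading. This sketch assumes formatversion=2 responses and a cache keyed by the normalized page title the API returns; changed_titles is a hypothetical helper, and fetch stands in for any function that sends the parameters to api.php and returns parsed JSON.

```python
from typing import Callable, Dict, List


def changed_titles(titles: List[str], cache: Dict[str, dict],
                   fetch: Callable[[dict], dict]) -> List[str]:
    """Return titles whose latest revision ID differs from the cached one."""
    data = fetch({
        "action": "query",
        "prop": "info",  # page metadata, including lastrevid; no article text
        "titles": "|".join(titles),
        "format": "json",
        "formatversion": "2",  # pages come back as a list, flags as booleans
    })
    stale = []
    for page in data["query"]["pages"]:
        if page.get("missing"):
            continue  # page doesn't exist (any more); nothing to refresh
        cached = cache.get(page["title"])
        if cached is None or cached.get("lastrevid") != page["lastrevid"]:
            stale.append(page["title"])
    return stale
```

Run it on your refresh schedule, then re-fetch only the stale titles and store their new lastrevid alongside the content.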

Are there tools that help manage Wikipedia API usage?

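Yes. Pywikibot (Python) throttles requests and honors the maxlag parameter out of the box, and mwclient handles sessions and result continuation for you; mwparserfromhell parses wikitext safely. Lighter libraries like wikipedia-api for Python and wikidata-sdk for JavaScript help you build queries and read responses, but check whether they throttle before relying on them. Always use a well-maintained library - don’t write your own HTTP client from scratch.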