Bot tasks on Wikipedia: How automated tools keep the encyclopedia running

When you edit a Wikipedia article, you’re not alone. Behind the scenes are bot tasks: automated scripts that perform repetitive edits on Wikipedia without human input. Also known as Wikipedia bots, they fix broken links, revert vandalism, update templates, and even translate articles across languages. These aren’t sci-fi robots; they’re code, written by volunteers, running 24/7 on servers. Without them, Wikipedia would collapse under the weight of spam, errors, and updates that arrive too fast for humans to keep up with.

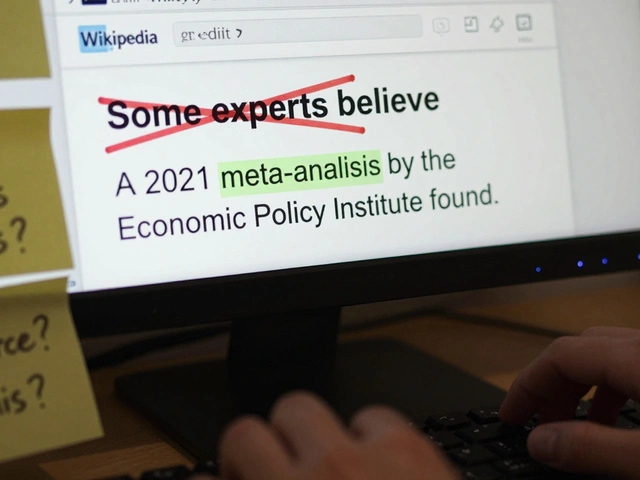

Bot tasks cover everything from the tiny, like correcting a missing comma in a citation, to the massive, like syncing data from public databases into thousands of infoboxes. One bot might fix hundreds of broken external links every hour. Another scans new pages for copyright violations. Some even patrol edit wars, rolling back conflicting changes until humans can sort things out. These tools don’t replace editors; they free them up to do the hard stuff: writing deep articles, debating policy, and building trust in content.

Automated editing, the use of software to make systematic changes to Wikipedia content, is tightly regulated. Every bot needs approval, a clear purpose, and ongoing oversight. The community doesn’t just tolerate bots; it requires them to be transparent, documented, and reversible.

Not all bot tasks are perfect. Sometimes they break things. A bot might misinterpret a template and overwrite useful text. Or it might accidentally delete a well-written section because it matched a pattern meant for spam. That’s why Wikipedia maintenance, the ongoing work of keeping articles accurate, well-sourced, and properly formatted, relies on humans watching the bots. There are dedicated pages where bot operators report activity and where editors can flag mistakes. It’s a partnership: machines handle scale, humans handle nuance.
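To make the false-positive problem concrete, here is a minimal sketch in Python of a pattern-based cleanup that proposes an edit instead of saving it, and asks for human review when it would remove a large share of the text. Everything in it is an assumption made for illustration: the `SPAM_HINT` pattern, the `propose_spam_removal` function, and the 50% threshold are hypothetical and do not describe how any real Wikipedia bot works.

```python
import re
from dataclasses import dataclass

# Hypothetical spam phrases; a real bot would use a reviewed, documented rule set.
SPAM_HINT = re.compile(r"\b(?:buy now|click here)\b", re.IGNORECASE)
# Naive sentence splitter: break on whitespace that follows ., ! or ?
SENTENCE_SPLIT = re.compile(r"(?<=[.!?])\s+")


@dataclass
class ProposedEdit:
    new_text: str        # text the bot would save
    removed: list        # sentences the bot would delete
    needs_review: bool   # True when a human should check before saving


def propose_spam_removal(text: str, max_removed_fraction: float = 0.5) -> ProposedEdit:
    """Propose removing sentences that match SPAM_HINT without applying the change.

    The edit is flagged for review when the removed sentences make up more than
    max_removed_fraction of the text, an assumed safety threshold.
    """
    sentences = SENTENCE_SPLIT.split(text.strip())
    kept = [s for s in sentences if not SPAM_HINT.search(s)]
    removed = [s for s in sentences if SPAM_HINT.search(s)]

    total = sum(len(s) for s in sentences)
    removed_chars = sum(len(s) for s in removed)
    needs_review = total > 0 and removed_chars / total > max_removed_fraction

    return ProposedEdit(" ".join(kept), removed, needs_review)


if __name__ == "__main__":
    # A legitimate sentence that happens to contain a spam phrase.
    edit = propose_spam_removal(
        "Early web pages often labelled links with the words click here."
    )
    print(edit.removed, edit.needs_review)
```

The pattern cannot tell an advertisement from a sentence that merely discusses the phrase, so the sketch returns a proposal with the removed sentences listed rather than overwriting anything. That is the "machines handle scale, humans handle nuance" split in miniature: the bot does the matching, and a person decides the doubtful cases.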

Bot tasks are especially vital in high-traffic areas like current events, where misinformation spreads fast. During elections, disasters, or breaking news, bots help lock down pages to prevent edit wars, update timelines, and flag unverified claims. They’re the silent first responders of Wikipedia’s ecosystem. And while you might never see them, their work shows up in every clean, reliable article you read.

What you’ll find below are real stories about how these tools work, the debates around their limits, and the volunteers who keep them running. From bots that fix grammar to ones that track geopolitical changes in real time, this collection shows how automation isn’t just helpful—it’s necessary to keep Wikipedia alive.

How Wikipedia Bots Support Human Editors with Maintenance Tasks

Wikipedia bots handle thousands of daily maintenance tasks, from fixing broken links to reverting vandalism, freeing human editors to focus on content quality and accuracy. These automated tools are essential to keeping Wikipedia running smoothly.