Edit Tracking on Wikipedia: How Volunteers Monitor Changes and Maintain Accuracy

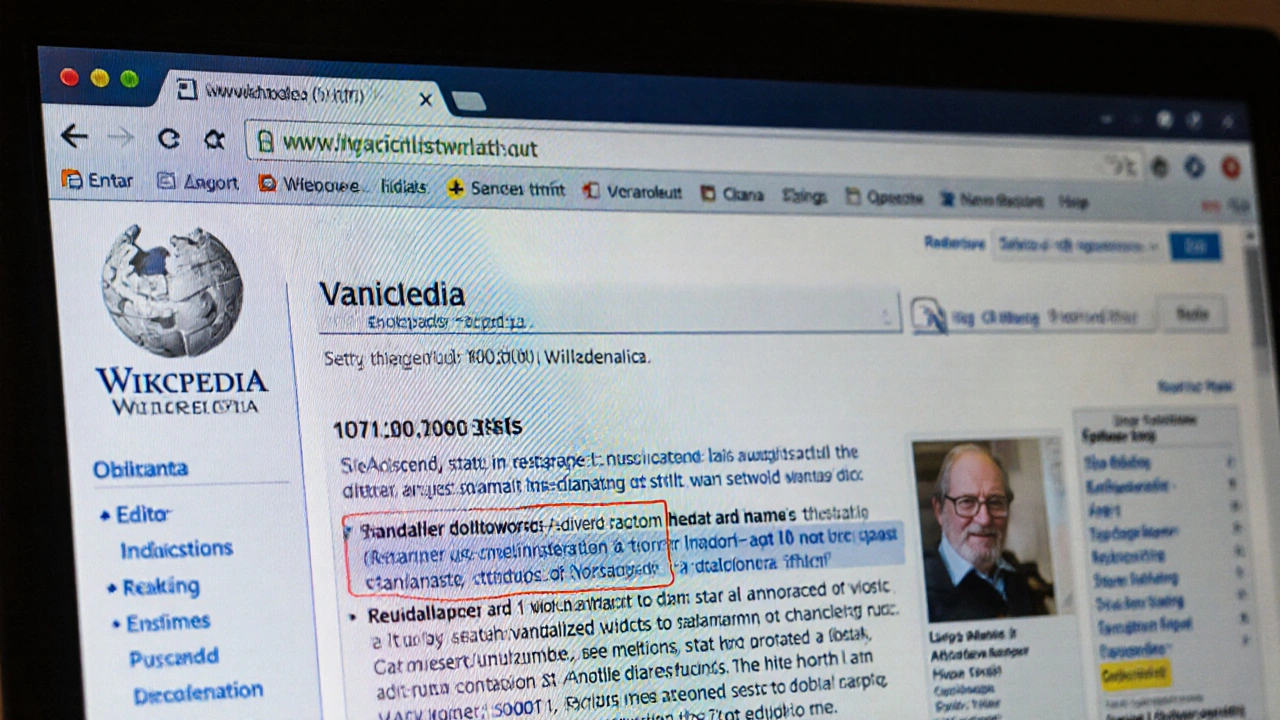

When you edit a Wikipedia page, that change doesn’t just appear quietly. It gets logged, it can be flagged, and it shows up in feeds watched by thousands of volunteers. This process is called edit tracking: the system of tools and human oversight that records, reviews, and responds to every edit made to Wikipedia. Also known as change monitoring, it’s the invisible backbone that keeps Wikipedia from turning into chaos. Without it, false information, spam, and vandalism would spread faster than facts can be corrected.

People who track edits don’t just sit and watch. They use bots, dashboards, and real-time alerts to catch bad edits before readers see them. Two tools let volunteers focus on what matters: Recent Changes, the live feed of every edit made to a given language edition of Wikipedia, and Watchlists, personalized lists that show editors when pages they care about have changed. These aren’t fancy apps. They’re simple, open-source systems built by volunteers, for volunteers. And they work. A 2023 study by the Wikimedia Foundation found that over 90% of harmful edits are reverted within five minutes, mostly by editors using these tracking tools.
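For readers curious what the Recent Changes feed looks like to a script, here is a minimal Python sketch. It assumes the public MediaWiki Action API on English Wikipedia (`list=recentchanges`). The size threshold, the helper names, and the User-Agent string are made up for illustration. Flagging edits that delete a lot of text is only a toy heuristic, not how Wikipedia's patrol tools actually decide what to review.

```python
import json
import urllib.parse
import urllib.request

# English Wikipedia's Action API endpoint; other language editions have their own.
API_URL = "https://en.wikipedia.org/w/api.php"


def build_recent_changes_url(limit=25):
    """Build a query URL for the most recent edits (hypothetical helper)."""
    params = {
        "action": "query",
        "list": "recentchanges",
        "rcprop": "title|user|timestamp|sizes|comment",
        "rctype": "edit",
        "rclimit": str(limit),
        "format": "json",
    }
    return API_URL + "?" + urllib.parse.urlencode(params)


def fetch_recent_changes(limit=25):
    """Download recent edits; needs network access."""
    request = urllib.request.Request(
        build_recent_changes_url(limit),
        # Illustrative User-Agent; a real script should identify itself properly.
        headers={"User-Agent": "edit-tracking-sketch/0.1 (example script)"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    return payload["query"]["recentchanges"]


def large_removals(changes, threshold=1000):
    """Return (title, user, size change) for edits that removed at least `threshold` bytes.

    Toy heuristic for illustration only: large deletions are not always vandalism.
    """
    flagged = []
    for change in changes:
        delta = change.get("newlen", 0) - change.get("oldlen", 0)
        if delta <= -threshold:
            flagged.append((change["title"], change.get("user", "?"), delta))
    return flagged
```

Calling `large_removals(fetch_recent_changes(50))` would list recent edits that cut a page by a kilobyte or more. A human still has to open each one and judge whether it was cleanup or damage.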

But edit tracking isn’t just about stopping bad edits. It’s also about helping good ones. New editors often get scared when their changes are reverted. Edit trackers help them learn why, pointing them to policies, sources, or better wording. It’s not punishment—it’s coaching. And it’s why Wikipedia still has more than 130,000 active editors who check changes every single day, even as AI tools try to automate everything. The real power isn’t in the software—it’s in the people who care enough to watch.

What you’ll find in this collection are stories from the front lines: how volunteers track down sockpuppets; how the Wikipedia Signpost, the community-run newspaper that reports on editing trends and policy debates, covers edit tracking failures; and how AI is starting to mimic, but still can’t replace, human judgment in spotting subtle bias or misinformation. You’ll see how copy editors use tracking to clear backlogs, how anti-vandalism teams respond to coordinated attacks, and why some of the most important edits never make headlines: they just quietly fix a date, correct a name, or restore a deleted source.

Every edit you see on Wikipedia has been through this system. And if you’ve ever wondered how Wikipedia stays reliable, the answer isn’t algorithms—it’s people watching, checking, and caring. That’s edit tracking. And it’s still the best defense against misinformation on the web.

Watchlist Power Tips for Active Wikipedia Editors

Learn how to use Wikipedia's watchlist effectively to track edits, catch vandalism, and improve article quality with smart filtering, daily habits, and cleanup routines for active editors.