Reliable Sources on Wikipedia: What Makes a Source Trustworthy

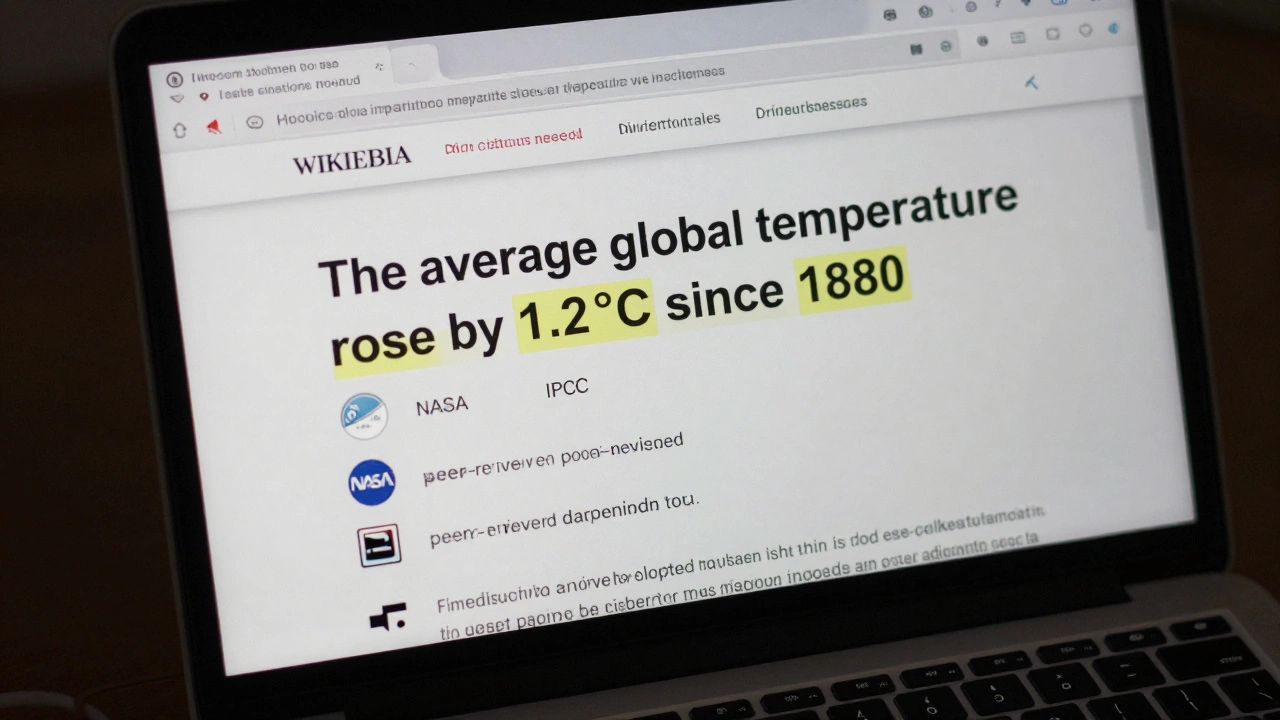

When you see a claim on Wikipedia, it doesn’t just appear out of thin air. It’s backed by a reliable source: a published, verifiable reference that experts recognize as credible and authoritative. Sometimes called a verifiable source, it’s the foundation of everything Wikipedia stands for: verifiable fact, not opinion. Without reliable sources, Wikipedia becomes just another collection of guesses. With them, it becomes one of the most trusted references in the world, even when AI tries to copy it.

Wikipedia doesn’t care how famous a website is, or how many clicks it gets. It cares if the source has editorial oversight, fact-checking, and a track record of accuracy. A peer-reviewed journal? That’s gold. A personal blog? Not even close. Even major news outlets can fail this test if they’re biased, unverified, or reporting rumors as facts. That’s why editors dig into archives, government reports, academic papers, and regional newspapers to find sources that actually support what’s written. This isn’t about prestige—it’s about proof. And when a source doesn’t hold up, the edit gets reverted, no matter how popular the claim seems.

The rules around reliable sources, the standard for determining what information can be included in Wikipedia articles, are strict, but they’re not arbitrary. They exist because people have tried to game the system: corporations pushing PR, activists deleting criticism, bots inserting fake citations. The result? Thousands of articles cleaned up by volunteers who treat sourcing like a legal contract: if you can’t prove it, you can’t say it. This is why Wikipedia beats AI encyclopedias in trust surveys: you can see where the info came from. AI can simply make things up, and sometimes it cites sources that don’t even say what they’re claimed to say.

It’s not just about finding good sources; it’s about using them right. The due weight policy, a rule that ensures articles reflect the real balance of evidence, not just the loudest voices, means even minority views get space, provided they’re backed by reliable sources. And when sources conflict? Editors don’t pick sides. They show the debate, cite the evidence, and let readers decide. That’s why Wikipedia’s approach to citation accuracy, how closely a source matches the claim it supports, matters more than ever. AI tools are getting better at generating fake citations, but they still can’t replicate human judgment. Only a person can spot when a source is being twisted to fit a narrative.

What you’ll find below isn’t just theory—it’s real stories from the front lines. How journalists use Wikipedia to find real sources. Why some Wikipedia articles get deleted because their sources aren’t good enough. How volunteers fight copyright takedowns that erase valuable knowledge. And how AI is starting to mimic Wikipedia’s sourcing—but failing in ways that matter. This is the quiet, relentless work that keeps Wikipedia honest. And it all starts with one question: Can you prove it?

Using Wikidata to Standardize Sources on Wikipedia

Wikidata helps standardize citations on Wikipedia by storing source details in a central database, making citations consistent, verifiable, and automatically updatable across all articles.

How to Resolve Conflicting Citations on Wikipedia

Learn how to handle conflicting sources on Wikipedia by evaluating reliability, presenting both sides neutrally, using meta-analyses, and flagging disputes. Avoid common mistakes and contribute accurately to the world's largest encyclopedia.

How Wikipedia Handles Official Statements vs. Investigative Reporting Sources

Wikipedia doesn't decide truth; it shows you where facts come from. Learn how it weighs official statements against investigative journalism to build accurate, transparent entries.

Wikipedia Citation Templates Explained: Cite News, Cite Journal, and Other Essential Tools

Learn how to use Wikipedia's citation templates like Cite News and Cite Journal to back up claims with reliable sources. Essential guide for editors who want to improve accuracy and avoid common mistakes.
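
As a rough illustration of what a Cite News call looks like in wikitext, here is a minimal Python sketch that assembles one from keyword arguments. The helper name `cite_news` and the sample values are hypothetical and exist only for this example; the parameter names shown (`last`, `first`, `title`, `url`, `work`, `date`, `access-date`) are ones the template accepts, but the sketch does not validate them.

```python
def cite_news(**params: str) -> str:
    """Render a {{cite news}} template call as wikitext.

    Hypothetical helper for illustration: it formats whatever
    parameters it is given and does not check them against the
    template's documentation.
    """
    fields = [
        # Python identifiers cannot contain hyphens, so access_date
        # is written out as access-date in the wikitext.
        f"|{name.replace('_', '-')}={value}"
        for name, value in params.items()
        if value  # skip empty values so no blank fields are emitted
    ]
    return "{{cite news " + " ".join(fields) + "}}"


# Sample values are made up for the example.
citation = cite_news(
    last="Doe",
    first="Jane",
    title="Example headline",
    url="https://example.org/story",
    work="Example Times",
    date="2024-01-15",
    access_date="2024-02-01",
)

# Wrapping the template in <ref> tags turns it into a footnote.
footnote = f"<ref>{citation}</ref>"
print(footnote)
```

The same pattern would apply to Cite Journal with a different template name and parameters such as `journal` and `doi`.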

How to Fact-Check Academic Claims Using Wikipedia and Reliable Sources

Learn how to verify academic claims using Wikipedia as a starting point and reliable sources like Google Scholar and PubMed. Avoid common mistakes and build a solid fact-checking routine for research.

Citation Density on Wikipedia: How Many References Are Enough

Wikipedia's reliability depends on how well its claims are backed by sources. Learn how many citations are enough, what counts as reliable, and how to spot weak references.
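
One way to get a rough feel for citation density is to count footnotes against the amount of prose. The Python sketch below does this for a snippet of wikitext. The "citations per 100 words" measure and the function names are assumptions made for this example, not an official Wikipedia metric, and the regular expression only handles simple `<ref>` usage.

```python
import re

# Matches <ref>...</ref>, <ref name="x">...</ref>, and the
# self-closing reuse form <ref name="x" />. The \b keeps the
# pattern from matching the <references /> list tag.
REF_PATTERN = re.compile(
    r"<ref\b[^>/]*>.*?</ref>|<ref\b[^>]*/>",
    re.DOTALL,
)


def count_citations(wikitext: str) -> int:
    """Count footnote markers in a piece of wikitext."""
    return len(REF_PATTERN.findall(wikitext))


def citation_density(wikitext: str) -> float:
    """Return citations per 100 words of prose (illustrative measure)."""
    # Strip the footnotes first so their contents are not counted as prose.
    prose = REF_PATTERN.sub(" ", wikitext)
    words = re.findall(r"\b\w+\b", prose)
    if not words:
        return 0.0
    return count_citations(wikitext) / len(words) * 100


sample = (
    "Paris is the capital of France.<ref>{{cite web |title=France}}</ref> "
    'It lies on the Seine.<ref name="seine" /> It is large.'
)
print(count_citations(sample), round(citation_density(sample), 1))
```

A number like this says nothing about whether the cited sources are reliable; it only flags long stretches of prose that carry no references at all.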

How to Recover Sources to Save a Wikipedia Article from Deletion

Learn how to find and add reliable sources to save a Wikipedia article from deletion. Step-by-step guidance on locating credible references, citing them properly, and responding to deletion nominations.

Correcting the Record: Off-Wiki Statements vs. On-Wiki Evidence

Off-wiki statements like tweets and press releases don't override on-wiki evidence. Wikipedia relies on verified, independent sources, not claims from public figures. Learn why documented facts beat loud opinions.

Rapid Citation Management in Wikipedia During News Events

During breaking news events, Wikipedia updates rapidly with accurate citations using verified sources, automated tools, and a global network of volunteers. Learn how real-time fact-checking keeps Wikipedia reliable when it matters most.

How to Detect and Remove Original Research on Wikipedia

Learn how to identify and remove original research on Wikipedia. The policy against it is key to keeping the encyclopedia reliable. Understand what counts as unsourced analysis and how to fix it without breaking community rules.

When Wikipedia Allows Self-Published Sources and Why It Rarely Does

Wikipedia rarely accepts self-published sources because they lack independent verification. Learn when exceptions are made and why reliable, third-party sources are required to maintain accuracy and trust.

How Wikipedia Handles Retractions and Corrections in Cited Sources

Wikipedia updates its articles when cited sources are retracted or corrected, relying on community vigilance and strict sourcing policies to keep information accurate and transparent.