Wikipedia reliability: How trusted knowledge stays accurate and what threatens it

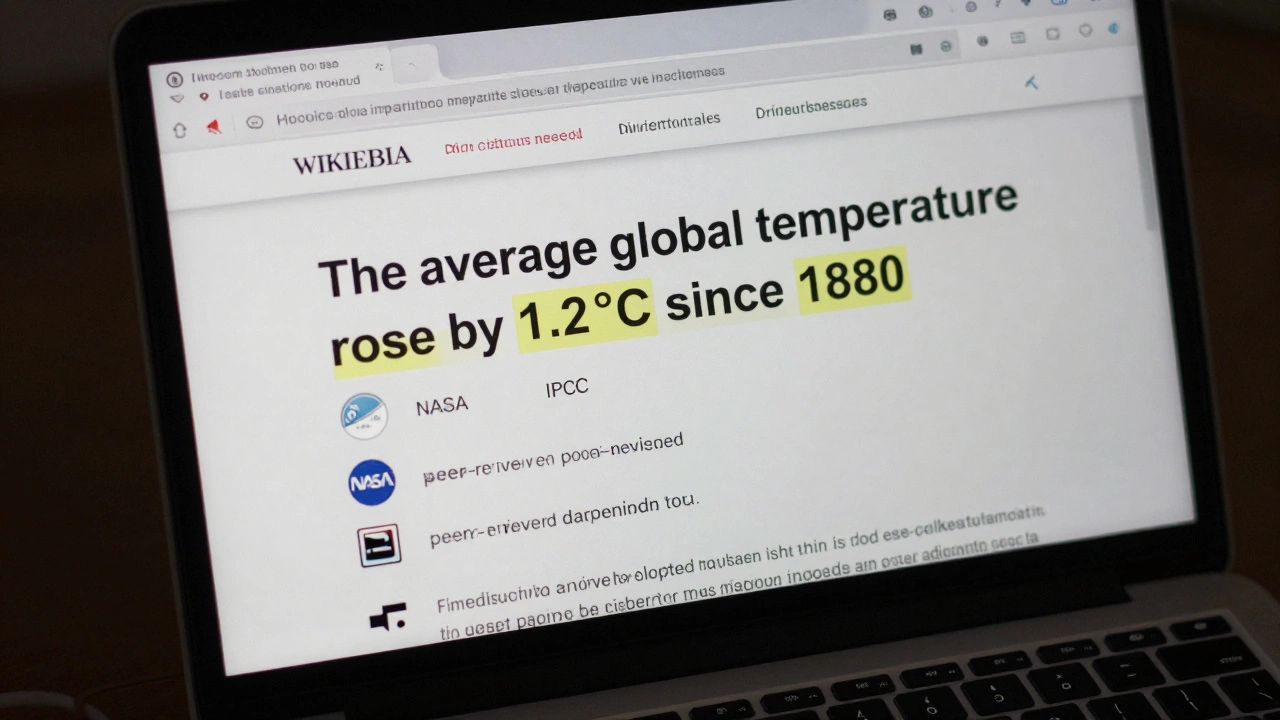

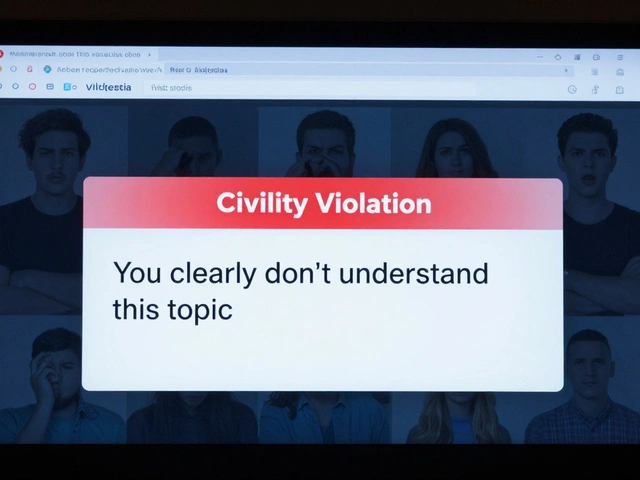

When you need to know something fast, you lean on Wikipedia reliability: the collective trust in Wikipedia as a source of accurate, verified information built by volunteers. Also known as crowdsourced accuracy, it’s what makes millions turn to Wikipedia before Google or social media. But here’s the twist: it’s not reliable because it’s perfect. It’s reliable because it’s constantly checked, challenged, and fixed by people who care. Every edit is tracked. Every claim needs a source. Every bias gets called out. That’s not magic; it’s policy, process, and a lot of late-night editing.

Behind that trust are reliable sources: published, peer-reviewed, or otherwise authoritative materials such as academic journals, books, and major news outlets that Wikipedia editors must cite to back up facts. You can’t just write "this happened"; you need a source that says so. That’s why self-published or promotional material like personal blogs and press releases rarely works. Wikipedia policies, the formal rules that govern how content is added, edited, and removed, include "no original research" and "due weight". They make sure articles reflect what reliable sources say rather than editors’ opinions or whatever happens to be popular. And when AI encyclopedias (automated knowledge platforms that pull data from open sources but often misrepresent or fabricate citations) generate fake citations or rewrite articles without context, they look shiny but feel hollow. People still trust Wikipedia more because they can see the sources, track the changes, and even fix mistakes themselves.

It’s not all smooth. Copyright takedowns erase good content. Systemic bias hides voices. Volunteer burnout slows updates. But the system keeps working because people show up—not for money, but because they believe knowledge should be free and fair. What you’ll find below are real stories from inside Wikipedia: how editors fight misinformation, why some articles survive while others vanish, how AI is changing the game, and what happens when a community decides what’s true.

How Wikipedia Handles Pseudoscience vs. Mainstream Science

Wikipedia doesn't declare what's true; it reports what reliable sources say. Learn how it distinguishes mainstream science from pseudoscience using citations, consensus, and proportional representation.

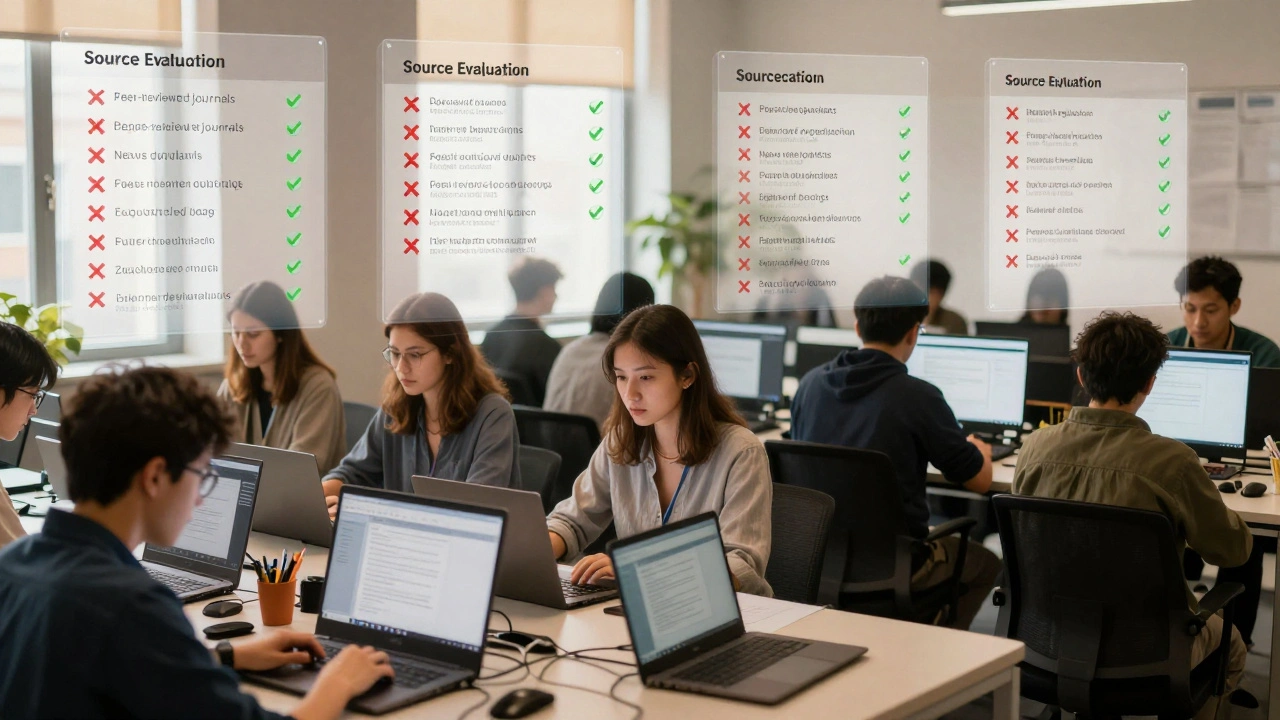

How to Evaluate Wikipedia Article Quality Before Citing in Academia

Learn how to evaluate Wikipedia articles for academic use by checking citations, edit history, and quality ratings. Discover why professors discourage direct citations, and how to use Wikipedia as a gateway to credible sources.

How Press Freedom Shapes the Reliability of News Sources on Wikipedia

Press freedom ensures accurate, independent journalism, which is the foundation of reliable information on Wikipedia. Without it, Wikipedia's content becomes incomplete, biased, or outdated.

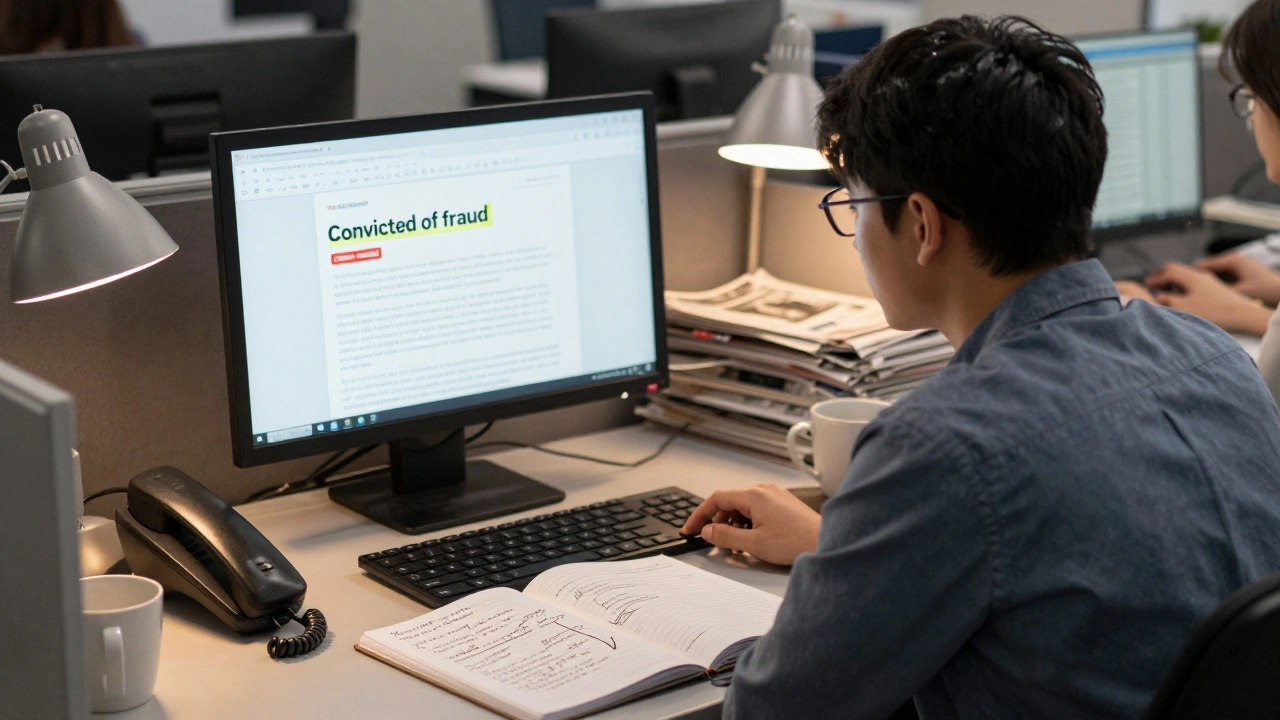

Handling Living Person Disputes on Wikipedia: BLP Best Practices

Learn how to handle disputes over living person biographies on Wikipedia using the BLP policy. Discover what sources are valid, how to respond to false claims, and why neutrality matters more than speed.

Citation Density on Wikipedia: How Many References Are Enough

Wikipedia's reliability depends on how well its claims are backed by sources. Learn how many citations are enough, what counts as reliable, and how to spot weak references.

How Wikipedia News Coverage Shapes Editorial Decisions in Journalism

Wikipedia’s real-time edits influence how journalists verify and prioritize stories. While it is not a citable source in itself, it acts as a barometer for public understanding and shapes editorial decisions in subtle but powerful ways.

How to Build a Newsroom Policy for Wikipedia Use and Citation

A clear policy for using Wikipedia in journalism helps prevent misinformation. Learn how to train reporters, verify sources, and avoid citing Wikipedia directly in published stories.

How Wikipedia Updates Articles After Major News Events

Wikipedia updates articles after major news events by relying on verified sources and a global network of volunteer editors. It prioritizes accuracy over speed, waiting for confirmation before making changes. This process can make it more dependable than early news reports in the first hours after a story breaks.

How Citation Density Affects Perceived Reliability on Wikipedia

Citation density on Wikipedia directly shapes how reliable readers perceive an article to be. More consistent, high-quality citations build trust, even if users don’t read them. This is why editing Wikipedia isn’t just about facts; it’s about signaling accountability.

Curating News Candidates on Wikipedia: Criteria and Real Examples

Wikipedia doesn't post breaking news instantly. It waits for multiple reliable sources to confirm events before creating articles. Learn the criteria and real examples of what makes the cut and what doesn't.

Reliable Sources Noticeboard: How Community Decisions Shape Source Quality

The Reliable Sources Noticeboard is Wikipedia's community-driven system for evaluating source quality. Learn how volunteers decide what sources are trustworthy, and why it matters for everyone who uses online information.

Media Criticism of Wikipedia: Common Patterns and How Wikipedia Responds

Media often criticizes Wikipedia for bias and inaccuracies, but its open model allows rapid correction. This article explores common criticisms, how Wikipedia responds, and why it remains the most transparent reference tool online.